I've been stuck on this for days and still can't get any data back. I can't figure out where it's going wrong.

import requests

from lxml import etree

import lxml

from bs4 import BeautifulSoup

url = 'https://apic.liepin.com/api/com.liepin.searchfront4c.pc-search-job'

head = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36',

'X-Client-Type': 'web',

'X-Fscp-Bi-Stat': '{"location": "https://www.liepin.com/zhaopin/?city=410&dq=410&pubTime=&currentPage=2&pageSize=40&key=python&suggestTag=&workYearCode=0&compId=&compName=&compTag=&industry=&salary=&jobKind=&compScale=&compKind=&compStage=&eduLevel=&scene=page&suggestId="}',

'X-Fscp-Std-Info': '{"client_id": "40108"}',

'X-Fscp-Trace-Id': '3dd1e997-a976-40ca-a2dc-1341c3a936f8',

'X-Fscp-Version': '1.1',

'X-Requested-With': 'XMLHttpRequest',

# 'X-XSRF-TOKEN': 'akNB8zNITCySkUDudNhWog',

}

# 0, 1, 2, 3, 4

data = {"data": {

"mainSearchPcConditionForm": {"city": "410", "dq": "410", "pubTime": "", "currentPage": "3", "pageSize": 40,

"key": "python", "suggestTag": "", "workYearCode": "0", "compId": "", "compName": "",

"compTag": "", "industry": "", "salary": "", "jobKind": "", "compScale": "",

"compKind": "", "compStage": "", "eduLevel": ""},

"passThroughForm": {"scene": "page", "ckId": "43a59xkh5vjfpt1qf4axal3q1l3scrqa"}}}

# Based on the Wuhai city example

resp = requests.post(url, json=data, headers=head)  # POST request that carries the data payload

div = resp.content.decode('utf-8')

tree = etree.HTML(div)

print(tree)

lis_ = tree.xpath('//div[@class="job-list-box"]')

print(lis_)

Your div is JSON data, so why are you parsing it with lxml?

Decode it as JSON right after your div line instead:

import json

div = resp.content.decode('utf-8')

j = json.loads(div)

for item in j['data']['data']['jobCardList']:

    print(item)
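The same idea can be tried offline before hitting the API. This is only a minimal sketch: `sample_body` is a made-up string that mimics the `data` -> `data` -> `jobCardList` nesting used above, the `title` field inside each card is hypothetical, and `parse_job_cards` is a helper name introduced here for illustration.

```python
import json

# Made-up body that only imitates the nesting used above
# (data -> data -> jobCardList); the "title" field is hypothetical.
sample_body = (
    '{"data": {"data": {"jobCardList": ['
    '{"job": {"title": "Python Developer"}}, '
    '{"job": {"title": "Data Engineer"}}]}}}'
)


def parse_job_cards(text):
    """Decode a JSON response body and return its jobCardList entries."""
    try:
        parsed = json.loads(text)
    except json.JSONDecodeError:
        # The body was not JSON at all (for example an HTML error page).
        return []
    if not isinstance(parsed, dict):
        return []
    # "or {}" guards against a missing or null level in the nesting.
    inner = (parsed.get("data") or {}).get("data") or {}
    return inner.get("jobCardList") or []


for card in parse_job_cards(sample_body):
    print(card["job"]["title"])
```

Returning an empty list instead of raising makes it easy to tell "no results" apart from a crash, which helps when the server answers with something other than the expected JSON.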

import requests

from pprint import pprint

url = 'https://apic.liepin.com/api/com.liepin.searchfront4c.pc-search-job'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36',

'X-Client-Type': 'web',

'X-Fscp-Bi-Stat': '{"location": "https://www.liepin.com/zhaopin/?city=410&dq=410&pubTime=&currentPage=2&pageSize=40&key=python&suggestTag=&workYearCode=0&compId=&compName=&compTag=&industry=&salary=&jobKind=&compScale=&compKind=&compStage=&eduLevel=&scene=page&suggestId="}',

'X-Fscp-Std-Info': '{"client_id": "40108"}',

'X-Fscp-Trace-Id': '3dd1e997-a976-40ca-a2dc-1341c3a936f8',

'X-Fscp-Version': '1.1',

'X-Requested-With': 'XMLHttpRequest',

# 'X-XSRF-TOKEN': 'akNB8zNITCySkUDudNhWog',

}

# 0, 1, 2, 3, 4

payload = {"data": {

"mainSearchPcConditionForm": {"city": "410", "dq": "410", "pubTime": "", "currentPage": "3", "pageSize": 40,

"key": "python", "suggestTag": "", "workYearCode": "0", "compId": "", "compName": "",

"compTag": "", "industry": "", "salary": "", "jobKind": "", "compScale": "",

"compKind": "", "compStage": "", "eduLevel": ""},

"passThroughForm": {"scene": "page", "ckId": "43a59xkh5vjfpt1qf4axal3q1l3scrqa"}}}

# Based on the Wuhai city example

resp = requests.post(url, json=payload, headers=headers)  # POST request that carries the JSON payload

if resp.status_code == 200:

data = resp.json()

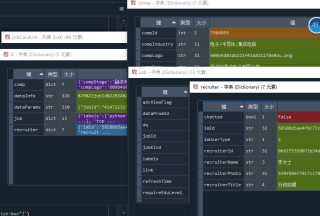

result = data.get('data').get('data').get('jobCardList')

## result 是一个列表,列表中有很多字典,通过循环就可以获取到里面的数据信息了

pprint(result)

# for item in result:

# print(item.get('job'))
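Since the payload carries a `currentPage` field, the same request can in principle be repeated for several pages. The sketch below is an assumption-laden illustration, not the site's documented behavior: `payload_for_page` and `collect_jobs` are hypothetical helper names, the page numbering is assumed to follow the `# 0, 1, 2, 3, 4` comment, and stopping at the first empty page is a design choice made here.

```python
import copy


def payload_for_page(base_payload, page):
    """Return a copy of base_payload with currentPage set to the given page."""
    payload = copy.deepcopy(base_payload)  # leave the caller's dict untouched
    # The original payload stores currentPage as a string, so keep that type.
    payload["data"]["mainSearchPcConditionForm"]["currentPage"] = str(page)
    return payload


def collect_jobs(fetch_page, base_payload, pages):
    """Call fetch_page(payload) for each page and gather every job card.

    fetch_page is any callable that takes a payload dict and returns the
    decoded JSON body as a dict.
    """
    jobs = []
    for page in pages:
        body = fetch_page(payload_for_page(base_payload, page))
        inner = (body.get("data") or {}).get("data") or {}
        cards = inner.get("jobCardList") or []
        if not cards:
            break  # assumed: an empty page means there is nothing further
        jobs.extend(cards)
    return jobs
```

With the variables from the answer above, `fetch_page` could be `lambda p: requests.post(url, json=p, headers=headers).json()`, called as `collect_jobs(fetch_page, payload, range(5))`. Passing the fetch function in keeps the paging logic testable without touching the network.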