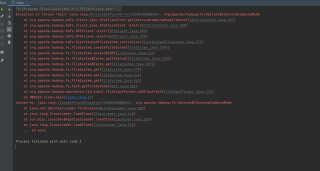

org.apache.hadoop.fs.Options$ChecksumCombineMode

Help!

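I can't tell what is going wrong in the MapReduce stage. The job keeps failing with this error. It used to run fine and then suddenly stopped working.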

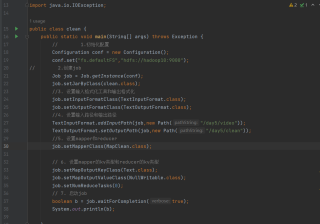

Relevant code

    package HW0820;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

    import java.io.IOException;
    import java.util.Arrays;

    public class clean {

        public static void main(String[] args) throws Exception {
            // 1. Initialize the configuration
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://hadoop10:9000");

            // 2. Create the job
            Job job = Job.getInstance(conf);
            job.setJarByClass(clean.class);

            // 3. Set the input and output formats
            job.setInputFormatClass(TextInputFormat.class);
            job.setOutputFormatClass(TextOutputFormat.class);

            // 4. Set the input and output paths
            TextInputFormat.addInputPath(job, new Path("/day5/video"));
            TextOutputFormat.setOutputPath(job, new Path("/day5/clean"));

            // 5. Set the mapper (this is a map-only job, so there is no reducer)
            job.setMapperClass(MapClean.class);

            // 6. Set the mapper output key/value types and disable the reduce phase
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(NullWritable.class);
            job.setNumReduceTasks(0);

            // 7. Run the job and print whether it succeeded
            boolean b = job.waitForCompletion(true);
            System.out.println(b);
        }

        static class MapClean extends Mapper<LongWritable, Text, Text, NullWritable> {

            @Override
            protected void map(LongWritable key, Text value, Context context)
                    throws IOException, InterruptedException {
                // Strip spaces, then split into at most 10 tab-separated fields;
                // the last field keeps the remaining tab-separated ids.
                String[] split = value.toString().replace(" ", "").split("\t", 10);
                String[] split1 = split[split.length - 1].split("\t");

                // Join at most 20 ids with "&", without a trailing separator.
                String spid = String.join("&", Arrays.copyOf(split1, Math.min(20, split1.length)));

                // Join the leading fields with commas, then append the ids.
                StringBuilder a = new StringBuilder();
                for (int n = 0; n < split.length - 1; n++) {
                    a.append(split[n]).append(',');
                }
                context.write(new Text(a.append(spid).toString()), NullWritable.get());
            }
        }
    }
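
To rule out the cleaning logic itself, the body of `map` can be exercised without a cluster. The sketch below is only an illustration: `RecordCleaner` is a hypothetical helper name, the sample line is made up, and it assumes the intent is "first nine fields joined by commas, then at most 20 related ids joined by `&`".

```java
import java.util.Arrays;

// Hypothetical helper (not in the original post): the cleaning step from
// MapClean.map, extracted so it can run without Hadoop on the classpath.
final class RecordCleaner {
    private RecordCleaner() {}

    static String clean(String line) {
        // Remove spaces, then split into at most 10 tab-separated fields.
        String[] fields = line.replace(" ", "").split("\t", 10);
        // The last field still holds the remaining tab-separated ids.
        String[] ids = fields[fields.length - 1].split("\t");
        // Keep at most 20 ids, joined by "&" with no trailing separator.
        String related = String.join("&", Arrays.copyOf(ids, Math.min(20, ids.length)));

        StringBuilder out = new StringBuilder();
        for (int n = 0; n < fields.length - 1; n++) {
            out.append(fields[n]).append(',');
        }
        return out.append(related).toString();
    }

    public static void main(String[] args) {
        // Made-up sample record, for illustration only.
        String line = "v1\tup loader\t10\tPeople & Blogs\t5\t6\t7\t8\t9\tr1\tr2";
        System.out.println(clean(line));
        // prints: v1,uploader,10,People&Blogs,5,6,7,8,9,r1&r2
    }
}
```

If this behaves as expected, the failure is more likely on the classpath side than in the mapper.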

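Run results and error output

My approach and what I have tried

I tried restarting, deleting the dependencies, and copying the Apache packages someone else had downloaded with Maven. None of it worked.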

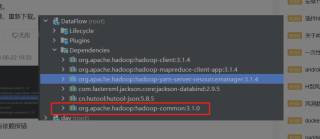

Most likely this dependency conflicts with your other dependencies. Try removing its entry from your pom file; that should fix it.

This is a class-not-found exception, which means it is a dependency problem.
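
To see which jar each class is actually loaded from, a small probe like the one below can help. This is a rough sketch, not a definitive diagnosis: `ClasspathProbe` and `locate` are names I made up, and the class list is just a guess at what is worth checking. As far as I know, `ChecksumCombineMode` is a nested type of `org.apache.hadoop.fs.Options` in hadoop-common, so if it is missing while the HDFS client classes load, the hadoop-common jar on the classpath is probably older than the other Hadoop jars.

```java
import java.security.CodeSource;

// Hypothetical diagnostic helper: reports where a class is loaded from,
// or that it cannot be found on the current classpath.
final class ClasspathProbe {
    private ClasspathProbe() {}

    static String locate(String className) {
        try {
            // Load without initializing, using this class's own class loader.
            Class<?> c = Class.forName(className, false, ClasspathProbe.class.getClassLoader());
            CodeSource src = c.getProtectionDomain().getCodeSource();
            // JDK classes loaded by the bootstrap loader have no code source.
            return src == null ? "(bootstrap/JDK)" : String.valueOf(src.getLocation());
        } catch (ClassNotFoundException | LinkageError e) {
            return "NOT FOUND: " + e;
        }
    }

    public static void main(String[] args) {
        // Class names chosen for illustration; adjust to what your stack trace shows.
        String[] names = {
            "org.apache.hadoop.fs.Options$ChecksumCombineMode",
            "org.apache.hadoop.fs.FileSystem",
            "org.apache.hadoop.hdfs.DistributedFileSystem"
        };
        for (String n : names) {
            System.out.println(n + " -> " + locate(n));
        }
    }
}
```

Run it from the same project (same classpath) as the job. If the printed jar names carry different Hadoop version numbers, aligning them in the pom is the first thing to try.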

Delete the failing dependencies from your local Maven repository and download them again.

When IDEA runs the job against local data, hidden crc files are generated. Deleting the `.xxx.crc` files under that path is enough.
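
If you are in the local-file case and there are many of these files, the cleanup can be scripted. A minimal sketch, assuming the hidden checksum files follow the `.<name>.crc` pattern; `CrcCleaner` and `deleteCrcFiles` are hypothetical names, and the directory is passed in by you:

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper: deletes hidden ".<name>.crc" files under a local directory.
final class CrcCleaner {
    private CrcCleaner() {}

    static List<Path> deleteCrcFiles(Path dir) throws IOException {
        List<Path> targets;
        // Collect first, then delete, so the directory walk is closed before modifying it.
        try (Stream<Path> walk = Files.walk(dir)) {
            targets = walk.filter(Files::isRegularFile)
                    .filter(p -> {
                        String name = p.getFileName().toString();
                        return name.startsWith(".") && name.endsWith(".crc");
                    })
                    .collect(Collectors.toList());
        }
        for (Path p : targets) {
            Files.delete(p);
        }
        return targets;
    }

    public static void main(String[] args) throws IOException {
        // Usage: java CrcCleaner <local-data-directory>
        for (Path p : deleteCrcFiles(Paths.get(args[0]))) {
            System.out.println("deleted " + p);
        }
    }
}
```

It only touches files whose names start with `.` and end with `.crc`; the data files themselves are left alone.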