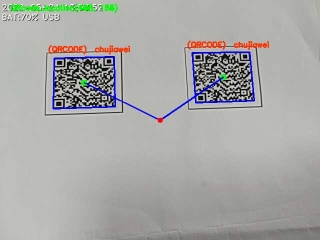

How to detect two QR codes with OpenCV and compute the distance from the camera to the center of the image

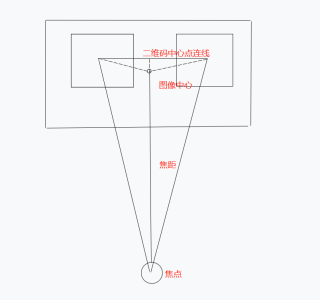

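As shown in the figure, a sheet of paper has two QR codes on it. How can I use OpenCV to compute the distance from the camera lens to the center point between the two QR codes, and then use the lens's offset from that center together with the computed depth to work out the offset angle?

So far I can detect the QR codes and compute the offset.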

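1. If you know the camera's focal length, you can compute it like this:

Take the physical size of the two QR codes' outlines and their size in pixels in the image, then use the camera's focal length to compute the real distance from the camera to the object. The process involves several coordinate-system transformations. See this for reference: https://wenku.baidu.com/view/a623c3f487254b35eefdc8d376eeaeaad1f31602.html

Measuring the distance between objects in an image with OpenCV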
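The calculation described above can be sketched with similar triangles. This is a minimal illustration, not a calibrated implementation: it assumes an ideal pinhole camera with no lens distortion, a focal length already expressed in pixels (for example from a calibration step), and a QR code that roughly faces the camera. The function names and all numbers are hypothetical.

```python
import math


def distance_from_size(focal_px, real_width, pixel_width):
    """Pinhole model: depth Z = f * W / w.

    focal_px    -- focal length in pixels (assumed known from calibration)
    real_width  -- physical width of the QR code, in any length unit
    pixel_width -- width of the same QR code in the image, in pixels
    """
    if pixel_width <= 0:
        raise ValueError("pixel_width must be positive")
    return focal_px * real_width / pixel_width


def lateral_offset(offset_px, depth, focal_px):
    """Back-project a pixel offset to a real-world offset at the given depth."""
    return offset_px * depth / focal_px


def offset_angle_deg(offset_px, focal_px):
    """Angle between the optical axis and the ray through the target point."""
    return math.degrees(math.atan2(offset_px, focal_px))


if __name__ == "__main__":
    focal_px = 800.0     # assumed focal length in pixels
    qr_width_mm = 50.0   # assumed printed width of one QR code
    qr_width_px = 100.0  # assumed measured width in the image
    offset_px = 80.0     # assumed offset of the QR midpoint from the image center

    depth_mm = distance_from_size(focal_px, qr_width_mm, qr_width_px)
    shift_mm = lateral_offset(offset_px, depth_mm, focal_px)
    angle = offset_angle_deg(offset_px, focal_px)
    print(f"depth={depth_mm:.1f} mm, offset={shift_mm:.1f} mm, angle={angle:.2f} deg")
```

Note that the angle depends only on the pixel offset and the focal length; computing `atan2(shift_mm, depth_mm)` from the real-world offset and depth gives the same value, which is the form the question asks for.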

https://mp.weixin.qq.com/s/XvbkQm6x_zrnT8kU8i0iew

#!/usr/bin/env python
# -*- coding: UTF-8 -*-
# ================================================================
# Copyright (C) 2019 * Ltd. All rights reserved.
# Project : pythonTest
# File name : distance_between.py
# Author : yoyo
# Contact : cs_jxau@163.com
# Created date: 2021-03-08 10:03:26
# Editor : yoyo
# Modify Time : 2021-03-08 10:03:26
# Version : 1.0
# IDE : PyCharm2020
# License : Copyright (C) 2017-2021
# Description : Measure the distance between objects in an image with OpenCV
# https://mp.weixin.qq.com/s/XvbkQm6x_zrnT8kU8i0iew
# python distance_between.py --image ./images/example_01.png --width 0.955
# ================================================================

# import the necessary packages
from scipy.spatial import distance as dist
from imutils import perspective
from imutils import contours
import numpy as np
import argparse
import imutils
import cv2


def midpoint(ptA, ptB):
    return ((ptA[0] + ptB[0]) * 0.5, (ptA[1] + ptB[1]) * 0.5)


# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-i", "--image", required=True, help="path to the input image")
ap.add_argument("-w", "--width", type=float, required=True,
                help="width of the left-most object in the image (in inches)")
args = vars(ap.parse_args())

# load the image, convert it to grayscale, and blur it slightly
image = cv2.imread(args["image"])
if image is None:
    raise SystemExit("could not read image: {}".format(args["image"]))
gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
gray = cv2.GaussianBlur(gray, (7, 7), 0)

# perform edge detection, then a dilation + erosion to close gaps
# between object edges
edged = cv2.Canny(gray, 50, 100)
edged = cv2.dilate(edged, None, iterations=1)
edged = cv2.erode(edged, None, iterations=1)

# find contours in the edge map
cnts = cv2.findContours(edged.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
cnts = imutils.grab_contours(cnts)

# sort the contours from left to right, then initialize the
# distance colors and the reference object
(cnts, _) = contours.sort_contours(cnts)
colors = ((0, 0, 255), (240, 0, 159), (0, 165, 255), (255, 255, 0), (255, 0, 255))
refObj = None

# loop over the contours individually
for c in cnts:
    # if the contour is not sufficiently large, ignore it
    if cv2.contourArea(c) < 100:
        continue

    # compute the rotated bounding box of the contour
    box = cv2.minAreaRect(c)
    box = cv2.cv.BoxPoints(box) if imutils.is_cv2() else cv2.boxPoints(box)
    box = np.array(box, dtype="int")

    # order the points in the contour such that they appear
    # in top-left, top-right, bottom-right, and bottom-left order
    box = perspective.order_points(box)

    # compute the center of the bounding box
    cX = np.average(box[:, 0])
    cY = np.average(box[:, 1])

    # if this is the first contour we are examining (i.e.,
    # the left-most contour), we presume this is the reference object
    if refObj is None:
        # unpack the ordered bounding box, then compute the
        # midpoint of the left edge (top-left to bottom-left) and
        # the midpoint of the right edge (top-right to bottom-right)
        (tl, tr, br, bl) = box
        (tlblX, tlblY) = midpoint(tl, bl)
        (trbrX, trbrY) = midpoint(tr, br)

        # compute the Euclidean distance between the midpoints (the
        # object's width in pixels), then construct the reference
        # object as (box, center, pixels per unit of width)
        D = dist.euclidean((tlblX, tlblY), (trbrX, trbrY))
        refObj = (box, (cX, cY), D / args["width"])
        continue

    # draw the contours on the image
    orig = image.copy()
    cv2.drawContours(orig, [box.astype("int")], -1, (0, 255, 0), 2)
    cv2.drawContours(orig, [refObj[0].astype("int")], -1, (0, 255, 0), 2)

    # stack the reference coordinates and the object coordinates
    # to include the object center
    refCoords = np.vstack([refObj[0], refObj[1]])
    objCoords = np.vstack([box, (cX, cY)])

    # loop over the original points
    for ((xA, yA), (xB, yB), color) in zip(refCoords, objCoords, colors):
        # draw circles corresponding to the current points and
        # connect them with a line
        cv2.circle(orig, (int(xA), int(yA)), 5, color, -1)
        cv2.circle(orig, (int(xB), int(yB)), 5, color, -1)
        cv2.line(orig, (int(xA), int(yA)), (int(xB), int(yB)), color, 2)

        # compute the Euclidean distance between the coordinates,
        # then convert the distance in pixels to a distance in units
        D = dist.euclidean((xA, yA), (xB, yB)) / refObj[2]
        (mX, mY) = midpoint((xA, yA), (xB, yB))
        cv2.putText(orig, "{:.1f}in".format(D), (int(mX), int(mY - 10)),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.55, color, 2)

        # show the output image
        cv2.imshow("Image", orig)
        cv2.waitKey(0)
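The core idea of the script above is the pixels-per-unit ratio taken from a reference object of known width. Stripped of the OpenCV contour handling, that conversion might look like the sketch below, applied here to the offset between the image center and the midpoint of two QR code centers. The helper names and coordinates are made up for illustration, and the ratio only holds for points lying in the same plane as the reference object, viewed roughly head-on.

```python
import math


def midpoint(pt_a, pt_b):
    """Midpoint of two (x, y) points."""
    return ((pt_a[0] + pt_b[0]) * 0.5, (pt_a[1] + pt_b[1]) * 0.5)


def pixels_per_unit(ref_pixel_width, ref_real_width):
    """Ratio of image pixels to real-world units, from a reference object."""
    if ref_real_width <= 0:
        raise ValueError("ref_real_width must be positive")
    return ref_pixel_width / ref_real_width


def real_distance(pt_a, pt_b, ratio):
    """Convert the pixel distance between two points to real-world units."""
    pixel_dist = math.hypot(pt_b[0] - pt_a[0], pt_b[1] - pt_a[1])
    return pixel_dist / ratio


if __name__ == "__main__":
    # Assumed values: a QR code 0.75 units wide that spans 150 px in the image.
    ratio = pixels_per_unit(150.0, 0.75)

    # Assumed centers of the two detected QR codes and of a 640x480 image.
    qr_mid = midpoint((300.0, 240.0), (500.0, 240.0))
    image_center = (320.0, 240.0)

    offset = real_distance(image_center, qr_mid, ratio)
    print("offset of QR midpoint from image center: {:.2f} units".format(offset))
```

This gives an in-plane offset only; it does not by itself yield the camera-to-paper depth, which still needs the focal length or a calibration shot at a known distance.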