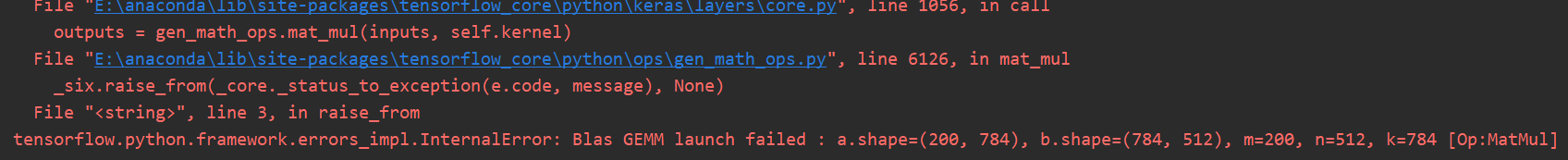

运行tensorflow时出现tensorflow.python.framework.errors_impl.InternalError: Blas GEMM launch failed这个错误

运行tensorflow时出现tensorflow.python.framework.errors_impl.InternalError: Blas GEMM launch failed这个错误,查了一下说是gpu被占用了,从下面这里开始出问题的:

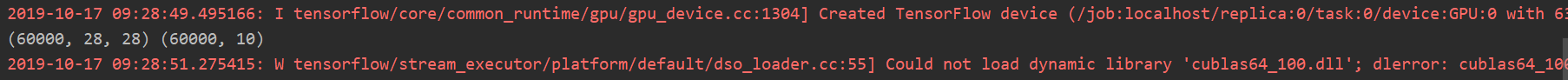

2019-10-17 09:28:49.495166: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1304] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 6382 MB memory) -> physical GPU (device: 0, name: GeForce GTX 1070, pci bus id: 0000:01:00.0, compute capability: 6.1)

(60000, 28, 28) (60000, 10)

2019-10-17 09:28:51.275415: W tensorflow/stream_executor/platform/default/dso_loader.cc:55] Could not load dynamic library 'cublas64_100.dll'; dlerror: cublas64_100.dll not found

最后显示的问题:

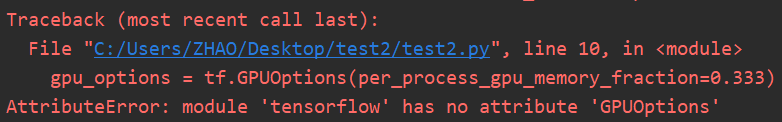

试了一下网上的方法,比如加代码:

gpu_options = tf.GPUOptions(per_process_gpu_memory_fraction=0.333)

sess = tf.Session(config=tf.ConfigProto(gpu_options=gpu_options))

但最后提示:

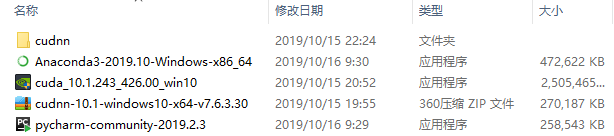

现在不知道要怎么解决了。新手想试下简单的数字识别,步骤也是按教程一步步来的,可能用的版本和教程不一样,我用的是刚下的:2.0tensorflow和以下:

不知道会不会有版本问题,现在紧急求助各位大佬,还有没有其它可以尝试的方法。测试程序加法运算可以执行,数字识别图片运行的时候我看了下,GPU最大占有率才0.2%,下面是完整数字图片识别代码:

import os

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers, optimizers, datasets

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

#gpu_options = tf.GPUOptions(per_process_gpu_memory_fraction=0.2)

#sess = tf.Session(config=tf.ConfigProto(gpu_options=gpu_options))

gpu_options = tf.GPUOptions(per_process_gpu_memory_fraction=0.333)

sess = tf.Session(config=tf.ConfigProto(gpu_options=gpu_options))

(x, y), (x_val, y_val) = datasets.mnist.load_data()

x = tf.convert_to_tensor(x, dtype=tf.float32) / 255.

y = tf.convert_to_tensor(y, dtype=tf.int32)

y = tf.one_hot(y, depth=10)

print(x.shape, y.shape)

train_dataset = tf.data.Dataset.from_tensor_slices((x, y))

train_dataset = train_dataset.batch(200)

model = keras.Sequential([

layers.Dense(512, activation='relu'),

layers.Dense(256, activation='relu'),

layers.Dense(10)])

optimizer = optimizers.SGD(learning_rate=0.001)

def train_epoch(epoch):

# Step4.loop

for step, (x, y) in enumerate(train_dataset):

with tf.GradientTape() as tape:

# [b, 28, 28] => [b, 784]

x = tf.reshape(x, (-1, 28 * 28))

# Step1. compute output

# [b, 784] => [b, 10]

out = model(x)

# Step2. compute loss

loss = tf.reduce_sum(tf.square(out - y)) / x.shape[0]

# Step3. optimize and update w1, w2, w3, b1, b2, b3

grads = tape.gradient(loss, model.trainable_variables)

# w' = w - lr * grad

optimizer.apply_gradients(zip(grads, model.trainable_variables))

if step % 100 == 0:

print(epoch, step, 'loss:', loss.numpy())

def train():

for epoch in range(30):

train_epoch(epoch)

if __name__ == '__main__':

train()

希望能有人给下建议或解决方法,拜谢!

请问这个问题解决了吗

将 这句 gpu_options = tf.GPUOptions(per_process_gpu_memory_fraction=0.333)

改成

tf.config.gpu.set_per_process_memory_fraction(0.333)