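Why does this code only scrape the first nine entries on this page, and how should I change it to get all of the data? If possible, I would also like to visualize the data.

I have been stuck on this for a long time and it is really frustrating ╯﹏╰

Page: https://www.meishij.net/china-food/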

import requests
import csv
from lxml import etree

def get_html(page):
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.51'
    }
    response = requests.get('https://www.meishij.net/china-food/', headers=headers)
    return response.text

def parse_html(content):
    html = etree.HTML(content)
    names = html.xpath('//*[@id="listtyle1_list"]/div/a/div/div/div[1]/strong/text()')
    # //*[@id="listtyle1_list"]/div[1]/a/div/div/div[1]/strong
    # //*[@id="listtyle1_list"]/div[11]/a/div/div/div[1]/strong
    # //*[@id="listtyle1_list"]/div[17]/a/div/div/div[1]/strong
    views = html.xpath('//*[@id="listtyle1_list"]/div/a/div/div/div[1]/span/text()')
    names1 = html.xpath('//*[@id="listtyle1_list"]/div/a/div/div/div[1]/em/text()')
    gongneng = html.xpath('//*[@id="listtyle1_list"]/div/a/strong/span/text()')
    images = html.xpath('//*[@id="listtyle1_list"]/div/a/img/@src')
    return zip(names, images, views, names1, gongneng)

def save_data(foods):
    with open('foods.csv', mode='a', encoding='utf-8-sig', newline='') as stream:
        writer = csv.writer(stream)
        writer.writerow(['Dish name', 'Popularity', 'Author', 'Function', 'Image'])
        for name, image, view, name1, gongneng in foods:
            writer.writerow([name, view, name1, gongneng, image])

if __name__ == '__main__':
    for i in range(3):
        page = i * 18
        content = get_html(page)
        foods = parse_html(content)
        save_data(foods)
        print(f"Page {i + 1} saved successfully!")

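This one is obvious: the loop in your main entry point fetches the same URL on every iteration, because get_html never uses its page argument. Every pass therefore scrapes the same page of data.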
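As a rough sketch of the fix described above, the URL can be built from the page number so that each iteration requests a different page. Note that the `page` query parameter and the `build_page_url` helper are assumptions made for illustration, not something confirmed for this site: open page 2 in a browser and copy the real URL pattern before relying on it.

```python
from urllib.parse import urlencode

BASE_URL = 'https://www.meishij.net/china-food/'


def build_page_url(page_number, base_url=BASE_URL, param='page'):
    """Return the listing URL for a 1-based page number.

    ASSUMPTION: the site selects a page through a query parameter named
    `page`. This is hypothetical; check the real URL in a browser.
    """
    if page_number <= 1:
        # The first page is the plain listing URL.
        return base_url
    return f"{base_url}?{urlencode({param: page_number})}"


if __name__ == '__main__':
    # Each iteration now produces a different URL.
    for i in range(3):
        print(build_page_url(i + 1))
```

Inside get_html, the fixed address passed to requests.get would then be replaced by something like build_page_url(page), with the main loop passing i + 1 rather than i * 18.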

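Not every item has the 功能 (function) field, which is `gongneng` in your code. zip() stops at the shortest of the lists it is given, so you only got 10 rows, which is exactly the number of items that have a function field. Drop that field from the zip and you will get every item; fetch the function field some other way.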
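One possible way to keep the optional field without losing rows is to loop over each item node and query it with relative XPaths, falling back to an empty string when a field is missing. The sketch below is only an illustration: the SAMPLE markup is a hypothetical, simplified stand-in for the real page, and `first_text` and `parse_items` are made-up helper names.

```python
from lxml import etree

# Hypothetical, simplified markup: the second item has no function label.
SAMPLE = '''
<div id="listtyle1_list">
<div><a><img src="a.jpg"/><strong><span>tonic</span></strong><div><div><div><strong>Dish A</strong><span>100 views</span><em>Alice</em></div></div></div></a></div>
<div><a><img src="b.jpg"/><div><div><div><strong>Dish B</strong><span>50 views</span><em>Bob</em></div></div></div></a></div>
</div>
'''


def first_text(node, path):
    """Return the first XPath match as stripped text, or '' if none."""
    result = node.xpath(path)
    return result[0].strip() if result else ''


def parse_items(content):
    """Build one tuple per item so a missing field cannot drop a row."""
    html = etree.HTML(content)
    rows = []
    for item in html.xpath('//*[@id="listtyle1_list"]/div'):
        rows.append((
            first_text(item, './a/div/div/div[1]/strong/text()'),  # name
            first_text(item, './a/img/@src'),                      # image
            first_text(item, './a/div/div/div[1]/span/text()'),    # views
            first_text(item, './a/div/div/div[1]/em/text()'),      # author
            first_text(item, './a/strong/span/text()'),            # function
        ))
    return rows


if __name__ == '__main__':
    for row in parse_items(SAMPLE):
        print(row)
```

The tuples use the same field order as the original zip (name, image, views, author, function), so the result can be passed to save_data unchanged.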

不知道你这个问题是否已经解决, 如果还没有解决的话:- 这篇博客: 操作系统习题中的 五、存储器管理-分页存储方式:计算物理地址和有效访问时间(EAT) 部分也许能够解决你的问题, 你可以仔细阅读以下内容或者直接跳转源博客中阅读:

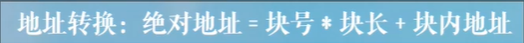

5.1计算物理地址:

在采用页式储存管理的系统中,某进程的逻辑地址空间为4页(每页2048字节)。已知该进程的页面映像表(页表)如下,计算有效逻辑地址4865所对应的物理地址。

求页号:d=4865%2048=2…769 (2为页号,769为块内地址)对页表:即块号为6

计算地址: Physical address=6*2048+769=13057

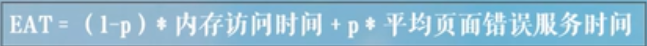

5.2计算有效访问时间(EAT)

p是页面错误率,命中率=1-p。

如果你已经解决了该问题, 非常希望你能够分享一下解决方案, 写成博客, 将相关链接放在评论区, 以帮助更多的人 ^-^