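YOLOv5 + PyQt5 + OpenCV bounty post (paid)

This is a question about YOLOv5, PyQt5, and OpenCV (it may require experience debugging PyQt5 video streams, using YOLOv5, and working with OpenCV).

My requirement: while a video is being detected, I want a detection region drawn with a red line (a rectangle that stays visible for the whole playback). When a pedestrian enters the region, the pedestrian should be marked with a red box and a voice alarm should play, for example: "Danger zone, please leave as soon as possible!"

Below is my code. (Running it brings up a PyQt interface; for the actual effect, see this video: https://www.bilibili.com/video/BV16v411H731/?spm_id_from=333.788&vd_source=47f93dc32070e09251696ed31473b3cc)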

# -*- coding: utf-8 -*-

# Form implementation generated from reading ui file 'project.ui'
#
# Created by: PyQt5 UI code generator 5.15.9
#
# WARNING: Any manual changes made to this file will be lost when pyuic5 is
# run again. Do not edit this file unless you know what you are doing.

import sys
import cv2
import argparse
import random
import torch
import numpy as np
import torch.backends.cudnn as cudnn

from PyQt5 import QtCore, QtGui, QtWidgets
from utils.torch_utils import select_device
from models.experimental import attempt_load
from utils.general import check_img_size, non_max_suppression, scale_coords
from utils.datasets import letterbox
from utils.plots2 import plot_one_box

class Ui_MainWindow(QtWidgets.QMainWindow):
    def __init__(self, parent=None):
        super(Ui_MainWindow, self).__init__(parent)
        self.timer_video = QtCore.QTimer()
        self.setupUi(self)
        # self.init_logo()
        self.init_slots()
        self.cap = cv2.VideoCapture()
        self.out = None
        # self.out = cv2.VideoWriter('prediction.avi', cv2.VideoWriter_fourcc(*'XVID'), 20.0, (640, 480))

        parser = argparse.ArgumentParser()
        parser.add_argument('--weights', nargs='+', type=str,
                            default='weights/best.pt', help='model.pt path(s)')
        # file/folder, 0 for webcam
        parser.add_argument('--source', type=str,
                            default='data/images', help='source')
        parser.add_argument('--img-size', type=int,
                            default=640, help='inference size (pixels)')
        parser.add_argument('--conf-thres', type=float,
                            default=0.25, help='object confidence threshold')
        parser.add_argument('--iou-thres', type=float,
                            default=0.45, help='IOU threshold for NMS')
        parser.add_argument('--device', default='',
                            help='cuda device, i.e. 0 or 0,1,2,3 or cpu')
        parser.add_argument(
            '--view-img', action='store_true', help='display results')
        parser.add_argument('--save-txt', action='store_true',
                            help='save results to *.txt')
        parser.add_argument('--save-conf', action='store_true',
                            help='save confidences in --save-txt labels')
        parser.add_argument('--nosave', action='store_true',
                            help='do not save images/videos')
        parser.add_argument('--classes', nargs='+', type=int,
                            help='filter by class: --class 0, or --class 0 2 3')
        parser.add_argument(
            '--agnostic-nms', action='store_true', help='class-agnostic NMS')
        parser.add_argument('--augment', action='store_true',
                            help='augmented inference')
        parser.add_argument('--update', action='store_true',
                            help='update all models')
        parser.add_argument('--project', default='runs/detect',
                            help='save results to project/name')
        parser.add_argument('--name', default='exp',
                            help='save results to project/name')
        parser.add_argument('--exist-ok', action='store_true',
                            help='existing project/name ok, do not increment')
        self.opt = parser.parse_args()
        print(self.opt)

        source, weights, view_img, save_txt, imgsz = self.opt.source, self.opt.weights, self.opt.view_img, self.opt.save_txt, self.opt.img_size

        self.device = select_device(self.opt.device)
        self.half = self.device.type != 'cpu'  # half precision only supported on CUDA

        cudnn.benchmark = True

        # Load model
        self.model = attempt_load(
            weights, self.device)  # load FP32 model
        stride = int(self.model.stride.max())  # model stride
        self.imgsz = check_img_size(imgsz, s=stride)  # check img_size
        if self.half:
            self.model.half()  # to FP16

        # Get names and colors
        self.names = self.model.module.names if hasattr(
            self.model, 'module') else self.model.names
        self.colors = [[random.randint(0, 255)
                        for _ in range(3)] for _ in self.names]

    def setupUi(self, MainWindow):
        MainWindow.setObjectName("MainWindow")
        MainWindow.resize(800, 600)
        self.centralwidget = QtWidgets.QWidget(MainWindow)
        self.centralwidget.setObjectName("centralwidget")
        self.horizontalLayout_2 = QtWidgets.QHBoxLayout(self.centralwidget)
        self.horizontalLayout_2.setObjectName("horizontalLayout_2")
        self.horizontalLayout = QtWidgets.QHBoxLayout()
        self.horizontalLayout.setSizeConstraint(QtWidgets.QLayout.SetNoConstraint)
        self.horizontalLayout.setObjectName("horizontalLayout")
        self.verticalLayout = QtWidgets.QVBoxLayout()
        self.verticalLayout.setContentsMargins(-1, -1, 0, -1)
        self.verticalLayout.setSpacing(80)
        self.verticalLayout.setObjectName("verticalLayout")
        self.pushButton_img = QtWidgets.QPushButton(self.centralwidget)
        sizePolicy = QtWidgets.QSizePolicy(QtWidgets.QSizePolicy.Minimum, QtWidgets.QSizePolicy.MinimumExpanding)
        sizePolicy.setHorizontalStretch(0)
        sizePolicy.setVerticalStretch(0)
        sizePolicy.setHeightForWidth(self.pushButton_img.sizePolicy().hasHeightForWidth())
        self.pushButton_img.setSizePolicy(sizePolicy)
        self.pushButton_img.setMinimumSize(QtCore.QSize(150, 100))
        self.pushButton_img.setMaximumSize(QtCore.QSize(150, 100))
        font = QtGui.QFont()
        font.setFamily("Agency FB")
        font.setPointSize(12)
        self.pushButton_img.setFont(font)
        self.pushButton_img.setObjectName("pushButton_img")
        self.verticalLayout.addWidget(self.pushButton_img, 0, QtCore.Qt.AlignHCenter)
        self.pushButton_camera = QtWidgets.QPushButton(self.centralwidget)
        sizePolicy = QtWidgets.QSizePolicy(QtWidgets.QSizePolicy.Minimum, QtWidgets.QSizePolicy.Expanding)
        sizePolicy.setHorizontalStretch(0)
        sizePolicy.setVerticalStretch(0)
        sizePolicy.setHeightForWidth(self.pushButton_camera.sizePolicy().hasHeightForWidth())
        self.pushButton_camera.setSizePolicy(sizePolicy)
        self.pushButton_camera.setMinimumSize(QtCore.QSize(150, 100))
        self.pushButton_camera.setMaximumSize(QtCore.QSize(150, 100))
        font = QtGui.QFont()
        font.setFamily("Agency FB")
        font.setPointSize(12)
        self.pushButton_camera.setFont(font)
        self.pushButton_camera.setObjectName("pushButton_camera")
        self.verticalLayout.addWidget(self.pushButton_camera, 0, QtCore.Qt.AlignHCenter)
        self.pushButton_video = QtWidgets.QPushButton(self.centralwidget)
        sizePolicy = QtWidgets.QSizePolicy(QtWidgets.QSizePolicy.Minimum, QtWidgets.QSizePolicy.Expanding)
        sizePolicy.setHorizontalStretch(0)
        sizePolicy.setVerticalStretch(0)
        sizePolicy.setHeightForWidth(self.pushButton_video.sizePolicy().hasHeightForWidth())
        self.pushButton_video.setSizePolicy(sizePolicy)
        self.pushButton_video.setMinimumSize(QtCore.QSize(150, 100))
        self.pushButton_video.setMaximumSize(QtCore.QSize(150, 100))
        font = QtGui.QFont()
        font.setFamily("Agency FB")
        font.setPointSize(12)
        self.pushButton_video.setFont(font)
        self.pushButton_video.setObjectName("pushButton_video")
        self.verticalLayout.addWidget(self.pushButton_video, 0, QtCore.Qt.AlignHCenter)
        self.verticalLayout.setStretch(2, 1)
        self.horizontalLayout.addLayout(self.verticalLayout)
        self.label = QtWidgets.QLabel(self.centralwidget)
        self.label.setObjectName("label")
        self.horizontalLayout.addWidget(self.label)
        self.horizontalLayout.setStretch(0, 1)
        self.horizontalLayout.setStretch(1, 3)
        self.horizontalLayout_2.addLayout(self.horizontalLayout)
        MainWindow.setCentralWidget(self.centralwidget)
        self.menubar = QtWidgets.QMenuBar(MainWindow)
        self.menubar.setGeometry(QtCore.QRect(0, 0, 800, 23))
        self.menubar.setObjectName("menubar")
        MainWindow.setMenuBar(self.menubar)
        self.statusbar = QtWidgets.QStatusBar(MainWindow)
        self.statusbar.setObjectName("statusbar")
        MainWindow.setStatusBar(self.statusbar)

        self.retranslateUi(MainWindow)
        QtCore.QMetaObject.connectSlotsByName(MainWindow)

    def retranslateUi(self, MainWindow):
        _translate = QtCore.QCoreApplication.translate
        MainWindow.setWindowTitle(_translate("MainWindow", "Region Intrusion Video Detection"))
        self.pushButton_img.setText(_translate("MainWindow", "Image Detection"))
        self.pushButton_camera.setText(_translate("MainWindow", "Camera Detection"))
        self.pushButton_video.setText(_translate("MainWindow", "Video Detection"))
        self.label.setText(_translate("MainWindow", ""))

    def init_slots(self):
        self.pushButton_img.clicked.connect(self.button_image_open)
        self.pushButton_video.clicked.connect(self.button_video_open)
        self.pushButton_camera.clicked.connect(self.button_camera_open)
        self.timer_video.timeout.connect(self.show_video_frame)

    # def init_logo(self):
    #     pix = QtGui.QPixmap('wechat.jpg')
    #     self.label.setScaledContents(True)
    #     self.label.setPixmap(pix)

    def button_image_open(self):
        print('button_image_open')
        name_list = []

        img_name, _ = QtWidgets.QFileDialog.getOpenFileName(
            self, "Open image", "", "*.jpg;;*.png;;All Files(*)")
        if not img_name:
            return

        img = cv2.imread(img_name)
        print(img_name)
        showimg = img
        with torch.no_grad():
            img = letterbox(img, new_shape=self.opt.img_size)[0]
            # Convert
            # BGR to RGB, to 3x416x416
            img = img[:, :, ::-1].transpose(2, 0, 1)
            img = np.ascontiguousarray(img)
            img = torch.from_numpy(img).to(self.device)
            img = img.half() if self.half else img.float()  # uint8 to fp16/32
            img /= 255.0  # 0 - 255 to 0.0 - 1.0
            if img.ndimension() == 3:
                img = img.unsqueeze(0)
            # Inference
            pred = self.model(img, augment=self.opt.augment)[0]
            # Apply NMS
            pred = non_max_suppression(pred, self.opt.conf_thres, self.opt.iou_thres, classes=self.opt.classes,
                                       agnostic=self.opt.agnostic_nms)
            print(pred)
            # Process detections
            for i, det in enumerate(pred):
                if det is not None and len(det):
                    # Rescale boxes from img_size to im0 size
                    det[:, :4] = scale_coords(
                        img.shape[2:], det[:, :4], showimg.shape).round()

                    for *xyxy, conf, cls in reversed(det):
                        label = '%s %.2f' % (self.names[int(cls)], conf)
                        name_list.append(self.names[int(cls)])
                        plot_one_box(xyxy, showimg, label=label,
                                     color=self.colors[int(cls)], line_thickness=2)

        cv2.imwrite('prediction.jpg', showimg)
        self.result = cv2.cvtColor(showimg, cv2.COLOR_BGR2BGRA)
        self.result = cv2.resize(
            self.result, (640, 480), interpolation=cv2.INTER_AREA)
        self.QtImg = QtGui.QImage(
            self.result.data, self.result.shape[1], self.result.shape[0], QtGui.QImage.Format_RGB32)
        self.label.setPixmap(QtGui.QPixmap.fromImage(self.QtImg))

    def button_video_open(self):
        video_name, _ = QtWidgets.QFileDialog.getOpenFileName(
            self, "Open video", "", "*.mp4;;*.avi;;All Files(*)")

        if not video_name:
            return

        flag = self.cap.open(video_name)
        if not flag:
            QtWidgets.QMessageBox.warning(
                self, u"Warning", u"Failed to open video", buttons=QtWidgets.QMessageBox.Ok, defaultButton=QtWidgets.QMessageBox.Ok)
        else:
            self.out = cv2.VideoWriter('prediction.avi', cv2.VideoWriter_fourcc(
                *'MJPG'), 20, (int(self.cap.get(3)), int(self.cap.get(4))))
            self.timer_video.start(30)
            self.pushButton_video.setDisabled(True)
            self.pushButton_img.setDisabled(True)
            self.pushButton_camera.setDisabled(True)

    def button_camera_open(self):
        if not self.timer_video.isActive():
            # Use the first local camera by default
            flag = self.cap.open(0)
            if not flag:
                QtWidgets.QMessageBox.warning(
                    self, u"Warning", u"Failed to open camera", buttons=QtWidgets.QMessageBox.Ok, defaultButton=QtWidgets.QMessageBox.Ok)
            else:
                self.out = cv2.VideoWriter('prediction.avi', cv2.VideoWriter_fourcc(
                    *'MJPG'), 20, (int(self.cap.get(3)), int(self.cap.get(4))))
                self.timer_video.start(30)
                self.pushButton_video.setDisabled(True)
                self.pushButton_img.setDisabled(True)
                self.pushButton_camera.setText(u"Close camera")
        else:
            self.timer_video.stop()
            self.cap.release()
            self.out.release()
            self.label.clear()
            # self.init_logo()  # init_logo is commented out in this class; calling it would raise AttributeError
            self.pushButton_video.setDisabled(False)
            self.pushButton_img.setDisabled(False)
            self.pushButton_camera.setText(u"Camera Detection")

    def show_video_frame(self):
        name_list = []

        flag, img = self.cap.read()
        if img is not None:
            showimg = img
            with torch.no_grad():
                img = letterbox(img, new_shape=self.opt.img_size)[0]
                # Convert
                # BGR to RGB, to 3x416x416
                img = img[:, :, ::-1].transpose(2, 0, 1)
                img = np.ascontiguousarray(img)
                img = torch.from_numpy(img).to(self.device)
                img = img.half() if self.half else img.float()  # uint8 to fp16/32
                img /= 255.0  # 0 - 255 to 0.0 - 1.0
                if img.ndimension() == 3:
                    img = img.unsqueeze(0)
                # Inference
                pred = self.model(img, augment=self.opt.augment)[0]
                # Apply NMS
                pred = non_max_suppression(pred, self.opt.conf_thres, self.opt.iou_thres, classes=self.opt.classes,
                                           agnostic=self.opt.agnostic_nms)
                # Process detections
                for i, det in enumerate(pred):  # detections per image
                    if det is not None and len(det):
                        # Rescale boxes from img_size to im0 size
                        det[:, :4] = scale_coords(
                            img.shape[2:], det[:, :4], showimg.shape).round()
                        # Write results
                        for *xyxy, conf, cls in reversed(det):
                            label = '%s %.2f' % (self.names[int(cls)], conf)
                            name_list.append(self.names[int(cls)])
                            print(label)
                            plot_one_box(
                                xyxy, showimg, label=label, color=self.colors[int(cls)], line_thickness=2)

            self.out.write(showimg)
            show = cv2.resize(showimg, (640, 480))
            self.result = cv2.cvtColor(show, cv2.COLOR_BGR2RGB)
            showImage = QtGui.QImage(self.result.data, self.result.shape[1], self.result.shape[0],
                                     QtGui.QImage.Format_RGB888)
            self.label.setPixmap(QtGui.QPixmap.fromImage(showImage))

        else:
            self.timer_video.stop()
            self.cap.release()
            self.out.release()
            self.label.clear()
            self.pushButton_video.setDisabled(False)
            self.pushButton_img.setDisabled(False)
            self.pushButton_camera.setDisabled(False)
            # self.init_logo()  # init_logo is commented out in this class; calling it would raise AttributeError

if __name__ == '__main__':
    app = QtWidgets.QApplication(sys.argv)
    ui = Ui_MainWindow()
    ui.show()
    sys.exit(app.exec_())

- I found a very good blog post for you; see whether it helps. Link: "yolov5 + pyqt5 Mask Recognition System in Practice (yolov5 and pyqt5 quick start, course project)"

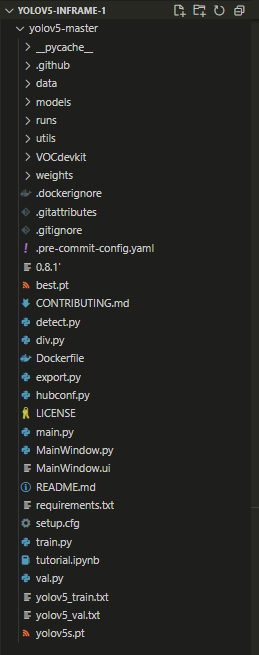

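- In addition, the section "1. Project framework" of the blog post "Completing YOLOv5 Object Detection from Scratch (3): Displaying YOLOv5 Detection Results with PyQt5" may also solve your problem. You can read the content below carefully or go to the source blog:

main.py and MainWindow.py are the PyQt5 functional files.

The following content is partly based on the ChatGPT model:

The asker needs a detection region added during video detection; when a pedestrian enters that region, the pedestrian should be marked with a red box and a sound alarm should play. One way to do this is to add a rectangle item to the UI to represent the detection region, then check on each video frame whether a detected pedestrian lies inside the rectangle and act accordingly.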
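The region check described above can be sketched in plain Python. This is a minimal sketch, assuming detections arrive as `(x1, y1, x2, y2)` pixel boxes and the region is an axis-aligned rectangle in the same frame coordinates. The helper names `foot_point`, `in_region`, and `intruders` are hypothetical, and using the bottom-center of the box as the pedestrian's position is one possible choice, not the only one.

```python
def foot_point(box):
    """Return the bottom-center point of an (x1, y1, x2, y2) box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, y2)


def in_region(point, region):
    """True if point (x, y) lies inside region (rx1, ry1, rx2, ry2), edges included."""
    x, y = point
    rx1, ry1, rx2, ry2 = region
    return rx1 <= x <= rx2 and ry1 <= y <= ry2


def intruders(boxes, region):
    """Return the boxes whose foot point falls inside the region."""
    return [b for b in boxes if in_region(foot_point(b), region)]


# Example values chosen for illustration only.
region = (100, 100, 300, 300)
boxes = [(120, 50, 180, 250), (400, 100, 460, 280)]
print(intruders(boxes, region))
```

In `show_video_frame`, the boxes would come from `xyxy` after `scale_coords`, and any box returned here could be drawn in red instead of its class color.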

First, add a rectangle item to the UI; Qt's QGraphicsRectItem class can be used for this:

from PyQt5.QtGui import QPen, QColor
from PyQt5.QtWidgets import QGraphicsRectItem

class DetectionArea(QGraphicsRectItem):
    def __init__(self, rect, parent=None):
        super(DetectionArea,

------------------------------------------------------------------------

### If my suggestion helped, please click Accept. Have a nice day.

https://blog.csdn.net/vleess/article/details/103305559

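The link above has ready-made code for drawing the box. What remains is to compute the IoU between each detection's xyxy and the box you drew. The alarm may need win32api, and for writing text on the frame you can try cv2.putText.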
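The overlap computation suggested here could look like the following. This is a sketch under the assumption that both the detection and the drawn region are `(x1, y1, x2, y2)` tuples in the same pixel coordinates; the function names are hypothetical. It computes the plain IoU and also the fraction of the detection box that lies inside the region, since IoU tends to stay small when the region is much larger than a person.

```python
def area(r):
    """Area of an (x1, y1, x2, y2) rectangle; 0 for degenerate rectangles."""
    return max(0, r[2] - r[0]) * max(0, r[3] - r[1])


def intersection_area(a, b):
    """Area of the overlap between rectangles a and b."""
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return max(0, w) * max(0, h)


def iou(a, b):
    """Intersection over union of two rectangles."""
    inter = intersection_area(a, b)
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def fraction_inside(box, region):
    """Share of the detection box that lies inside the region (0.0 to 1.0)."""
    a = area(box)
    return intersection_area(box, region) / a if a > 0 else 0.0


region = (0, 0, 100, 100)
box = (50, 50, 150, 150)
print(iou(box, region), fraction_inside(box, region))
```

Which measure to threshold, and at what value, depends on the camera angle and the size of the region; both would need tuning on real footage.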

- PyQt5 part: this code mainly displays the video and creates and draws the rectangular region

import sys
import cv2
from PyQt5 import QtWidgets, QtGui, QtCore


class VideoWindow(QtWidgets.QMainWindow):
    def __init__(self):
        super().__init__()
        self.label = QtWidgets.QLabel(self)
        self.setCentralWidget(self.label)
        # Initialize the video source
        self.cap = cv2.VideoCapture(0)
        self.timer = QtCore.QTimer(self)
        self.timer.timeout.connect(self.update_frame)
        self.timer.start(30)
        # Rectangular region to draw
        self.rect = QtCore.QRect(0, 0, 100, 100)
        self.pen = QtGui.QPen(QtGui.QColor(255, 0, 0), 3, QtCore.Qt.SolidLine)

    def update_frame(self):
        ret, frame = self.cap.read()
        if ret:
            # Pass the row stride so frames whose row size is not a multiple of 4 bytes are not skewed
            img = QtGui.QImage(frame.data, frame.shape[1], frame.shape[0], frame.strides[0],
                               QtGui.QImage.Format_RGB888).rgbSwapped()
            pixmap = QtGui.QPixmap.fromImage(img)
            painter = QtGui.QPainter(pixmap)
            painter.setPen(self.pen)
            painter.drawRect(self.rect)
            painter.end()  # finish painting before handing the pixmap to the label
            self.label.setPixmap(pixmap)


if __name__ == '__main__':
    app = QtWidgets.QApplication(sys.argv)
    window = VideoWindow()
    window.show()
    sys.exit(app.exec())

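- YOLOv5 part: this code mainly performs pedestrian detection and draws the boxes

import cv2
import torch
from yolov5 import YOLOv5


class ObjectDetector:
    def __init__(self, model_path):
        self.yolo = YOLOv5(model_path)

    def detect(self, img):
        # Run pedestrian detection with YOLOv5
        results = self.yolo.predict(img)
        # Draw the detection boxes on the image.
        # Assumes the `yolov5` pip package, where predict() returns a results
        # object and results.pred[0] holds rows of (x1, y1, x2, y2, conf, cls).
        for x1, y1, x2, y2, conf, cls in results.pred[0].tolist():
            cv2.rectangle(img, (int(x1), int(y1)), (int(x2), int(y2)), (0, 0, 255), 2)
        return img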
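
The detector above draws a box for every class it finds. A minimal sketch of keeping only pedestrians is shown below, assuming each detection row is `(x1, y1, x2, y2, conf, cls)` and that class id 0 means "person" (true for COCO-trained weights, but an assumption for custom weights such as `weights/best.pt`). The name `person_boxes` and the constant `PERSON_CLASS_ID` are hypothetical.

```python
PERSON_CLASS_ID = 0  # assumption: class 0 is "person", as in COCO-trained weights


def person_boxes(rows, conf_thres=0.25, person_id=PERSON_CLASS_ID):
    """Keep person detections at or above conf_thres and return integer pixel boxes."""
    boxes = []
    for x1, y1, x2, y2, conf, cls in rows:
        if int(cls) == person_id and conf >= conf_thres:
            # cv2 drawing functions expect integer pixel coordinates
            boxes.append((int(x1), int(y1), int(x2), int(y2)))
    return boxes


# Example rows chosen for illustration only.
rows = [
    (10.6, 20.2, 50.9, 80.0, 0.90, 0.0),  # confident person
    (1.0, 2.0, 3.0, 4.0, 0.80, 2.0),      # another class
    (5.0, 6.0, 7.0, 8.0, 0.10, 0.0),      # person below the threshold
]
print(person_boxes(rows))
```

The returned boxes can then be passed to whatever region test is used, so that other object classes never trigger the alarm.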

- Combining PyQt5 and YOLOv5 for video-stream detection and alarm; the code is as follows:

from PyQt5 import QtMultimedia  # QMediaPlayer lives in QtMultimedia, not QtCore


class VideoWindow(QtWidgets.QMainWindow):
    def __init__(self):
        super().__init__()
        self.label = QtWidgets.QLabel(self)
        self.setCentralWidget(self.label)
        # Initialize the video source
        self.cap = cv2.VideoCapture(0)
        self.timer = QtCore.QTimer(self)
        self.timer.timeout.connect(self.update_frame)
        self.timer.start(30)
        # Rectangular region to draw
        self.rect = QtCore.QRect(0, 0, 100, 100)
        self.pen = QtGui.QPen(QtGui.QColor(255, 0, 0), 3, QtCore.Qt.SolidLine)
        # Initialize the detector
        self.detector = ObjectDetector('yolov5.pt')
        # Initialize the alarm
        self.alert_player = QtMultimedia.QMediaPlayer()
        self.alert_player.setMedia(
            QtMultimedia.QMediaContent(QtCore.QUrl.fromLocalFile('alert.mp3')))

    def update_frame(self):
        ret, frame = self.cap.read()
        if ret:
            # Run pedestrian detection
            detect_frame = self.detector.detect(frame)
            # Check whether a pedestrian has entered the detection region
            x1, y1, x2, y2 = self.rect.getCoords()  # getRect() would return (x, y, w, h)
            detect_rect = detect_frame[y1:y2, x1:x2]
            # Heuristic: look for large dark blobs inside the region
            # (this does not use the YOLOv5 box coordinates directly)
            detect_gray = cv2.cvtColor(detect_rect, cv2.COLOR_BGR2GRAY)
            _, detect_binary = cv2.threshold(detect_gray, 10, 255, cv2.THRESH_BINARY_INV)
            detect_contours, _ = cv2.findContours(detect_binary, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
            for contour in detect_contours:
                x, y, w, h = cv2.boundingRect(contour)
                if w > 50 and h > 50:
                    # Play the alarm sound
                    self.alert_player.play()
                    # Draw the detection box
                    cv2.rectangle(detect_rect, (x, y), (x + w, y + h), (0, 0, 255), 2)
            # Show the video stream in the window
            img = QtGui.QImage(detect_frame.data, detect_frame.shape[1], detect_frame.shape[0],
                               detect_frame.strides[0], QtGui.QImage.Format_RGB888).rgbSwapped()
            pixmap = QtGui.QPixmap.fromImage(img)
            painter = QtGui.QPainter(pixmap)
            painter.setPen(self.pen)
            painter.drawRect(self.rect)
            painter.end()  # finish painting before handing the pixmap to the label
            self.label.setPixmap(pixmap)

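Note that the code above is only a simple example and may need further debugging and refinement. It also needs to be modified and extended to fit your actual requirements.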
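
One refinement worth considering: with a 30 ms timer, calling `play()` on every frame in which an intrusion is seen would restart or spam the alarm. A minimal sketch of a cooldown is shown below, assuming a fixed minimum interval between alarms is acceptable. The class name `AlarmGate` and its parameters are hypothetical; the clock is injectable only so the behavior can be checked without waiting.

```python
import time


class AlarmGate:
    """Allow an alarm to fire at most once every `cooldown` seconds."""

    def __init__(self, cooldown=3.0, clock=time.monotonic):
        self.cooldown = cooldown
        self.clock = clock
        self._last = None  # time of the last alarm, or None if never fired

    def should_fire(self, intrusion):
        """Return True if an intrusion is present and the cooldown has elapsed."""
        if not intrusion:
            return False
        now = self.clock()
        if self._last is None or now - self._last >= self.cooldown:
            self._last = now
            return True
        return False


# Demonstration with a fake clock so the example runs instantly.
fake_time = [0.0]
gate = AlarmGate(cooldown=3.0, clock=lambda: fake_time[0])
first = gate.should_fire(True)    # first intrusion: alarm allowed
fake_time[0] = 1.0
second = gate.should_fire(True)   # 1 s later: still cooling down
fake_time[0] = 3.0
third = gate.should_fire(True)    # 3 s after the first alarm: allowed again
print(first, second, third)
```

In `update_frame`, the alarm player's `play()` would then be called only when `should_fire(...)` returns True.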