Please reply as soon as possible, preferably on QQ. Sorry to bother you.

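This code doesn't run. (I only wrote that to fit the posting format. What I actually want to ask is: hello, do you have structure diagrams for the HorBlock and RepLKDeXt modules? I have already joined the group, but I'm short on time, so I'm asking here.)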

import torch
import torch.nn as nn


def get_dwconv(c, k=3, s=True):
    if s:
        return nn.Sequential(
            nn.Conv2d(c, c, k, stride=2, padding=k // 2, groups=c, bias=False),
            nn.BatchNorm2d(c),
            nn.Conv2d(c, c, 1, stride=1, padding=0, bias=False),
            nn.BatchNorm2d(c),
            nn.ReLU(inplace=True)
        )
    else:
        return nn.Sequential(
            nn.Conv2d(c, c, k, stride=1, padding=k // 2, groups=c, bias=False),
            nn.BatchNorm2d(c),
            nn.Conv2d(c, c, 1, stride=1, padding=0, bias=False),
            nn.BatchNorm2d(c),
            nn.ReLU(inplace=True)
        )

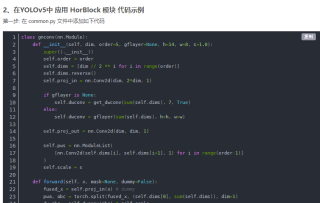

class gnconv(nn.Module):
    def __init__(self, dim, order=5, gflayer=None, h=14, w=8, s=1.0):
        super(gnconv, self).__init__()
        self.order = order
        self.dims = [dim // 2 ** i for i in range(order)]
        self.dims.reverse()
        self.proj_in = nn.Conv2d(dim, 2 * dim, 1)
        if gflayer is None:
            self.dwconv = get_dwconv(sum(self.dims), 7, True)
        else:
            self.dwconv = gflayer(sum(self.dims), h=h, w=w)
        self.proj_out = nn.Conv2d(dim, dim, 1)
        self.pws = nn.ModuleList(
            [nn.Conv2d(self.dims[i], self.dims[i + 1], 1) for i in range(order - 1)]
        )
        self.scale = s

    def forward(self, x, mask=None, dummy=False):
        fused_x = self.proj_in(x)
        pwa, abc = torch.split(fused_x, (self.dims[0], sum(self.dims)), dim=1)
        abc = self.dwconv(abc)
        abc = torch.cat(torch.split(abc, self.dims[1:], dim=1), dim=1)
        abc = self.proj_out(abc)
        x = pwa + self.scale * abc
        return x
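For anyone hitting the same error: as far as I can tell, the `forward` above fails for three reasons. The split sizes `self.dims[1:]` do not add up to the channel count of `abc`, `proj_out` expects `dim` input channels but receives `sum(self.dims)`, and the stride-2 depthwise conv halves the spatial size so `pwa` and `abc` can no longer be combined. Below is a minimal sketch of one way to make it run. It assumes a plain stride-1 depthwise conv and the recursive gating pattern (multiply, project up, multiply again) as I understand it from HorNet; the class name `GnConvSketch` is hypothetical, and this is not necessarily the blogger's version.

```python
import torch
import torch.nn as nn


class GnConvSketch(nn.Module):
    """Hypothetical name: a sketch of a recursive gated convolution."""

    def __init__(self, dim, order=5, k=7, s=1.0):
        super().__init__()
        # Assumption: dim is divisible by 2 ** (order - 1), so that
        # dims[0] + sum(dims) == 2 * dim and the split below is exact.
        self.order = order
        self.dims = [dim // 2 ** i for i in range(order)]
        self.dims.reverse()  # e.g. dim=64 -> [4, 8, 16, 32, 64]
        total = sum(self.dims)
        self.proj_in = nn.Conv2d(dim, 2 * dim, 1)
        # Stride 1 keeps the depthwise branch at the same H x W as pwa.
        self.dwconv = nn.Conv2d(total, total, k, padding=k // 2, groups=total)
        self.proj_out = nn.Conv2d(dim, dim, 1)
        self.pws = nn.ModuleList(
            [nn.Conv2d(self.dims[i], self.dims[i + 1], 1) for i in range(order - 1)]
        )
        self.scale = s

    def forward(self, x):
        fused_x = self.proj_in(x)
        pwa, abc = torch.split(fused_x, (self.dims[0], sum(self.dims)), dim=1)
        dw_abc = self.dwconv(abc) * self.scale
        # Split with the full dims list so the sizes sum to the channel count.
        dw_list = torch.split(dw_abc, self.dims, dim=1)
        x = pwa * dw_list[0]
        for i in range(self.order - 1):
            # Project up to the next width, then gate with the matching chunk.
            x = self.pws[i](x) * dw_list[i + 1]
        return self.proj_out(x)  # the last chunk has dim channels
```

With `GnConvSketch(64)` and an input of shape `(2, 64, 16, 16)`, the output keeps the input shape, so the module can be dropped into a residual block.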

Please paste the code as text instead of posting a screenshot.

Here is my modified version, based on GPT's suggestions and my own ideas. Could you check whether it works?

import torch
import torch.nn as nn


def get_dwconv(c, k=3, s=True):
    if s:
        return nn.Sequential(
            nn.Conv2d(c, c, k, stride=2, padding=k // 2, groups=c, bias=False),
            nn.BatchNorm2d(c),
            nn.Conv2d(c, c, 1, stride=1, padding=0, bias=False),
            nn.BatchNorm2d(c),
            nn.ReLU(inplace=True)
        )
    else:
        return nn.Sequential(
            nn.Conv2d(c, c, k, stride=1, padding=k // 2, groups=c, bias=False),
            nn.BatchNorm2d(c),
            nn.Conv2d(c, c, 1, stride=1, padding=0, bias=False),
            nn.BatchNorm2d(c),
            nn.ReLU(inplace=True)
        )

class gnconv(nn.Module):
    def __init__(self, dim, order=5, gflayer=None, h=14, w=8, s=1.0):
        super(gnconv, self).__init__()
        self.order = order
        self.dims = [dim // 2 ** i for i in range(order)]
        self.dims.reverse()
        self.proj_in = nn.Conv2d(dim, 2 * dim, 1)
        if gflayer is None:
            self.dwconv = get_dwconv(sum(self.dims), 7, True)
        else:
            self.dwconv = gflayer(sum(self.dims), h=h, w=w)
        self.proj_out = nn.Conv2d(dim, dim, 1)
        self.pws = nn.ModuleList(
            [nn.Conv2d(self.dims[i], self.dims[i + 1], 1) for i in range(order - 1)]
        )
        self.scale = s

    def forward(self, x, mask=None, dummy=False):
        fused_x = self.proj_in(x)  # dummy
        pwa, abc = torch.split(fused_x, (self.dims[0], sum(self.dims)), dim=1)
        return pwa, abc
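One thing worth checking with this version: the `torch.split` call only succeeds when `self.dims[0] + sum(self.dims)` equals the `2 * dim` channels produced by `proj_in`. Because `dims` is built with integer division, that holds only when `dim` is divisible by `2 ** (order - 1)`. Here is a small sketch of the arithmetic, with no PyTorch needed; the helper name `gnconv_split_sizes` is made up for illustration.

```python
def gnconv_split_sizes(dim, order=5):
    """Reproduce the channel bookkeeping from gnconv.__init__ and forward."""
    dims = [dim // 2 ** i for i in range(order)]
    dims.reverse()
    # These are the two sizes passed to torch.split in forward.
    return dims, (dims[0], sum(dims))


dims, sizes = gnconv_split_sizes(64)
print(dims)                   # [4, 8, 16, 32, 64]
print(sizes)                  # (4, 124)
print(sum(sizes) == 2 * 64)   # True: the split matches proj_in's output

# With dim=60 the integer division loses channels, so the sizes no
# longer add up to 2 * dim and torch.split would raise an error.
_, bad_sizes = gnconv_split_sizes(60)
print(sum(bad_sizes) == 2 * 60)  # False
```

So if the modified `forward` still raises on the split, the channel count fed into the module is the first thing to look at.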