Python crawler: the scraped data is wrong. How do I fix it?

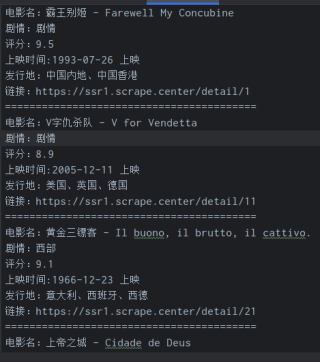

My Python crawler returns wrong data: it only extracts the first item on each page.

```python
import requests
from lxml import etree


def get_data(page):
    header = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML,'
                            ' like Gecko) Chrome/109.0.0.0 Safari/537.36'}
    res = requests.get('https://ssr1.scrape.center/page/' + str(page), headers=header)
    return res.content


def html_data(respon):
    html = etree.HTML(respon)
    titles = html.xpath('//div[2]/a/h2/text()')
    liebies = html.xpath('//div/div/div[2]/div[1]/button/span/text()')
    pingfens = html.xpath('//div/div/div[3]/p[1]/text()')
    shijians = html.xpath('//div/div[2]/div[3]/span/text()')
    lianjies = html.xpath('//div/div/div[2]/a/@href')
    chandis = html.xpath('//div/div/div[2]/div[2]/*/text()')
    for title, liebie, pingfen, shijian, lianjie, \
            chandi in zip(titles, liebies, pingfens, shijians, lianjies, chandis):
        pingfen = pingfen.strip()
        return f'电影名:{title}\n剧情:{liebie}\n评分:{pingfen}\n上映时间:{shijian}\n发行地:{chandi}\n链接:https://ssr1.scrape.center{lianjie}\n=========================================\n'


def save_data(foods):
    f = open('foods.txt', 'a', encoding='utf-8')
    f.write(str(foods))


if __name__ == '__main__':
    for i in range(1, 11):
        page = i
        respon = get_data(page)
        foods = html_data(respon)
        html_data(respon)
        save_data(foods)
        print(f'---正在保存第{i}页---')
```

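The problem is that the `return` statement in `html_data()` sits inside the `for` loop, so the function returns during the first iteration and only the first movie's information comes back. Move the `return` out of the loop and build up the text for every movie before returning it, so that the whole page's information is returned.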
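To see the difference in isolation, here is a minimal, network-free sketch. The `first_only` and `all_items` functions and the sample list are hypothetical, for illustration only: a `return` inside a loop leaves the function on the first iteration, while accumulating first and returning after the loop keeps every item.

```python
def first_only(items):
    # return inside the loop: the function exits during the first iteration
    for item in items:
        return f'{item}\n'


def all_items(items):
    # accumulate every entry, then return once the loop has finished
    result = ''
    for item in items:
        result += f'{item}\n'
    return result


if __name__ == '__main__':
    movies = ['movie A', 'movie B', 'movie C']  # hypothetical sample data
    print(repr(first_only(movies)))  # 'movie A\n'
    print(repr(all_items(movies)))   # 'movie A\nmovie B\nmovie C\n'
```

Note also that with an empty list `first_only` never reaches its `return` and gives back `None`.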

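Also, `html_data()` already returns a string, not a dict, so the `str()` call in `save_data()` is unnecessary; pass the string to `f.write()` directly.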

```python
import requests
from lxml import etree


def get_data(page):
    header = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML,'
                            ' like Gecko) Chrome/109.0.0.0 Safari/537.36'}
    res = requests.get('https://ssr1.scrape.center/page/' + str(page), headers=header)
    return res.content


def html_data(respon):
    html = etree.HTML(respon)
    titles = html.xpath('//div[2]/a/h2/text()')
    liebies = html.xpath('//div/div/div[2]/div[1]/button/span/text()')
    pingfens = html.xpath('//div/div/div[3]/p[1]/text()')
    shijians = html.xpath('//div/div[2]/div[3]/span/text()')
    lianjies = html.xpath('//div/div/div[2]/a/@href')
    chandis = html.xpath('//div/div/div[2]/div[2]/*/text()')
    result = ''
    for title, liebie, pingfen, shijian, lianjie, chandi in zip(titles, liebies, pingfens, shijians, lianjies, chandis):
        pingfen = pingfen.strip()
        result += f'电影名:{title}\n剧情:{liebie}\n评分:{pingfen}\n上映时间:{shijian}\n发行地:{chandi}\n链接:https://ssr1.scrape.center{lianjie}\n=========================================\n'
    # return only after the loop has processed every movie on the page
    return result


def save_data(foods):
    # 'with' makes sure the file is closed after writing
    with open('foods.txt', 'a', encoding='utf-8') as f:
        f.write(foods)


if __name__ == '__main__':
    for i in range(1, 11):
        respon = get_data(i)
        foods = html_data(respon)
        save_data(foods)
        print(f'---正在保存第{i}页---')
```

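In the modified code above, `html_data()` collects the movie entries in the `result` variable and returns that string after the loop ends. `save_data()` then writes the `foods` argument to the file directly, since it is already a string.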
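A slightly more idiomatic variant of the same fix, as a sketch rather than the answerer's code: collect the entries in a list and call `''.join()` once after the loop, and open the file with `with` so it is closed after each write. The `format_page` helper (taking the six lists that `html_data()` extracts), the `BASE_URL` constant, the `path` parameter on `save_data`, and the sample values in the demo are all hypothetical.

```python
BASE_URL = 'https://ssr1.scrape.center'


def format_page(titles, liebies, pingfens, shijians, lianjies, chandis):
    # Build one text block per movie, then join them once after the loop
    entries = []
    for title, liebie, pingfen, shijian, lianjie, chandi in zip(
            titles, liebies, pingfens, shijians, lianjies, chandis):
        entries.append(
            f'电影名:{title}\n剧情:{liebie}\n评分:{pingfen.strip()}\n'
            f'上映时间:{shijian}\n发行地:{chandi}\n链接:{BASE_URL}{lianjie}\n'
            '=========================================\n'
        )
    return ''.join(entries)


def save_data(text, path='foods.txt'):
    # 'with' closes the file even if write() raises; mode 'a' appends page by page
    with open(path, 'a', encoding='utf-8') as f:
        f.write(text)


if __name__ == '__main__':
    # hypothetical sample values, only to show the output format
    print(format_page(['Movie A'], ['drama'], [' 9.0 '], ['2000-01-01'],
                      ['/detail/1'], ['somewhere']))
```

In the crawler, this would be called once per page after the six `xpath()` calls, e.g. `save_data(format_page(titles, liebies, pingfens, shijians, lianjies, chandis))`.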

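You need to concatenate the 10 entries on each page; otherwise it makes no difference where you put the `return`. Alternatively, save each entry directly and don't return anything.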

```python
def html_data(respon):
    # ... same etree.HTML() parsing and xpath() calls as in the question ...
    l = ''
    for title, liebie, pingfen, shijian, lianjie, \
            chandi in zip(titles, liebies, pingfens, shijians, lianjies, chandis):
        pingfen = pingfen.strip()
        t = f'电影名:{title}\n剧情:{liebie}\n评分:{pingfen}\n上映时间:{shijian}\n发行地:{chandi}\n链接:https://ssr1.scrape.center{lianjie}\n=========================================\n'
        # append this movie's text to everything collected so far
        l = f'{l}{t}'
    return l
```

Or:

```python
def html_data(respon):
    # ... same etree.HTML() parsing and xpath() calls as in the question ...
    for title, liebie, pingfen, shijian, lianjie, \
            chandi in zip(titles, liebies, pingfens, shijians, lianjies, chandis):
        pingfen = pingfen.strip()
        foods = f'电影名:{title}\n剧情:{liebie}\n评分:{pingfen}\n上映时间:{shijian}\n发行地:{chandi}\n链接:https://ssr1.scrape.center{lianjie}\n=========================================\n'
        # write each movie as soon as it is formatted; nothing is returned
        save_data(foods)


if __name__ == '__main__':
    for i in range(1, 11):
        respon = get_data(i)
        html_data(respon)
        print(f'---正在保存第{i}页---')
```
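A related pitfall worth checking in the question's code: `zip()` pairs the six lists purely by position and silently stops at the shortest one. If one XPath matches a different number of nodes per movie than the others (for example, a movie with several category buttons), the fields shift against each other. A common alternative is to select each movie's container node first and run relative XPath queries (starting with `.`) inside it. The sketch below uses a small inline HTML string; its markup and class names (`item`, `name`, `categories`, `score`) are hypothetical stand-ins, not the real structure of ssr1.scrape.center, so the actual selectors would have to be read off the page.

```python
from lxml import etree

# Hypothetical markup, for illustration only; inspect the real page for its structure
SAMPLE = '''
<div id="list">
  <div class="item">
    <a href="/detail/1"><h2 class="name">Movie A</h2></a>
    <div class="categories"><button><span>drama</span></button><button><span>romance</span></button></div>
    <p class="score"> 9.5 </p>
  </div>
  <div class="item">
    <a href="/detail/2"><h2 class="name">Movie B</h2></a>
    <div class="categories"><button><span>comedy</span></button></div>
    <p class="score"> 8.0 </p>
  </div>
</div>
'''


def parse_items(html_text):
    html = etree.HTML(html_text)
    movies = []
    # Select one node per movie, then query relative to it with './/'
    for item in html.xpath('//div[@class="item"]'):
        movies.append({
            'title': item.xpath('.//h2[@class="name"]/text()')[0],
            # several categories are joined, so they cannot spill into the next movie
            'categories': ', '.join(item.xpath('.//div[@class="categories"]//span/text()')),
            'score': item.xpath('.//p[@class="score"]/text()')[0].strip(),
            'link': item.xpath('.//a/@href')[0],
        })
    return movies


if __name__ == '__main__':
    for movie in parse_items(SAMPLE):
        print(movie)
```

With a flat query such as `//div[@class="categories"]//span/text()` on the same sample, the list would be `['drama', 'romance', 'comedy']`, and zipping it with the two titles would pair Movie B with `romance`.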

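- Here is a similar question that may help: https://ask.csdn.net/questions/7770894

- I also found a very good blog post that may help: python中的异常(捕获及处理异常) ("Exceptions in Python: catching and handling exceptions")

- You can also look at the "concrete exceptions" section (python-具体异常) of the Python reference manual.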
