PyTorch multi-class image classification throws an error (willing to pay for help)

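I'm using PyTorch for a multi-class image classification problem. The template I found online is for binary classification, so I tried to adapt it to multi-class. Model training works, but when I get to visualizing the training results I hit an error I can't get past. Could someone take a look? I'm happy to pay.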

The current error is: RuntimeError: CUDA error: device-side assert triggered

CUDA kernel errors might be asynchronously reported at some other API call, so the stacktrace below might be incorrect.

For debugging consider passing CUDA_LAUNCH_BLOCKING=1.

The code where the error occurs:

```python
import torch
import matplotlib.pyplot as plt

# dataloaders, test_path, device, class_names, imshow, train_model, resnet50,
# criterion, optimizer and exp_lr_scheduler come from earlier notebook cells.

def visualize_model(model, num_images=6):
    was_training = model.training
    model.eval()
    images_handeled = 0
    fig = plt.figure()

    with torch.no_grad():
        for i, (inputs, labels) in enumerate(dataloaders[test_path]):
            inputs = inputs.to(device)
            labels = labels.to(device)

            outputs = model(inputs)
            _, preds = torch.max(outputs, 1)  # index of the highest-scoring class

            for j in range(inputs.size()[0]):
                images_handeled += 1
                ax = plt.subplot(num_images // 2, 2, images_handeled)
                ax.axis('off')
                ax.set_title('predicted: {}'.format(class_names[preds[j]]))
                imshow(inputs.cpu().data[j])

                if images_handeled == num_images:
                    model.train(mode=was_training)  # restore the original mode
                    return
        model.train(mode=was_training)

base_model = train_model(resnet50, criterion, optimizer, exp_lr_scheduler, num_epochs=6)
visualize_model(base_model)
plt.show()
```

The full traceback:

```
RuntimeError Traceback (most recent call last)
Input In [56], in ()
24 return
25 model.train(mode=was_training)
---> 27 base_model = train_model(resnet50, criterion, optimizer, exp_lr_scheduler, num_epochs=6)
28 visualize_model(base_model)
29 plt.show()

Input In [54], in train_model(model, criterion, optimizer, scheduler, num_epochs)
3 def train_model(model, criterion, optimizer, scheduler, num_epochs=20):
4 since = time.time()
----> 6 best_model_wts = copy.deepcopy(model.state_dict())
7 best_acc = 0.0
9 for epoch in range(num_epochs):

File ~\anaconda3\lib\copy.py:172, in deepcopy(x, memo, _nil)
170 y = x
171 else:
--> 172 y = _reconstruct(x, memo, *rv)
174 # If is its own copy, don't memoize.
175 if y is not x:

File ~\anaconda3\lib\copy.py:296, in _reconstruct(x, memo, func, args, state, listiter, dictiter, deepcopy)
294 for key, value in dictiter:
295 key = deepcopy(key, memo)
--> 296 value = deepcopy(value, memo)
297 y[key] = value
298 else:

File ~\anaconda3\lib\copy.py:153, in deepcopy(x, memo, _nil)
151 copier = getattr(x, "__deepcopy__", None)
152 if copier is not None:
--> 153 y = copier(memo)
154 else:
155 reductor = dispatch_table.get(cls)

File ~\anaconda3\lib\site-packages\torch\_tensor.py:134, in Tensor.__deepcopy__(self, memo)
125 raise RuntimeError(
126 "The default implementation of __deepcopy__() for wrapper subclasses "
127 "only works for subclass types that implement clone() and for which "
(...)
131 "different type."
132 )
133 else:
--> 134 new_storage = self.storage().__deepcopy__(memo)
135 if self.is_quantized:
136 # quantizer_params can be different type based on torch attribute
137 quantizer_params: Union[
138 Tuple[torch.qscheme, float, int],
139 Tuple[torch.qscheme, Tensor, Tensor, int],
140 ]

File ~\anaconda3\lib\site-packages\torch\storage.py:597, in TypedStorage.__deepcopy__(self, memo)
596 def __deepcopy__(self, memo):
--> 597 return self._new_wrapped_storage(copy.deepcopy(self._storage, memo))

File ~\anaconda3\lib\copy.py:153, in deepcopy(x, memo, _nil)
151 copier = getattr(x, "__deepcopy__", None)
152 if copier is not None:
--> 153 y = copier(memo)
154 else:
155 reductor = dispatch_table.get(cls)

File ~\anaconda3\lib\site-packages\torch\storage.py:97, in _StorageBase.__deepcopy__(self, memo)
95 if self._cdata in memo:
96 return memo[self._cdata]
---> 97 new_storage = self.clone()
98 memo[self._cdata] = new_storage
99 return new_storage

File ~\anaconda3\lib\site-packages\torch\storage.py:111, in _StorageBase.clone(self)
109 def clone(self):
110 """Returns a copy of this storage"""
--> 111 return type(self)(self.nbytes(), device=self.device).copy_(self)

RuntimeError: CUDA error: device-side assert triggered
CUDA kernel errors might be asynchronously reported at some other API call, so the stacktrace below might be incorrect.
For debugging consider passing CUDA_LAUNCH_BLOCKING=1.
```

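I'm a beginner and don't understand many of the error messages. I've searched online for a long time. It looks like a problem with the labels or the data, but I've tried many of the suggested fixes and none worked. I can't tell whether the model is the problem or something else. The training step before this ran without errors; the error only appears afterwards, when visualizing the training results. I'm completely lost.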

If someone can fully solve it, send me a private message to discuss the price.

Your error comes from inside `train_model`. It has nothing to do with the code you posted; execution never even gets that far.

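Judging from the error, your output layer is probably set up wrong. The original binary classifier had two outputs; for multi-class classification you also have to change the output size of the fully connected layer.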
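A minimal sketch of that change, assuming the model exposes its last layer as `.fc` the way torchvision's ResNet-50 does. `TinyNet` and `replace_classifier_head` are hypothetical names used only so the example runs without downloading pretrained weights, and `num_classes = 5` is an assumed value.

```python
import torch
import torch.nn as nn


def replace_classifier_head(model: nn.Module, num_classes: int) -> nn.Module:
    """Swap `model.fc` for a Linear layer with one output per class.

    Assumes the last layer is stored as `.fc` (true for torchvision ResNets).
    """
    in_features = model.fc.in_features              # width of the features feeding the old head
    model.fc = nn.Linear(in_features, num_classes)  # new head: one logit per class
    return model


class TinyNet(nn.Module):
    """Hypothetical stand-in for resnet50: a small backbone plus a 2-way `.fc` head."""

    def __init__(self):
        super().__init__()
        self.backbone = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 16), nn.ReLU())
        self.fc = nn.Linear(16, 2)  # head left over from the binary template

    def forward(self, x):
        return self.fc(self.backbone(x))


num_classes = 5  # assumption; in the real script use len(class_names)
model = replace_classifier_head(TinyNet(), num_classes)
logits = model(torch.randn(4, 3, 8, 8))  # batch of 4 fake 8x8 RGB images
print(logits.shape)  # torch.Size([4, 5])
```

For the real model this amounts to `resnet50.fc = nn.Linear(resnet50.fc.in_features, len(class_names))`, done before `resnet50.to(device)` and before the optimizer is created. If the template builds the optimizer from `resnet50.fc.parameters()` only (as torchvision's transfer-learning tutorial does), creating it before the swap would leave it updating the old, discarded layer.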

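It looks like you hit a runtime error while training a model in PyTorch. The message "device-side assert triggered" means an assertion failed inside code running on the GPU, which may be caused by a driver problem or a GPU hardware problem.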

To fix this, you can try the following:

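- Check whether your GPU driver is up to date, and try upgrading it.

- Set the `CUDA_LAUNCH_BLOCKING=1` environment variable when training; this makes debugging easier.

- Try resetting the GPU's memory before training the model; you can do this with the `nvidia-smi` command.

- If the GPU hardware is faulty, try restarting the machine, or check that the GPU card is installed correctly.

The "CUDA error: device-side assert triggered" error usually means an internal error occurred while running GPU code, which may be caused by insufficient memory or a bad device state.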

1. Make sure the GPU has enough memory to run the code.

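2. Try setting the `CUDA_LAUNCH_BLOCKING=1` environment variable. It makes CUDA kernel launches synchronous, so the error is reported at the call that actually caused it, which makes debugging easier.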

3. Try checking the device state before running GPU code, to make sure nothing else is wrong. For example, you can add the following lines:

```python
import torch

if not torch.cuda.is_available():
    raise Exception("CUDA is not available")

if torch.cuda.device_count() == 0:
    raise Exception("No CUDA devices available")

device = torch.device("cuda")
```

4. Try profiling the code with `torch.autograd.profiler` to find out which operations might be running out of memory.
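The profiler suggestion can be sketched as follows, using a hypothetical small model on the CPU in place of `resnet50` and a real image batch. `profile_memory=True` records memory allocated by each operator. Note that the profiler reports time and memory per operator; it does not by itself point to a failed assertion.

```python
import torch
import torch.nn as nn
from torch.autograd import profiler

# Hypothetical small model and batch standing in for resnet50 and real images
model = nn.Sequential(nn.Linear(32, 64), nn.ReLU(), nn.Linear(64, 5))
x = torch.randn(8, 32)

# Record every operator executed during one forward pass, including memory use
with profiler.profile(profile_memory=True) as prof:
    with torch.no_grad():
        model(x)

# One row per operator type, largest self-allocated CPU memory first
table = prof.key_averages().table(sort_by="self_cpu_memory_usage", row_limit=10)
print(table)
```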

Hope this helps; please accept the answer.
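On the `CUDA_LAUNCH_BLOCKING=1` suggestion: once a device-side assert fires, the CUDA context in that process stays broken, and later CUDA calls keep failing with the same message. That would explain why the traceback points at `copy.deepcopy(model.state_dict())` at the very start of `train_model` instead of at the operation that actually failed. A sketch of the usual Jupyter workflow, assuming you restart the kernel first: set the variable before `torch` is imported, then rerun the cells so the error surfaces at the real call. From a terminal, `CUDA_LAUNCH_BLOCKING=1 python train.py` does the same (`train.py` is a hypothetical script name).

```python
import os

# Run this in the first cell after restarting the kernel, before `import torch`,
# so CUDA picks the setting up when it initializes.
os.environ["CUDA_LAUNCH_BLOCKING"] = "1"
print("CUDA_LAUNCH_BLOCKING =", os.environ["CUDA_LAUNCH_BLOCKING"])

# Only now import torch and rerun the training cell, e.g.:
# import torch
# base_model = train_model(resnet50, criterion, optimizer, exp_lr_scheduler, num_epochs=6)
```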

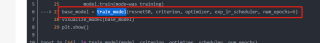

Your program fails at this step, not during visualization:

```python
base_model = train_model(resnet50, criterion, optimizer, exp_lr_scheduler, num_epochs=6)
```
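That call presumably computes the loss between the model outputs and the labels, as the usual transfer-learning template does. On the GPU, a label that is greater than or equal to the number of outputs of the last layer trips an assertion inside the loss kernel, which shows up as the unhelpful "device-side assert triggered". Running the same loss on the CPU gives a readable error instead. A sketch with random logits and hypothetical labels from a 4-class dataset; `try_loss` is an illustrative helper, not part of the original code.

```python
import torch
import torch.nn as nn


def try_loss(num_outputs: int, labels: torch.Tensor):
    """Compute CrossEntropyLoss on the CPU; return the error message if it fails, else None."""
    criterion = nn.CrossEntropyLoss()
    logits = torch.randn(len(labels), num_outputs)  # stand-in for model(inputs)
    try:
        criterion(logits, labels)
        return None
    except (IndexError, RuntimeError) as err:  # the CPU reports the offending target directly
        return str(err)


labels = torch.tensor([0, 1, 2, 3])  # hypothetical batch from a 4-class dataset
print(try_loss(2, labels))  # 2-output head from the binary template: error message
print(try_loss(4, labels))  # head sized to the number of classes: None
```

Moving one batch and the model to the CPU and repeating the forward pass and loss there is a quick way to get this clearer message from the real training code.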

The code you posted contains no information about the model you built.

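Take a look at your model. Something may have been left unchanged when you modified it; the error may be caused by the number of classes in your model not matching the labels of your classification task.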
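One way to check whether the labels and the class count match is to count the labels the dataset actually produces and verify each lies in `[0, number of classes)`. A minimal pure-Python sketch; `check_labels` and the class names are hypothetical.

```python
from collections import Counter
from typing import Iterable, Sequence


def check_labels(labels: Iterable[int], class_names: Sequence[str]) -> Counter:
    """Count each label; raise if any label is outside [0, len(class_names))."""
    num_classes = len(class_names)
    counts = Counter(int(y) for y in labels)
    bad = sorted(y for y in counts if not 0 <= y < num_classes)
    if bad:
        raise ValueError(
            f"labels {bad} are out of range for {num_classes} classes {list(class_names)}"
        )
    return counts


# Hypothetical example: three class folders and a few labels taken from the dataset
class_names = ["cat", "dog", "horse"]
print(check_labels([0, 2, 1, 2, 0], class_names))  # Counter({0: 2, 2: 2, 1: 1})

try:
    check_labels([0, 1, 3], class_names)  # label 3 does not exist for 3 classes
except ValueError as err:
    print(err)
```

With `torchvision.datasets.ImageFolder`, the labels are in `dataset.targets` and the class names in `dataset.classes`, so `check_labels(train_dataset.targets, train_dataset.classes)` (with `train_dataset` standing in for your own dataset object) would run the check. `len(class_names)` should also equal the `out_features` of the model's last layer.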
