如何使用这个python程序

代码如下

import json

import requests

from scrapy.crawler import logger

def get_rbballData(self, dayStart, dayEnd):

"""

爬虫主程序

"""

pageNo = 1

session = self._get_session()

while pageNo <= self.pageNum:

# 循环抓取数据

url = self._get_url(dayStart, dayEnd, pageNo)

res_text = self._send_request(session, url)

if res_text != None:

ret = self._parseText_toData(res_text)

pageNo = pageNo + 1

count = self._save_rbballData()

return count

def _get_url(self, dayStart, dayEnd, pageNo):

"""

构造抓取的url

"""

url = "http://www.cwl.gov.cn/cwl_admin/kjxx/findDrawNotice?name=ssq&issueCount=&issueStart=&issueEnd=&dayStart={}&dayEnd={}&pageNo={}"

url = url.format(dayStart,dayEnd,pageNo)

return url

def _get_session(self):

"""

构造session

"""

headers = {"Accept":"application/json, text/javascript, */*; q=0.01",

"User-Agent":"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.150 Safari/537.36",

"X-Requested-With":"XMLHttpRequest",

"Accept-Encoding":"gzip, deflate",

"Referer": "http://www.cwl.gov.cn/kjxx/ssq/kjgg/",

"Accept-Language":"zh-CN,zh;q=0.9"}

session = requests.Session()

session.headers.update(headers)

return session

def _send_request(self, session, url):

"""

发送请求,并返回响应文本,

return:请求成功返回文本,请求失败,返回None

"""

res = session.get(url)

if res.status_code != 200:

res = None

logger.info("请求url失败,url=[{}]".format(url))

return res.text

def _parseText_toData(self, text):

"""

获取一页双色球开奖结果,

return:获取成功返回,未成功返回-1

"""

try:

text = json.loads(text)

if text["state"] != 0:

# 查询失败

logger.info("查询返回失败,返回结果:[{}]".format(text))

text = None

return -1

except Exception as e:

logger.info("文本转换json失败,文本:【{}】".format(text))

logger.info("异常信息:{}".format(str(e)))

text = None

return -1

#text["result"]本身是个dict,需将其逐个写入rbballData

for t in text["result"]:

self.rbballData.append(t)

self.pageNum = text["pageCount"]

return 0

def _save_rbballData(self):

"""

将抓取到的数据保存到rbball_data.txt文件中

reture: 返回双色球总期数

"""

self.rbballData.reverse()

print(self.rbballData[0]["code"])

count = len(self.rbballData)

if count > 0:

with open("rbball_data.txt", 'w', encoding="utf-8") as f:

f.write(str(self.rbballData))

else:

logger.info("未获取到双色球数据!!!")

return count

我想使用这个代码

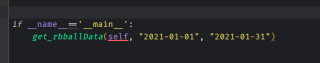

我写了个这个

if __name__=='__main__':

get_rbballData(self, "2021-01-01", "2021-01-31")

但是报错

希望可以帮上你,对你有启发

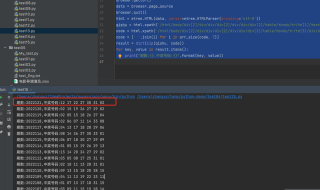

from selenium import webdriver

from lxml import etree

import numpy as np

def arr_size(arr, size):

s = []

for i in range(0, int(len(arr)) + 1, size):

c = arr[i:i + size]

s.append(c)

newlist = [x for x in s if x]

return newlist

browser = webdriver.Chrome() # 这里改成了有界面模式,方便调试代码

url = 'http://www.cwl.gov.cn/ygkj/wqkjgg/'

browser.get(url)

data = browser.page_source

browser.quit()

html = etree.HTML(data, parser=etree.HTMLParser(encoding='utf-8'))

qishu = html.xpath('/html/body/div[2]/div/div/div[2]/div/div/div[2]/table/tbody/tr/td[1]/text()')

code = html.xpath('/html/body/div[2]/div/div/div[2]/div/div/div[2]/table/tbody/tr/td[3]/div/div/text()')

code = [' '.join(i) for i in arr_size(code, 7)]

result = dict(zip(qishu, code))

for key, value in result.items():

print("期数:{},中奖号码:{}".format(key, value))

- 可以查看手册:python-程序框架 中的内容

你这段代码是复制的类里面的代码,直接是不能运行的,要么补全类的定义和类的一些初始化的属性,要么就改为普通的函数,并且补全相关的定义

目标网页都访问不啊,这个爬虫代码已经过时了