Crawler fails: the function in the middle that fetches the information is skipped without running.

Symptoms and background of the problem

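I'm scraping movie information. I've tried many approaches and still can't find where the problem is.

For some reason the loading_mv function in the middle doesn't run at all; execution jumps straight to the next step.

And now there's one big problem...

Even when I copy and run someone else's working source code, the function still doesn't run, yet no error is reported.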

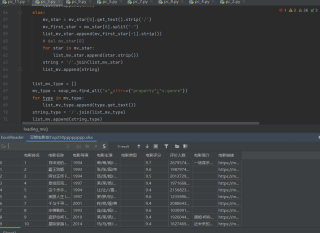

Relevant code (please do not paste screenshots)

    from base64 import encode
    from dataclasses import replace
    from pandas import DataFrame
    import requests
    from lxml import etree
    from bs4 import BeautifulSoup

    headers = {
        "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.5112.102 Safari/537.36 Edg/104.0.1293.63"
    }

    start_num = [i for i in range(0,1,25)]
    list_url_mv = []

    for start in start_num:
        url = "https://movie.douban.com/top250?start={}&filter=".format(start)
        print("正在处理url:",url)
        r = requests.get(url,headers=headers)
        soup = BeautifulSoup(r.text,"html.parser")
        url_mv_list = soup.select("#content > div > div.article > ol > li > div > div.info > div.hd > a ")
        for index_url in range(len(url_mv_list)):
            url_mv = url_mv_list[index_url]["href"]
            list_url_mv.append(url_mv)
            print(url_mv)

    def loading_mv(url,number):
        list_mv = []
        print("-正在处理第{}部电影-".format(number+1))
        list_mv.append(number+1)
        response_mv = requests.get(url=url,headers=headers)
        soup_mv = BeautifulSoup(response_mv.text,"html.parser")
        mv_name = soup_mv.find_all('span',attrs={"property":"v:itemreviewed"})
        mv_name = mv_name[0].get_text()
        list_mv.append(mv_name)
        mv_year = soup_mv.select("span.year")
        mv_year = mv_year[0].get_text()[1:5]
        list_mv.append(mv_year)
        list_mv_director = []
        mv_director = soup_mv.find_all('a',attrs={'rel':'v:directedBy'})
        for director in mv_director:
            list_mv_director.append(director.get_text())
        string_director = '/'.join(list_mv_director)
        list_mv_star = []
        mv_star = soup_mv.find_all("a",attrs={"rel":"v:starring"})
        if mv_star == []:
            list_mv.append(None)
        else:
            mv_star = mv_star[0].get_text().strip('/')
            mv_first_star = mv_star[0].split(":")
            list_mv_star.append(mv_first_star[-1].strip())
            # del mv_star[0]
            for star in mv_star:
                list_mv_star.append(star.strip())
            string = '/'.join(list_mv_star)
            list_mv.append(string)
        list_mv_type = []
        mv_type = soup_mv.find_all("a",attrs={"property":"v:genre"})
        for type in mv_type:
            list_mv_type.append(type.get_text())
        string_type = '/'.join(list_mv_type)
        list_mv.append(string_type)
        mv_score = soup_mv.select("strong.ll.rating_num")
        mv_score = mv_score[0].get_text()
        list_mv.append(mv_score)
        mv_evaluation = soup_mv.select("a.rating_people")
        mv_evaluation = mv_evaluation[0].get_text().strip()
        list_mv.append(mv_evaluation)
        mv_plot = soup_mv.find_all("span",attrs={"class":"all hidden"})
        if mv_plot == []:
            list_mv.append(None)
        else:
            string_plot = mv_plot[0].get_text().strip().split()
            new_string_plot = ' '.join(string_plot)
            list_mv.append(new_string_plot)
        list_mv.append(url)
        return list_mv

    list_all_mv = []
    dict_mv_info = {}

    for number in range(len(list_url_mv)):
        mv_info = loading_mv(list_url_mv[number],number)
        list_all_mv.append(mv_info)

    print("-运行结束-")

    pd = DataFrame(list_all_mv,columns=['电影排名','电影名称','电影导演','电影主演','电影类型','电影评分','评价人数','电影简介','电影链接'])
    pd.to_excel(r'D:\Python\Python爬虫\豆瓣电影信息爬取\豆瓣电影前Top250.xlsx')

Output and error messages

An empty spreadsheet was written.

From what I can see, the loading_mv function is not being skipped; it does run. I've fixed the code for you:

    from pandas import DataFrame
    import requests
    from bs4 import BeautifulSoup

    headers = {
        "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.5112.102 Safari/537.36 Edg/104.0.1293.63"
    }

    # range(0, 1, 25) yields only 0, so just the first listing page is fetched.
    # Use range(0, 250, 25) to walk all ten pages.
    start_num = [i for i in range(0, 1, 25)]
    list_url_mv = []

    for start in start_num:
        # .format() must be called on the string, not written inside the quotes.
        url = "https://movie.douban.com/top250?start={}&filter=".format(start)
        print("正在处理url:", url)
        r = requests.get(url, headers=headers)
        soup = BeautifulSoup(r.text, "html.parser")
        url_mv_list = soup.select("#content > div > div.article > ol > li > div > div.info > div.hd > a")
        # print(url_mv_list)
        for link in url_mv_list:
            url_mv = link["href"]
            list_url_mv.append(url_mv)
            print(url_mv)


    def loading_mv(url, number):
        """Fetch one movie detail page and return its fields as a list."""
        list_mv = []
        print("-正在处理第{}部电影-".format(number + 1))
        list_mv.append(number + 1)  # rank

        response_mv = requests.get(url=url, headers=headers)
        soup_mv = BeautifulSoup(response_mv.text, "html.parser")

        # Title
        mv_name = soup_mv.find_all("span", attrs={"property": "v:itemreviewed"})
        list_mv.append(mv_name[0].get_text())

        # Release year; the [1:5] slice assumes text of the form "(1994)".
        mv_year = soup_mv.select("span.year")
        list_mv.append(mv_year[0].get_text()[1:5])

        # Directors. The earlier version built this string but never appended it,
        # so every later value landed under the wrong column.
        mv_director = soup_mv.find_all("a", attrs={"rel": "v:directedBy"})
        list_mv.append("/".join(d.get_text() for d in mv_director))

        # Cast. The earlier version iterated over the characters of the first name.
        mv_star = soup_mv.find_all("a", attrs={"rel": "v:starring"})
        if not mv_star:
            list_mv.append(None)
        else:
            list_mv.append("/".join(s.get_text().strip() for s in mv_star))

        # Genres
        mv_type = soup_mv.find_all("a", attrs={"property": "v:genre"})
        list_mv.append("/".join(g.get_text() for g in mv_type))

        # Rating
        mv_score = soup_mv.select("strong.ll.rating_num")
        list_mv.append(mv_score[0].get_text())

        # Number of ratings
        mv_evaluation = soup_mv.select("a.rating_people")
        list_mv.append(mv_evaluation[0].get_text().strip())

        # Plot summary, with runs of whitespace collapsed to single spaces
        mv_plot = soup_mv.find_all("span", attrs={"class": "all hidden"})
        if not mv_plot:
            list_mv.append(None)
        else:
            list_mv.append(" ".join(mv_plot[0].get_text().split()))

        list_mv.append(url)
        return list_mv


    list_all_mv = []
    for number, url_mv in enumerate(list_url_mv):
        list_all_mv.append(loading_mv(url_mv, number))

    print("-运行结束-")

    # Ten columns, in the order loading_mv appends them: rank, title, year,
    # director, cast, genre, rating, number of ratings, summary, link.
    df = DataFrame(list_all_mv, columns=['电影排名','电影名称','上映年份','电影导演','电影主演','电影类型','电影评分','评价人数','电影简介','电影链接'])
    df.to_excel(r'豆瓣电影前Top250pppppppp.xlsx')
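
One possible explanation for the empty spreadsheet, offered as an assumption since the thread does not confirm it: if the listing request returns a page without the expected entries (for example, because the site rejected the request), list_url_mv stays empty, the final for loop never calls loading_mv, and an empty table is written without any error. The sketch below reproduces that behaviour with no network access and adds a guard that fails loudly instead. The names build_page_urls, scrape_all, and fake_loader are hypothetical and not part of the code above.

```python
def build_page_urls(total=250, page_size=25):
    """Return the Top 250 listing URLs, one per page of results."""
    template = "https://movie.douban.com/top250?start={}&filter="
    # .format() is called on the string object, outside the quotes.
    return [template.format(start) for start in range(0, total, page_size)]


def scrape_all(urls, loader):
    """Call loader(url, index) for every URL, refusing to run on an empty list."""
    if not urls:
        # Without this guard the comprehension below would silently do nothing.
        raise RuntimeError("no detail-page URLs collected; inspect the listing response")
    return [loader(url, index) for index, url in enumerate(urls)]


def fake_loader(url, index):
    """Hypothetical stand-in for loading_mv that performs no HTTP request."""
    return [index + 1, url]


if __name__ == "__main__":
    print(build_page_urls(total=50))
    print(scrape_all(["https://example.com/a"], fake_loader))
    try:
        scrape_all([], fake_loader)
    except RuntimeError as exc:
        print("guard triggered:", exc)
```

In the real script, it may also help to print r.status_code and len(url_mv_list) right after the listing request, so a blocked or empty response is visible before the detail pages are fetched.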