Changing the index name Filebeat reports to

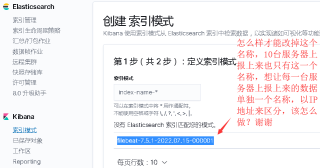

elasticsearch:7.5.1

kibana:7.5.1

filebeat:7.5.1

cat filebeat.yml | grep -v "#" | grep -v "^$"

logging.level: info
logging.to_files: true
logging.files:
  path: /var/log/filebeat
  name: filebeat
  keepfiles: 7
  permissions: 0644
filebeat.config:
  modules:
    path: "/opt/filebeat/modules.d/*.yml"
    reload.enabled: true
processors:
  - add_cloud_metadata: ~
  - add_docker_metadata:
      host: "unix:///var/run/docker.sock"
filebeat.autodiscover:
  providers:
    - type: docker
      hints.enabled: true
      hints.default_config:
        type: container
        paths:
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/lib/docker/containers/*/*.log
  json.keys_under_root: true
  json.add_error_key: true
  json.message_key: log
  tail_files: true
- type: log
  enabled: true
  paths:
    - /opt/nginx/logs/*.log
output.elasticsearch:
  hosts: '192.168.15.78:9200'
  username: 'elastic'
  password: 'im888'
  indices:
    - index: "192.168.15.63_nginx-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        stream: "nginx.host"
setup.kibana:
  host: "192.168.15.78:5601"
setup.template.name: "docker"
setup.template.pattern: "docker_*"
setup.template.enabled: false
setup.template.overwrite: true

Thanks, everyone.

- Configure a different index name in each Filebeat instance's config.

- That way each machine writes to its own index.
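A minimal sketch of that idea for the 7.5.1 setup in the question, assuming the machine's IP is hard-coded into each machine's own filebeat.yml (192.168.15.63 comes from the question; the `nginx` prefix is a hypothetical choice). In 7.x, `output.elasticsearch.index` is ignored while index lifecycle management is enabled, so this sketch turns ILM off, and the template name and pattern are set because changing the index name requires them.

```yaml
# Hypothetical per-machine config for host 192.168.15.63 (Filebeat 7.5.1).
# On every other machine only the IP inside "index" changes.
output.elasticsearch:
  hosts: ["192.168.15.78:9200"]
  username: "elastic"
  password: "im888"
  # Index names must be lowercase. In 7.x the version field is agent.version
  # (beat.version was the 6.x name).
  index: "nginx-192.168.15.63-%{[agent.version]}-%{+yyyy.MM}"

# Required when the index name is changed; the wildcard matches every machine's index.
setup.template.name: "nginx"
setup.template.pattern: "nginx-*"

# While ILM is enabled (the 7.x default), the index setting above is ignored.
setup.ilm.enabled: false
```

Because all machines share the `nginx-*` pattern, one template (and one Kibana index pattern) covers them all, while each machine's data still lands in a separate index.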


Add a name field set to the machine's IP in the config. Logstash then uses this value to fill in the host IP for data that does not carry it:

name: 10.x.x.x

############################# input #########################################
filebeat.prospectors:
# Collect system logs
- input_type: log
  paths:
    - /var/log/messages
    - /var/log/cron
  document_type: "messages"
# Collect the MySQL slow log, using multiline mode
- input_type: log
  paths:
    - /data/mysql/data/slow.log
  document_type: mysql_slow_log
  encoding: latin1   # ISO-8859-1
  multiline:
    pattern: "^# User@Host: "
    negate: true
    match: after
# Collect web logs
- input_type: log
  paths:
    - /data/wwwlogs/access_*.log
  document_type: "web_access_log"
# Tuning to avoid data hot spots:
# publish once 1024 messages have accumulated
filebeat.spool_size: 1024
# or after 5s of idle time
filebeat.idle_timeout: "5s"
# Set name to this machine's IP so Logstash can assign it to data that has no host IP
name: 10.x.x.x

############################# Kafka #########################################
output.kafka:
  # initial brokers for reading cluster metadata
  hosts: ["x.x.x.1:9092","x.x.x.2:9092","x.x.x.3:9092"]
  # message topic selection + partitioning
  topic: '%{[type]}'
  flush_interval: 1s
  partition.round_robin:
    reachable_only: false
  required_acks: 1
  compression: gzip
  max_message_bytes: 1000000
############################# Logging #########################################
logging.level: info
logging.to_files: true
logging.to_syslog: false
logging.files:
  path: /data/filebeat/logs
  name: filebeat.log
  keepfiles: 7
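That configuration uses 5.x syntax: `filebeat.prospectors`, `input_type`, `document_type`, `spool_size` and `idle_timeout` are not supported by Filebeat 7.x. A rough 7.x equivalent for the 7.5.1 version in the question might look like the untested sketch below. The custom field `log_type` is a hypothetical replacement for `document_type` so the Kafka topic can still be chosen per input, and the `queue.mem` values are assumed to approximate the old spool settings.

```yaml
# Sketch only: the 5.x config above rewritten for Filebeat 7.x.
filebeat.inputs:
  # System logs
  - type: log
    paths:
      - /var/log/messages
      - /var/log/cron
    fields:
      log_type: messages            # hypothetical field replacing document_type
  # MySQL slow log: lines not starting with "# User@Host:" join the previous event
  - type: log
    paths:
      - /data/mysql/data/slow.log
    encoding: latin1                # ISO-8859-1
    fields:
      log_type: mysql_slow_log
    multiline.pattern: '^# User@Host: '
    multiline.negate: true
    multiline.match: after
  # Web access logs
  - type: log
    paths:
      - /data/wwwlogs/access_*.log
    fields:
      log_type: web_access_log

# Roughly replaces spool_size / idle_timeout: hand events to the output
# once 1024 are buffered, or after at most 5s.
queue.mem:
  flush.min_events: 1024
  flush.timeout: 5s

# Still a valid top-level setting in 7.x.
name: 10.x.x.x

output.kafka:
  hosts: ["x.x.x.1:9092", "x.x.x.2:9092", "x.x.x.3:9092"]
  # Route each input to its own topic via the custom field.
  topic: '%{[fields.log_type]}'
  partition.round_robin:
    reachable_only: false
  required_acks: 1
  compression: gzip
  max_message_bytes: 1000000
```

Custom `fields` are nested under `fields` by default, which is why the topic format string reads `fields.log_type`.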

https://blog.csdn.net/weixin_52270081/article/details/121998840

Filebeat version 6.0.0 on the node.

Edit filebeat.yml and add or modify the following:

#-------------------------- Elasticsearch output ------------------------------
setup.template.name: "btcfile"   # use your own name; it must be lowercase, uppercase causes an error
setup.template.pattern: "btcfile-*"
output.elasticsearch:
  # Array of hosts to connect to.
  hosts: ["192.168.1.2:9200"]
  index: "btcfile-%{+yyyy.MM.dd}"   # index name

Load the index template manually: disable the Logstash output for this run and specify Elasticsearch as the output instead.

filebeat setup --template -E output.logstash.enabled=false -E 'output.elasticsearch.hosts=["192.168.1.2:9200"]'

Restart Filebeat: systemctl restart filebeat

Test from the master node:

$ curl '192.168.1.3:9200/_cat/indices?v'
health status index                 uuid                       pri rep docs.count docs.deleted store.size pri.store.size
green  open   btcfile-2019.01.30    oYsEaZXQAO2Q7yLPFx03brKjg    3   1        214            0    115.9kb         49.4kb
green  open   .kibana               VTfHCbEuQACAgdUSO5GsO8FZQ    1   1          3            1     47.5kb         23.7kb
green  open   system-syslog-2019.01 zflaFValeMQ6KN8PrKvjbWVPw    5   1      25577            0     32.1mb         15.9mb

It works.


Method 1:

In filebeat.yml, set the setup.template.name and setup.template.pattern options to match the new index name:

sudo vim /etc/filebeat/filebeat.yml

See the official documentation: https://www.elastic.co/guide/en/beats/filebeat/current/change-index-name.html

Method 2:

In 7.x, index lifecycle management (ILM) is enabled by default. To change the index name without turning ILM off, add the following to filebeat.yml:

setup.ilm.enabled: auto

setup.ilm.rollover_alias: "elk-file"

setup.ilm.pattern: "{now/d}-000001"

Reference: https://www.elastic.co/guide/en/beats/filebeat/current/ilm.html

Restart Filebeat after either change, then check the result.
