How do I keep line breaks when removing stop words with NLTK? (Language: Python)

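I want to preprocess my data with the nltk library. After tokenization the data is still split correctly into one line per record, but after stop word removal everything is merged into a single line. How can I fix this?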

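The relevant code is below. It was pieced together from forum posts and runs without errors:

(Earlier steps clean the data and produce t)

# Tokenize and remove stop words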

stop_words = set(stopwords.words('english'))

word_tokens = word_tokenize(t)

filtered_sentence = [w for w in word_tokens if w not in stop_words]

print(filtered_sentence)

原始数据为:

能分条

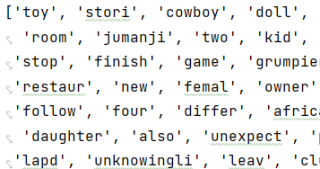

去停用词后:

全合成一行

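This happens because word_tokenize(t) treats the whole text as one string and returns a single flat list of tokens; the newlines count as whitespace and are dropped. To keep the line structure, split the text into lines first and process each line in a for loop, building a nested list. The example below produces the tokens with stop words removed line by line, and the result can be written directly to CSV or Excel: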

from nltk.corpus import stopwords

from nltk.tokenize import word_tokenize

import pandas as pd

example_sent = """This is a sample sentence,showing off the stop words filtration.\n Hello guys!"""

stop_words = set(stopwords.words('english'))

word_tokens = [word_tokenize(x) for x in example_sent.split('\n')]

filtered_sentence = []

# Filter each line separately so the result keeps one inner list per line

for wd in word_tokens:

    cent = []

    for w in wd:

        if w not in stop_words:

            cent.append(w)

    filtered_sentence.append(cent)

print(word_tokens)

print(filtered_sentence)

df=pd.DataFrame(filtered_sentence)

print(df)

Output (the final DataFrame):

0 1 2 3 4 5 6 7 8

0 This sample sentence , showing stop words filtration .

1 Hello guys ! None None None None None None
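Note that "This" survives in the output above: NLTK's English stop word list is lowercase and the comparison is case-sensitive. Below is a minimal sketch of the same per-line idea with a lowercase comparison. To run without NLTK data it uses a stand-in tokenizer and a tiny stand-in stop word set; `DEMO_STOP_WORDS`, `simple_tokenize` and `filter_lines` are hypothetical names used only for illustration. With NLTK installed, you would presumably pass `word_tokenize` and `set(stopwords.words('english'))` instead.

```python
import csv
import io
import re

# Stand-in stop word set so the sketch runs without NLTK data (assumption);
# in practice pass set(stopwords.words('english')) instead.
DEMO_STOP_WORDS = {"this", "is", "a", "off", "the"}


def simple_tokenize(line):
    """Stand-in tokenizer (assumption): words and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", line)


def filter_lines(text, stop_words=DEMO_STOP_WORDS, tokenize=simple_tokenize):
    """Return one list of kept tokens per input line."""
    rows = []
    for line in text.splitlines():
        # Compare in lowercase so capitalized stop words ("This") are dropped too.
        rows.append([w for w in tokenize(line) if w.lower() not in stop_words])
    return rows


text = "This is a sample sentence,showing off the stop words filtration.\n Hello guys!"
rows = filter_lines(text)

# Rebuild the text with one output line per input line.
joined = "\n".join(" ".join(row) for row in rows)
print(joined)

# Rows of unequal length can be written as-is with the csv module.
buffer = io.StringIO()
csv.writer(buffer).writerows(rows)
print(buffer.getvalue())
```

Writing the rows with the csv module avoids the None padding that appears when ragged rows are loaded into a DataFrame; replacing `io.StringIO()` with an opened file (using `newline=''`) would write the same rows to disk.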

If this was helpful, please accept the answer.
