Computing a Julia set on the GPU with the CUDA API takes longer than on the CPU

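I'm using the example at the end of Chapter 4 of 《GPU高性能编程CUDA实战》 (the Chinese edition of CUDA by Example). The CPU version takes 700+ ms and the GPU version takes 800+ ms.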

```cpp
// CPU version
// DIM, cuComplex and julia() are host-side counterparts of the definitions
// in the GPU listing below; they are not shown in the question.
#include <cstdio>
#include <ctime>
#include "../common/cpu_bitmap.h"  // CPUBitmap from the book's sample code

void kernel(unsigned char *ptr) {
    for (size_t y = 0; y < DIM; y++) {
        for (size_t x = 0; x < DIM; x++) {
            int offset = x + y * DIM;
            int juliaValue = julia(x, y);
            ptr[offset * 4 + 0] = 255 * juliaValue;
            ptr[offset * 4 + 1] = 0;
            ptr[offset * 4 + 2] = 0;
            ptr[offset * 4 + 3] = 255;
        }
    }
}

int main(void) {
    clock_t start, finish;
    double totaltime;
    start = clock();

    CPUBitmap bitmap(DIM, DIM);
    unsigned char *ptr = bitmap.get_ptr();
    kernel(ptr);

    finish = clock();
    totaltime = (double)(finish - start) / CLOCKS_PER_SEC;
    printf("time : %fs\n", float(totaltime));

    bitmap.display_and_exit();
    return 0;
}
```

```cuda
// GPU version
#include <cstdio>
#include <ctime>
#include "../common/cpu_bitmap.h"  // CPUBitmap from the book's sample code

#define DIM 1000

struct cuComplex {
    float r;
    float i;
    __device__ cuComplex(float a, float b) : r(a), i(b) {}
    __device__ float magnitude2(void) { return r * r + i * i; }
    __device__ cuComplex operator*(const cuComplex& a) {
        return cuComplex(r * a.r - i * a.i, i * a.r + r * a.i);
    }
    __device__ cuComplex operator+(const cuComplex& a) {
        return cuComplex(r + a.r, i + a.i);
    }
};

__device__ int julia(int x, int y) {
    const float scale = 1.5;
    float jx = scale * (float)(DIM / 2 - x) / (DIM / 2);
    float jy = scale * (float)(DIM / 2 - y) / (DIM / 2);

    cuComplex c(-0.8, 0.156);
    cuComplex a(jx, jy);

    for (size_t i = 0; i < 200; i++) {
        a = a * a + c;
        if (a.magnitude2() > 1000) return 0;
    }
    return 1;
}

__global__ void kernel(unsigned char *ptr) {
    // Map threadIdx/blockIdx to a pixel position
    int x = blockIdx.x;
    int y = blockIdx.y;
    int offset = x + y * gridDim.x;

    // Compute the value at that position
    int juliaValue = julia(x, y);
    ptr[offset * 4 + 0] = 255 * juliaValue;
    ptr[offset * 4 + 1] = 0;
    ptr[offset * 4 + 2] = 0;
    ptr[offset * 4 + 3] = 255;
}

int main(void) {
    clock_t start, finish;
    double totaltime;
    start = clock();

    CPUBitmap bitmap(DIM, DIM);
    unsigned char *dev_bitmap;
    cudaMalloc((void **)&dev_bitmap, bitmap.image_size());

    dim3 grid(DIM, DIM);
    kernel<<<grid, 1>>>(dev_bitmap);

    cudaMemcpy(bitmap.get_ptr(), dev_bitmap, bitmap.image_size(), cudaMemcpyDeviceToHost);
    cudaFree(dev_bitmap);

    finish = clock();
    totaltime = (double)(finish - start) / CLOCKS_PER_SEC;
    printf("time : %fs\n", float(totaltime));

    bitmap.display_and_exit();
    return 0;
}
```

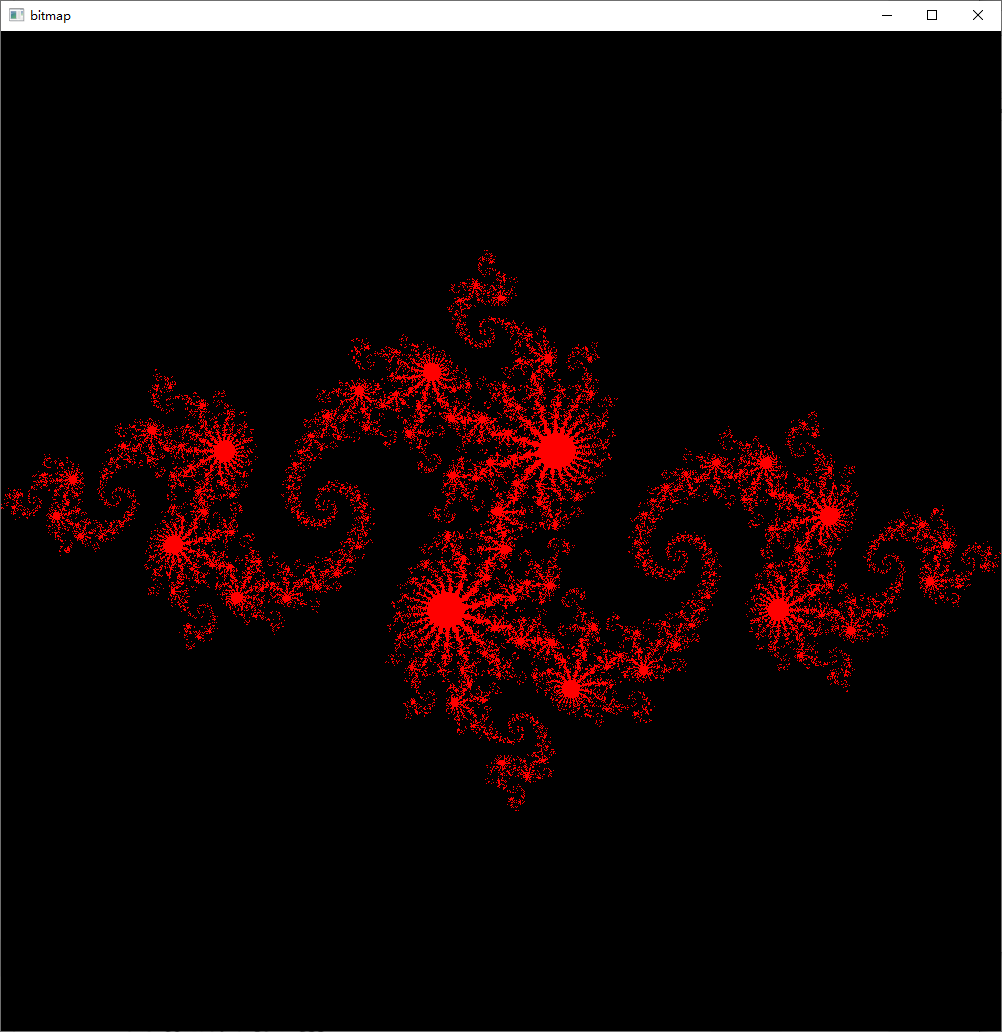

The program produces the image above.

Could anyone familiar with CUDA programming explain why the parallel GPU version takes longer?

Hardware: i7-8700 + GTX 1060 6GB.

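Reply: Where is `julia` defined? On the GPU, function calls carry a large overhead, and if it calls a function on the host, the overhead is even larger.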