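A question for everyone: the test module in my Scala project keeps throwing a constructor exception. As far as I can tell the code itself is fine, so what else could be causing this?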

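// Project-specific imports (TestCase, Logging, Const, Argument, ExecArgument, IInjectedJob) are omitted here
import java.io.File
import java.text.SimpleDateFormat
import java.util.Calendar

import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.api.java.JavaSparkContext

trait TestBase extends TestCase with Logging {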

// Create the JavaSparkContext

val appCtx = new JavaSparkContext(new SparkContext(new SparkConf().setAppName("test").setMaster("local")))

val sc = appCtx.sc

// val path = getClass.getResource("/").getPath.replace("bin/", "resources/")

val path = "C:\\xiangmu\\out\\production\\ScalaTest1"

Const.ROOT_PATH = if(path.endsWith("/")) path.substring(0, path.length -1) else path

Const.IS_DEBUG = true

// Default request arguments

val argument = new Argument(

"token",

"cn",

"sdr_XXX_circle",

"201810100000_201810110000",

Map[String, Array[String]](),

Map("cpu" -> "30", "mem" -> "10000"),

Map("batchno" -> "20000"))

val execArgs = new ExecArgument(

"sdr_XXX_circle",

"201810100000_201810110000",

Map[String, Array[String]]()

)

// Implicitly convert an Argument to a String

implicit def argument2String(arg: Argument) = {

Console println arg.toString

arg.toString

}

// Implicitly convert an ExecArgument to a String

implicit def execArgument2String(arg: ExecArgument) = {

Console println arg.toString

arg.toString

}

/**

* Get the time period of the input data, formatted as "begin_end"

* @param year

* @param month

* @param day

*/

def getPeriodTime(year: Int, month: Int, day: Int) = {

val sdf = new SimpleDateFormat("yyyyMMddHHmm")

val begin = Calendar.getInstance()

begin.set(year, month - 1, day, 0, 0, 0)

val beginStr = sdf.format(begin.getTime)

begin.add(Calendar.DAY_OF_MONTH, 1)

val endStr = sdf.format(begin.getTime)

s"${beginStr}_${endStr}"

}

/**

* Get the full paths of the test data (.dat) files

* @param fileName

*/

def travelPath(fileName: String): Array[String] = {

travelPathInner(s"${Const.CALC_INPUT_PATH}\\cn\\${fileName}").map(path => path.replace("\\", "/"))

}

private def travelPathInner(filePath: String): Array[String] = {

val file = new File(filePath)

if (file.exists()) {

if (file.isDirectory) {

val files = file.listFiles().map(_.getCanonicalPath).filter(_.endsWith(".dat"))

files ++ file.listFiles().map(_.getCanonicalPath).filter(!_.endsWith(".dat")).flatMap(path => travelPathInner(path))

} else {

Array(file.getCanonicalPath).filter(_.endsWith(".dat"))

}

} else {

Array[String]()

}

}

/**

* Get the execution result of an injected job

* @param job

* @return

*/

def getResult(job: IInjectedJob) = {

val map = job.process(null, null)

map.get("result").toBoolean

}

}

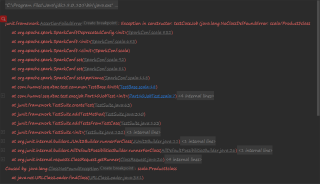

Update: I have now traced the actual cause to val appCtx = new JavaSparkContext(new SparkContext(new SparkConf().setAppName("test").setMaster("local"))) failing, that is, the JavaSparkContext fails to initialize.
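
For anyone hitting the same symptom, here is a minimal sketch of why a failure in that line is reported as a constructor exception. A val in a trait body is initialized while the constructor of the class mixing in the trait runs, so if the initializer throws, constructing the test class fails. When the class is instantiated reflectively, the original exception is wrapped and only visible as a cause. Everything below is hypothetical and Spark-free: FakeContext stands in for the SparkContext, and EagerBase, LazyBase, EagerTest and InitDemo are illustration names that are not part of the project above.

```scala
import scala.util.{Failure, Success, Try}

// Hypothetical stand-in for an expensive resource such as a SparkContext.
// It always fails here so the effect can be observed without Spark.
final class FakeContext(master: String) {
  if (master.isEmpty)
    throw new IllegalArgumentException("master URL must not be empty")
}

// Eager: the val is initialized while the constructor of any class
// mixing in this trait runs, so a failure aborts that constructor.
trait EagerBase {
  val ctx = new FakeContext("")
}

// Lazy: the val is initialized on first access, so construction succeeds
// and the failure is deferred to the place where ctx is first used.
trait LazyBase {
  lazy val ctx = new FakeContext("")
}

// Hypothetical test class, instantiated reflectively in the demo below.
class EagerTest extends EagerBase

object InitDemo {
  // Follow getCause links down to the innermost exception.
  @annotation.tailrec
  def rootCause(t: Throwable): Throwable = {
    val cause = t.getCause
    if (cause == null || (cause eq t)) t else rootCause(cause)
  }

  // Try to build a value and describe the outcome.
  def describe(build: => Any): String = Try(build) match {
    case Success(_) => "constructed"
    case Failure(e) =>
      val root = rootCause(e)
      s"failed: ${root.getClass.getSimpleName}: ${root.getMessage}"
  }

  def main(args: Array[String]): Unit = {
    // Reflective instantiation wraps the failure in an InvocationTargetException;
    // rootCause digs out the IllegalArgumentException thrown by FakeContext.
    println(describe(classOf[EagerTest].getDeclaredConstructor().newInstance()))
    // With a lazy val the object itself is constructed without error.
    println(describe(new LazyBase {}))
    // The failure only appears once ctx is actually accessed.
    println(describe((new LazyBase {}).ctx))
  }
}
```

Applied to the trait above, this suggests reading the innermost "Caused by" entry of the stack trace rather than the outer constructor error, since that entry should name what actually went wrong while the SparkContext was being created. Making appCtx a lazy val would not fix the underlying problem, but it would move the failure out of the constructor and closer to the real error.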
