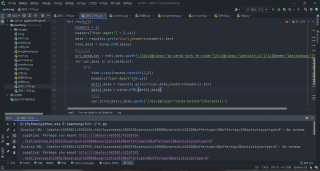

Python scraper: the request raises no error, but the information I want never shows up

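What kind of error is this? Why can't I find anything about this type of problem online? I keep running into it and searches turn up nothing, so I'd be grateful for a detailed explanation.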

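Some of the URLs collected in `url_dataList` are not complete addresses but relative paths. `requests` cannot fetch a URL that lacks the scheme and host, so each one has to be joined to the base URL first, along the lines of `base_url + rel_url`.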
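A minimal sketch of that join, using the standard library's `urllib.parse.urljoin` instead of plain string concatenation. `urljoin` also copes with protocol-relative links (`//host/path`) and leaves already-absolute URLs alone, whereas `base_url + rel_url` would produce broken addresses in both of those cases. The `href` values below are hypothetical examples of the shapes a listing page may contain; the base is the listing URL from the question.

```python
from urllib.parse import urljoin

# Listing-page URL from the question, used as the base for resolving links
LIST_URL = "https://www.che168.com/zhengzhou/b/#pvareaid=100649#filter"


def to_absolute(hrefs, base_url=LIST_URL):
    """Resolve each href against base_url; absolute URLs pass through unchanged."""
    return [urljoin(base_url, href) for href in hrefs]


# Hypothetical href values (not taken from the real site)
sample = [
    "/dealer/123/456.html",              # root-relative path
    "//www.che168.com/dealer/789.html",  # protocol-relative URL
    "https://www.che168.com/abc.html",   # already absolute
]
for u in to_absolute(sample):
    print(u)
```

The base URL's `#...` fragment is dropped automatically, because `urljoin` takes the fragment from the relative link, not from the base.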

```python
import random
import time

import requests
from lxml import etree
import pymongo  # imported but not used below


# User-Agent pool
def R_ua():
    uapools = [
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:93.0) Gecko/20100101 Firefox/93.0",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.81 Safari/537.36",
        "Mozilla/5.0 (Windows NT 10.0; WOW64; Trident/7.0; rv:11.0) like Gecko",
    ]
    # Pick a random UA string
    r_ua = random.choice(uapools)
    return r_ua


def req(url):
    headers = {}
    headers["User-Agent"] = R_ua()
    data = requests.get(url=url, headers=headers).text
    html_data = etree.HTML(data)

    # Collect the links to the detail pages
    # (some of these hrefs are relative paths, not full URLs)
    url_dataList = html_data.xpath('//div[@class="tp-cards-tofu fn-clear"]/ul[@class="viewlist_ul"]/li[@name="lazyloadcpc"]/a/@href')
    for car_data in url_dataList:
        try:
            time.sleep(random.randint(1, 5))
            headers["User-Agent"] = R_ua()
            detil_data = requests.get(url=car_data, headers=headers).text
            detil_data = etree.HTML(detil_data)
            # Title
            car_title = detil_data.xpath('//div[@class="cards-bottom"]/h4/text()')
            data = {
                "标题": car_title  # "title"
            }
            print(data)
        except Exception as e:
            print(e)


if __name__ == '__main__':
    url = "https://www.che168.com/zhengzhou/b/#pvareaid=100649#filter"
    req(url)
```

The above is the source code.
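One way to apply the relative-path fix to the code above is to factor the link-collection step out of `req()` so every `href` is resolved before it is requested. This is a sketch, not the original author's code: `extract_detail_urls` is a hypothetical helper name, it reuses the question's XPath unchanged, and it assumes `lxml` is installed. It also warns when the XPath matches nothing, because lxml then returns an empty list without raising, which makes the loop print nothing at all.

```python
from urllib.parse import urljoin

from lxml import etree

# Same XPath as in req() above
LIST_XPATH = ('//div[@class="tp-cards-tofu fn-clear"]/ul[@class="viewlist_ul"]'
              '/li[@name="lazyloadcpc"]/a/@href')


def extract_detail_urls(list_html, list_url):
    """Parse the listing page and return absolute detail-page URLs (hypothetical helper)."""
    tree = etree.HTML(list_html)
    hrefs = tree.xpath(LIST_XPATH)
    # An XPath with no matches returns [] silently, with no exception
    if not hrefs:
        print("warning: XPath matched no links on", list_url)
    # Resolve relative and protocol-relative hrefs against the listing URL
    return [urljoin(list_url, h) for h in hrefs]
```

Inside `req()`, the loop would then become `for car_data in extract_detail_urls(data, url):`, and `requests.get(url=car_data, ...)` receives a full URL each time.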