Python NetEase Cloud Music crawler: scraped song IDs and titles come out garbled

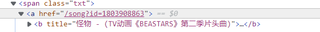

In the page source it looks like this:

But when I fetch the page with requests and parse it with BeautifulSoup, the result comes out garbled: `['${x.id}', '${x.name|escape}{if alia} - (${alia|escape}){/if}']`
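The values in that list are not really garbled characters: `${x.id}` and `${x.name|escape}{if alia}...{/if}` look like an unrendered JavaScript template that the browser fills in at runtime, and requests only downloads the HTML without running any script. If the parsed links still include such entries, a minimal sketch like the following can drop them from a list of `(href, text)` pairs. The names `PLACEHOLDER` and `drop_template_placeholders` are hypothetical, and this only hides the symptom; it does not fetch the real data.

```python
import re

# Matches template tokens such as ${x.id} or ${alia|escape}
PLACEHOLDER = re.compile(r"\$\{[^}]*\}")


def drop_template_placeholders(links):
    """Keep only (href, text) pairs that contain no unrendered ${...} token."""
    return [
        (href, text)
        for href, text in links
        if not PLACEHOLDER.search(href) and not PLACEHOLDER.search(text)
    ]
```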

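```python
import requests
from lxml import etree

# url is not defined in the original snippet; this chart URL is an assumed example.
# Note it has no "#/": everything after "#" is never sent to the server.
url = 'https://music.163.com/discover/toplist'

hotsongname = []
hotsongid = []

head = {
    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/85.0.4183.83 Safari/537.36'
}

response = requests.get(url, headers=head)
html = etree.HTML(response.text)

# Every <a> whose href contains "song?", e.g. /song?id=123
link_list = html.xpath('//a[contains(@href,"song?")]')
# Drop the last 11 matches: presumably links outside the song list
# on the page this was written against
link_list = link_list[0:-11]

for link in link_list:
    href = link.xpath('./@href')[0]
    song_id = href.split('=')[1]  # "/song?id=123" -> "123"
    hotsongid.append(song_id)

    song_name = link.xpath('./text()')[0]
    hotsongname.append(song_name)

# Map song title -> song id (a repeated title keeps only its last id)
songandsinger = dict(zip(hotsongname, hotsongid))
print(songandsinger)
```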
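Two changes can make the result less dependent on the `[0:-11]` slice. First, in a URL such as `https://music.163.com/#/discover/toplist`, the part after `#` is a fragment that is never sent to the server, so requests downloads the site's root page instead; removing the `#/` asks for the chart page itself. Second, the song links can be taken from one container rather than from every `song?` link on the page. The sketch below assumes that container is a hidden `<ul class="f-hide">`, which is how these pages have commonly been built, but check the HTML you actually receive. The function and class names are hypothetical, and only the standard library is used, so it parses a string you fetch yourself.

```python
from html.parser import HTMLParser


def strip_hash_route(url):
    """'https://music.163.com/#/discover/toplist' -> 'https://music.163.com/discover/toplist'."""
    return url.replace("/#/", "/", 1)


class FHideSongParser(HTMLParser):
    """Collect (title, song_id) from links inside <ul class="f-hide"> (assumed container)."""

    def __init__(self):
        super().__init__()
        self.songs = []
        self._ul_depth = 0    # > 0 while inside the f-hide <ul> (counts nested <ul>s)
        self._song_id = None  # id of the <a> currently being read, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "ul":
            if self._ul_depth:
                self._ul_depth += 1
            elif "f-hide" in (attrs.get("class") or "").split():
                self._ul_depth = 1
        elif tag == "a" and self._ul_depth:
            href = attrs.get("href") or ""
            if "song?id=" in href:
                self._song_id = href.split("song?id=", 1)[1].split("&")[0]
                self._text = []

    def handle_data(self, data):
        if self._song_id is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._song_id is not None:
            self.songs.append(("".join(self._text).strip(), self._song_id))
            self._song_id = None
        elif tag == "ul" and self._ul_depth:
            self._ul_depth -= 1


def parse_chart(page_html):
    """Return a list of (title, song_id) pairs found in the f-hide list."""
    parser = FHideSongParser()
    parser.feed(page_html)
    parser.close()
    return parser.songs
```

With requests installed, you would call it as `parse_chart(requests.get(strip_hash_route(url), headers=head).text)`; wrapping the result in `dict(...)` gives the same title-to-id mapping as `songandsinger`.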

If this solves your problem, please accept the answer.