How do I extract the text inside it?

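Use a regular expression to pull the {...} part out of the string,

then convert it to a dict with json.loads(); after that you can read the values by key, as with any dictionary.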

Example:

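```python
import re
import json

s = 'window._appState = {"aaa":"文字1","bbb":"文字2"};'

# Greedy match from the first "{" to the last "}"; re.S also lets "." match newlines
matches = re.findall(r'\{.+\}', s, re.S)

# Parse the matched JSON text into a Python dict
dic = json.loads(matches[0])

for k, v in dic.items():
    print(k, v)
```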
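The pattern \{.+\} is greedy, so when the script contains more code after the object (for example another {...} later in the same text), the match runs on to the last } and json.loads fails. A minimal sketch, assuming the object directly follows the `window._appState =` assignment, lets the JSON decoder decide where the object ends instead; `extract_app_state` is a hypothetical helper name, not part of any library.

```python
import json
import re


def extract_app_state(script_text: str) -> dict:
    """Return the object assigned to window._appState in a script's text.

    Hypothetical helper: assumes the assigned value is a JSON object literal.
    """
    # Locate the assignment; \s* tolerates any spacing around "="
    m = re.search(r'window\._appState\s*=\s*', script_text)
    if m is None:
        raise ValueError('window._appState not found')
    # raw_decode parses one JSON value from the start of the string and
    # ignores whatever follows it (";", more JavaScript, ...)
    obj, _end = json.JSONDecoder().raw_decode(script_text[m.end():])
    return obj


script = 'window._appState = {"aaa":"文字1","bbb":"文字2"}; window.other = {"x":1};'
print(extract_app_state(script))
```

Because the decoder stops at the closing brace that matches the opening one, nested objects and trailing code no longer confuse the extraction.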

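Alternatively, use split to cut the string at the first =, then evaluate the second part; this gives a dictionary object, and you can take the data you need with ordinary dictionary operations.

Assume the variable a holds the string above:

```python
a = 'window._appState = {"aaa":"文字1","bbb":"文字2"};'
# Split only at the first "=": b[0] is the variable name, b[1] the object literal
b = a.split('=', 1)
# Strip the surrounding whitespace and the trailing ";" first, otherwise eval raises SyntaxError
c = eval(b[1].strip().rstrip(';'))
print(c['aaa'])
```

Keep in mind that eval runs whatever code the page contains and fails on JSON literals such as true, false and null; json.loads(b[1].strip().rstrip(';')) builds the same dict without those risks.

First use a regular expression to extract everything inside the braces, then use json.loads() to convert the JSON string into a Python dictionary dic; you can then get the text by iterating over dic['tittle'].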
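The layout of the real `_appState` object is not shown in the question, so the key to iterate (`tittle` in the answer above) is a guess. When the structure is unknown, a hypothetical helper such as `iter_strings` below walks the parsed dicts and lists and yields every string value together with its key path, which helps locate where the wanted text lives. The sample `dic` is made-up data for illustration.

```python
from typing import Any, Iterator, Tuple


def iter_strings(data: Any, path: str = '') -> Iterator[Tuple[str, str]]:
    """Yield (key_path, text) for every string nested inside dicts and lists."""
    if isinstance(data, dict):
        for key, value in data.items():
            # Join dict keys with "." to build a readable path
            yield from iter_strings(value, f'{path}.{key}' if path else str(key))
    elif isinstance(data, list):
        for i, item in enumerate(data):
            # Mark list positions as [i]
            yield from iter_strings(item, f'{path}[{i}]')
    elif isinstance(data, str):
        yield path, data
    # Numbers, booleans and None are skipped


# Hypothetical sample data, not the real page structure
dic = {"a": {"title": "文字1", "tags": ["文字2", "文字3"]}, "count": 3}
for key_path, text in iter_strings(dic):
    print(key_path, text)
```

Once the path of the wanted text is known, it can be read directly, e.g. `dic['a']['title']` for this sample.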


```python
import requests
import re
import chardet
from lxml import etree
from bs4 import BeautifulSoup
import time
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
import pymysql

start_url = 'https://km.meituan.com/meishi/c36b4055/'

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.114 Safari/537.36'}
```

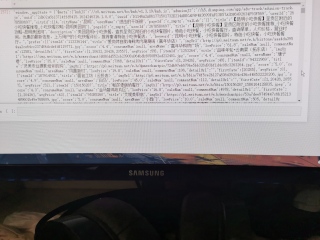

```python
cookie_str = '_lxsdk_cuid=17a22a5eecfc8-0ff130624eed26-4373266-1fa400-17a22a5eed0c8; mtcdn=K; lsu=; ci=114; rvct=114%2C1; _hc.v=fde5828f-834b-1a88-4542-8c8d1b934265.1624081924; _lx_utm=utm_source%3DBaidu%26utm_medium%3Dorganic; iuuid=D1146A2A861771501732E214ABECAD9F4E3699FAF13B57A3DB6492634F93F569; cityname=%E6%98%86%E6%98%8E; _lxsdk=D1146A2A861771501732E214ABECAD9F4E3699FAF13B57A3DB6492634F93F569; uuid=b1b7ca10c17b4c05846f.1624361541.1.0.0; IJSESSIONID=node01c62oeffa13w3urj9c045ndvr14115076; __mta=119886263.1624079382462.1624361542705.1624361560228.14; client-id=e303e794-ce60-4ef3-8231-3fabdce088d1; u=2678580697; n=%E8%BF%9B%E5%87%BB%E7%9A%84%E5%B9%B2%E9%A5%AD%E9%93%B6; lt=q72BgnEBFAE0om0hUw1IbEPNSbwAAAAAzg0AACoe5VD25GnJr-pDIVccXz8NmGQZk2jFZ-YUehjTiPCWUmmtOImQaWHbYud6keortg; mt_c_token=q72BgnEBFAE0om0hUw1IbEPNSbwAAAAAzg0AACoe5VD25GnJr-pDIVccXz8NmGQZk2jFZ-YUehjTiPCWUmmtOImQaWHbYud6keortg; token=q72BgnEBFAE0om0hUw1IbEPNSbwAAAAAzg0AACoe5VD25GnJr-pDIVccXz8NmGQZk2jFZ-YUehjTiPCWUmmtOImQaWHbYud6keortg; token2=q72BgnEBFAE0om0hUw1IbEPNSbwAAAAAzg0AACoe5VD25GnJr-pDIVccXz8NmGQZk2jFZ-YUehjTiPCWUmmtOImQaWHbYud6keortg; unc=%E8%BF%9B%E5%87%BB%E7%9A%84%E5%B9%B2%E9%A5%AD%E9%93%B6; firstTime=1624361585412; _lxsdk_s=17a337e2398-cda-626-ece%7C%7C10'

# Turn the "key=value; key=value" cookie header into a dict for requests
cookies = {}
for line in cookie_str.split(';'):
    # strip() removes the space after each ";", otherwise every key but the first starts with " "
    key, value = line.strip().split('=', 1)
    cookies[key] = value

R = requests.get(start_url, cookies=cookies, headers=headers)

# Detect the encoding from the raw response bytes
R.encoding = chardet.detect(R.content)['encoding']
Html = R.text  # decoded with the encoding set above

soup = BeautifulSoup(Html, "lxml")
print(soup.prettify())

# Text of every <script> tag; .string is None for tags with more than one child
b = [i.string for i in soup.find_all('script')]
c = b[14]  # the 15th <script> tag; this index depends on the page layout
print(c)
```
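Picking `b[14]` ties the code to the current page layout: if the page adds or removes a script tag, the index points at the wrong one. A minimal sketch, using only the standard library's `html.parser`, collects every script body and returns the object from the one that assigns `window._appState`, assuming (as in the sample string in the answers) that the value is a JSON object. `ScriptCollector` and `find_app_state` are hypothetical names.

```python
import json
import re
from html.parser import HTMLParser


class ScriptCollector(HTMLParser):
    """Collect the text content of every <script> element."""

    def __init__(self):
        super().__init__()
        self.scripts = []
        self._in_script = False

    def handle_starttag(self, tag, attrs):
        if tag == 'script':
            self._in_script = True
            self.scripts.append('')

    def handle_endtag(self, tag):
        if tag == 'script':
            self._in_script = False

    def handle_data(self, data):
        # A script body may arrive in several chunks, so append rather than assign
        if self._in_script:
            self.scripts[-1] += data


def find_app_state(html: str) -> dict:
    """Return the object assigned to window._appState in any <script> tag."""
    parser = ScriptCollector()
    parser.feed(html)
    parser.close()
    for text in parser.scripts:
        m = re.search(r'window\._appState\s*=\s*', text)
        if m:
            # Decode exactly one JSON value and ignore the code after it
            obj, _end = json.JSONDecoder().raw_decode(text[m.end():])
            return obj
    raise ValueError('no <script> assigns window._appState')


html = ('<html><head><script src="a.js"></script><script>var x = 1;</script></head>'
        '<body><script>window._appState = {"aaa":"文字1","bbb":"文字2"};</script></body></html>')
print(find_app_state(html))
```

In the scraper above, `find_app_state(Html)` could take the place of the `b[14]` lookup, assuming the page really embeds `_appState` as JSON.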