Beginner question: how do I scrape the src address from img tags?

https://www.gooood.cn/sl_release-apartment-by-pascali-semerdjian-arquitetos.htm

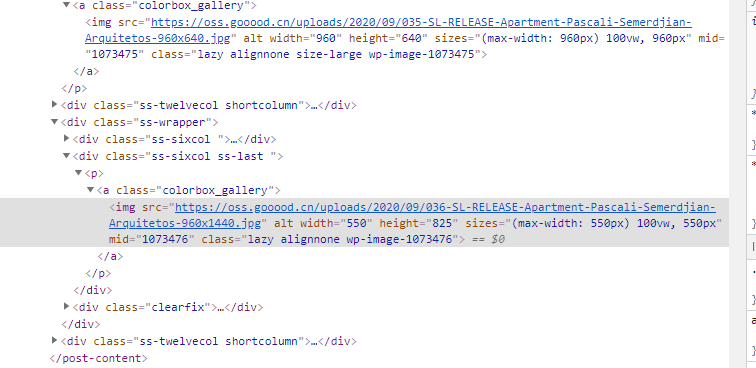

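The img tag doesn't seem to have anything unique to locate it by; for example, every image's img tag has a different class value. All I know how to do is reach the links with find_all('a', class_='colorbox_gallery'), and I'm stuck on getting the src out of the img inside each one. Any guidance would be appreciated.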

Also, while downloading the images I sometimes hit UnicodeEncodeError: 'gbk' codec can't encode character '\u0131' in position 7: illegal multibyte sequence

and the download stops. Is there a way to fix this?

    import requests
    from lxml import etree

    url = "https://www.gooood.cn/sl_release-apartment-by-pascali-semerdjian-arquitetos.htm"
    r = requests.get(url)
    print(r.content.decode())
    html = etree.HTML(r.content.decode())
    # Collect the src attribute of every img element on the page
    imgs = html.xpath("//img/@src")
    n = 1
    for i in imgs:
        print(i)
        response = requests.get(i)
        img = response.content
        # The imags folder must already exist in the current directory
        with open("./imags/{}.jpg".format(n), "wb") as f:
            f.write(img)
        n += 1

    # Tested; runs fine

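About the UnicodeEncodeError: the message names the 'gbk' codec, so some step in your script is encoding text as GBK, and GBK cannot represent '\u0131'. That encoding does not match the page. I opened the site and checked; it declares

<meta charset="utf-8">

so the page is UTF-8. Decode it as UTF-8, and use UTF-8 rather than GBK wherever you write or print the text.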
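A minimal sketch of that error and one way around it, using only the standard library. The sample string and the file name page.txt are made up for illustration; the only thing taken from the thread is the character '\u0131'.

```python
import os
import tempfile

# Hypothetical sample text containing U+0131 (dotless i), the character
# named in the error message above.
text = "Semerdjian \u0131"

# Reproduce the failure: GBK has no byte sequence for this character.
try:
    text.encode("gbk")
    gbk_failed = False
except UnicodeEncodeError:
    gbk_failed = True

# UTF-8 can encode any str.
utf8_bytes = text.encode("utf-8")

# When saving text, name the encoding explicitly instead of relying on
# the platform default (which may be GBK on a Chinese-locale Windows).
path = os.path.join(tempfile.mkdtemp(), "page.txt")
with open(path, "w", encoding="utf-8") as f:
    f.write(text)

with open(path, encoding="utf-8") as f:
    round_trip = f.read()
```

If the error comes from print() rather than from writing a file, calling sys.stdout.reconfigure(encoding="utf-8") (Python 3.7+) or simply not printing the page text are options worth trying.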

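As for not being able to locate the element: press F12 to open the developer tools, find the element there, copy the XPath the browser generates, and write yours along the same lines.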
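A hand-written path is often shorter than the copied one. The sketch below runs on a made-up, well-formed snippet and uses the standard library's xml.etree.ElementTree, which supports only a subset of XPath; it is a stand-in so the example runs without extra packages, not the lxml call from the answer above. With lxml, the equivalent expression would probably be //a[@class="colorbox_gallery"]//img/@src.

```python
import xml.etree.ElementTree as ET

# Hypothetical markup: each img has a different class, but the enclosing
# <a> shares the class "colorbox_gallery".
sample = """
<div>
  <a class="colorbox_gallery"><img class="a1" src="/img/1.jpg" /></a>
  <a class="other"><img class="b1" src="/img/skip.jpg" /></a>
  <a class="colorbox_gallery"><span><img class="c1" src="/img/2.jpg" /></span></a>
</div>
""".strip()

root = ET.fromstring(sample)

# Anchor on the parent <a>, then take any img below it.
imgs = root.findall(".//a[@class='colorbox_gallery']//img")

# ElementTree cannot select attributes in the path itself, so read src
# from each matched element.
srcs = [img.get("src") for img in imgs]
```

Note that [@class='colorbox_gallery'] is an exact match; if the real page puts several classes on the link, a contains() test in lxml would be needed instead.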

    import urllib.request
    from bs4 import BeautifulSoup

    headers = {"User-Agent": "Mozilla/5.0 (Windows NT 6.3; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.86 Safari/537.36"}
    url = "page URL"
    req = urllib.request.Request(url, headers=headers)
    data = urllib.request.urlopen(req).read()
    soup = BeautifulSoup(data, "html.parser")

    # Every gallery link on the page
    links = soup.find_all('a', class_='colorbox_gallery')
    imgs = []
    for link in links:
        # The img tag nested inside each link
        imgs.append(link.find('img'))
    for img in imgs:
        print(img)

Suppose the result of find('a') is stored in dat:

    img = dat.find('img')
    href = img.get('src')

href is then the image link.
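The same idea (match the link by its class, then read src from the img inside it) can be sketched without third-party packages. The class GalleryImageParser and the sample HTML are hypothetical; this uses the standard library's html.parser in place of BeautifulSoup purely so the example runs anywhere, and it skips links that contain no img, a case where find('img') would return None.

```python
from html.parser import HTMLParser


class GalleryImageParser(HTMLParser):
    """Collect src values of <img> tags inside <a class="colorbox_gallery">."""

    def __init__(self):
        super().__init__()
        self.in_gallery_link = False
        self.srcs = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a":
            # The class attribute may hold several space-separated names.
            classes = (attrs.get("class") or "").split()
            self.in_gallery_link = "colorbox_gallery" in classes
        elif tag == "img" and self.in_gallery_link:
            src = attrs.get("src")
            if src:  # ignore <img> tags that have no src attribute
                self.srcs.append(src)

    def handle_endtag(self, tag):
        if tag == "a":
            self.in_gallery_link = False


# Made-up markup: differing img classes, one unrelated link, one empty link.
sample_html = """
<a class="colorbox_gallery" href="/full/1.jpg"><img class="x1" src="/thumb/1.jpg"></a>
<a class="other"><img src="/ignored.jpg"></a>
<a class="colorbox_gallery extra"><img class="x2" src="/thumb/2.jpg"></a>
<a class="colorbox_gallery">no image here</a>
"""

parser = GalleryImageParser()
parser.feed(sample_html)
srcs = parser.srcs
```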

Also, images are binary data, so open the file in 'wb' mode and write it with f.write(response.content).
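A small sketch of why the mode matters. The bytes below are a made-up stand-in for response.content, and the temporary directory is only there so the example leaves nothing behind.

```python
import os
import tempfile

# Stand-in for response.content: a JPEG-like header plus padding bytes.
image_bytes = b"\xff\xd8\xff\xe0" + b"\x00" * 16

out_dir = tempfile.mkdtemp()
path = os.path.join(out_dir, "1.jpg")

# "wb" writes the bytes unchanged; no text encoding is involved.
with open(path, "wb") as f:
    f.write(image_bytes)

# Text mode ("w") only accepts str, so passing bytes raises TypeError.
try:
    with open(os.path.join(out_dir, "bad.jpg"), "w") as f:
        f.write(image_bytes)
    text_mode_rejected = False
except TypeError:
    text_mode_rejected = True

# Read the file back in binary mode to confirm nothing was altered.
with open(path, "rb") as f:
    saved = f.read()
```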