Converting TensorFlow code to Keras

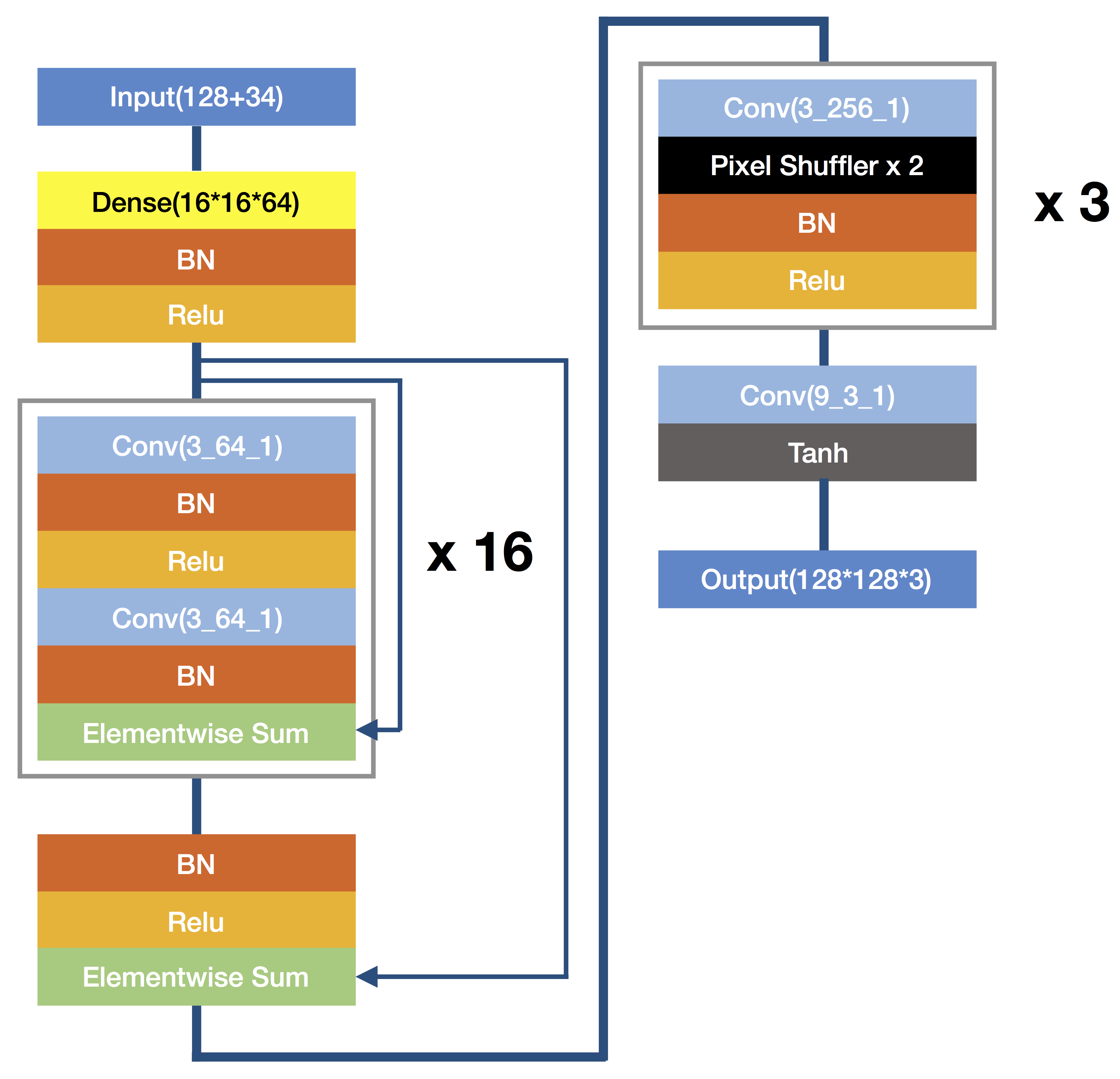

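I have the TensorFlow code below and the architecture diagram above. This is the generator part of an AC-GAN. It runs fine in native TF, but converting it to Keras is giving me a headache.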

    def batch_norm(inputs, is_training=is_training, decay=0.9):
        return tf.contrib.layers.batch_norm(inputs, is_training=is_training, decay=decay)

    # Build the residual block
    def g_block(inputs):
        h0 = tf.nn.relu(batch_norm(conv2d(inputs, 3, 64, 1, use_bias=False)))
        h0 = batch_norm(conv2d(h0, 3, 64, 1, use_bias=False))
        h0 = tf.add(h0, inputs)
        return h0

    # Generator
    # batch_size = 32
    # z : shape(32, 128)
    # label : shape(32, 34)
    def generator(z, label):
        with tf.variable_scope('generator', reuse=None):
            d = 16
            z = tf.concat([z, label], axis=1)
            h0 = tf.layers.dense(z, units=d * d * 64)
            h0 = tf.reshape(h0, shape=[-1, d, d, 64])
            h0 = tf.nn.relu(batch_norm(h0))
            shortcut = h0

            for i in range(16):
                h0 = g_block(h0)

            h0 = tf.nn.relu(batch_norm(h0))
            h0 = tf.add(h0, shortcut)

            for i in range(3):
                h0 = conv2d(h0, 3, 256, 1, use_bias=False)
                h0 = tf.depth_to_space(h0, 2)
                h0 = tf.nn.relu(batch_norm(h0))

            h0 = tf.layers.conv2d(h0, kernel_size=9, filters=3, strides=1,
                                  padding='same', activation=tf.nn.tanh, name='g', use_bias=True)
            return h0

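In Keras, as far as I understand, you always build a Model first and then keep adding layers to it.

The code above, however, has computations in the middle that combine new and old tensors, for example: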

    ....
    shortcut = h0
    ....
    h0 = tf.add(h0, shortcut)

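Do I really have to build a separate model just to hold the intermediate output?

Could someone explain how this works, or show how this should be written in Keras?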
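For reference, here is a rough sketch of how the generator might look with the Keras functional API, where an intermediate tensor such as `shortcut` is just a Python variable and no second model is needed. Several things here are assumptions rather than facts from the post: the `conv2d` helper is assumed to use `'same'` padding (the residual additions need matching shapes), `BatchNormalization(momentum=0.9)` is used as a stand-in for `decay=0.9` and may not match the `tf.contrib` defaults exactly, `tf.nn.depth_to_space` is wrapped in a `Lambda` layer, and the names `build_generator`, `z_dim` and `label_dim` are made up for illustration.

```python
import tensorflow as tf
from tensorflow.keras import layers, Model


def g_block(x):
    """Residual block: conv-BN-ReLU-conv-BN, then add the block input."""
    # Assumption: the original conv2d helper pads with 'same'.
    h = layers.Conv2D(64, 3, strides=1, padding="same", use_bias=False)(x)
    h = layers.BatchNormalization(momentum=0.9)(h)
    h = layers.ReLU()(h)
    h = layers.Conv2D(64, 3, strides=1, padding="same", use_bias=False)(h)
    h = layers.BatchNormalization(momentum=0.9)(h)
    return layers.Add()([h, x])


def build_generator(z_dim=128, label_dim=34, d=16):
    """Hypothetical builder; the batch dimension is left implicit."""
    z = layers.Input(shape=(z_dim,))
    label = layers.Input(shape=(label_dim,))

    h = layers.Concatenate(axis=1)([z, label])
    h = layers.Dense(d * d * 64)(h)
    h = layers.Reshape((d, d, 64))(h)
    h = layers.BatchNormalization(momentum=0.9)(h)
    h = layers.ReLU()(h)
    shortcut = h  # a plain Python reference to the symbolic tensor

    for _ in range(16):
        h = g_block(h)

    h = layers.BatchNormalization(momentum=0.9)(h)
    h = layers.ReLU()(h)
    h = layers.Add()([h, shortcut])  # long skip connection

    for _ in range(3):
        h = layers.Conv2D(256, 3, strides=1, padding="same", use_bias=False)(h)
        # Pixel shuffle: doubles height and width, divides channels by 4.
        h = layers.Lambda(lambda t: tf.nn.depth_to_space(t, 2))(h)
        h = layers.BatchNormalization(momentum=0.9)(h)
        h = layers.ReLU()(h)

    out = layers.Conv2D(3, 9, strides=1, padding="same",
                        activation="tanh", use_bias=True, name="g")(h)
    return Model(inputs=[z, label], outputs=out, name="generator")


generator = build_generator()
```

The point of the sketch is that calling a layer on a tensor returns a new tensor, so the earlier one stays available for a later `layers.Add()`. This is different from `Sequential`, which only supports a single straight chain of layers.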