Raspberry Pi car: how do I make it follow a line?

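At first I planned to use infrared line following, relying on different colors reflecting different amounts of infrared light. But when I asked the seller, they said a diffuse-reflection photoelectric switch won't work for this. I have also considered using OpenCV.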

Line following means steering the car by recognizing markings or colors on the floor. For a Raspberry Pi car, you can try the following approaches:

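Infrared sensors: mount infrared sensors on the underside of the car and decide which way to move from how strongly the floor reflects infrared light (a dark line reflects much less than a light floor). Your program then drives the motors according to the sensor readings.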
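As a rough illustration of that decision logic, here is a minimal sketch for a car with two digital IR tracking sensors placed on either side of a black line. It assumes each reading has already been converted to `True` meaning "this sensor is over the line"; many TCRT5000-style modules pull their output LOW over black, so check your module and invert the raw value if needed. The function name `ir_steer` and the command strings are hypothetical.

```python
def ir_steer(left_on_line: bool, right_on_line: bool) -> str:
    """Map two IR sensor readings to a steering command.

    Assumption (hypothetical setup): the two sensors straddle the line, and
    each argument is True when that sensor is over the dark line.
    """
    if not left_on_line and not right_on_line:
        # The line is between the sensors: keep going straight.
        return "forward"
    if left_on_line and not right_on_line:
        # The line has drifted under the left sensor: turn left to re-centre.
        return "left"
    if right_on_line and not left_on_line:
        # The line has drifted under the right sensor: turn right.
        return "right"
    # Both sensors see the line, e.g. a crossing or a stop marker.
    return "stop"
```

On the Pi, the two booleans could come from `GPIO.input(pin)` in the RPi.GPIO library; your own motor-driver code would then translate the returned command into motor outputs.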

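Camera and image processing: fit a camera and process its frames with the OpenCV library, recognizing markings or colors on the floor to decide the car's direction. Your algorithm analyzes each captured frame, finds the colored marking or its contour, and steers the car according to the result.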
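A common way to turn each frame into a steering decision is to threshold the image, take the centroid of the line pixels in the lower part of the frame, and steer in proportion to how far that centroid is from the image center. The sketch below assumes a dark line on a light floor and a grayscale frame; it uses plain NumPy so the logic is visible (with OpenCV you would typically use `cv2.threshold` and `cv2.moments` for the same steps). The names `line_offset` and `motor_speeds`, the threshold of 80, and the gains are hypothetical values to tune on your own car.

```python
from typing import Optional, Tuple

import numpy as np


def line_offset(gray: np.ndarray, threshold: int = 80,
                roi_fraction: float = 0.3) -> Optional[float]:
    """Horizontal position of a dark line near the bottom of the frame.

    Returns a value in [-1, 1] (-1 = left edge, 0 = centre, 1 = right edge),
    or None if no line pixels are found.
    """
    h, w = gray.shape
    # Only look at the bottom rows: the floor just in front of the car.
    roi = gray[h - max(1, int(h * roi_fraction)):, :]
    mask = roi < threshold          # True where the pixel is dark (the line)
    if not mask.any():
        return None                 # line lost
    cols = np.nonzero(mask)[1]      # column index of every dark pixel
    cx = float(cols.mean())         # centroid x, i.e. m10 / m00 of the mask
    half = (w - 1) / 2
    return (cx - half) / half


def _clamp(v: float, lo: float = 0.0, hi: float = 1.0) -> float:
    return max(lo, min(hi, v))


def motor_speeds(offset: float, base: float = 0.5,
                 kp: float = 0.4) -> Tuple[float, float]:
    """Proportional steering: returns (left, right) speeds in [0, 1].

    A positive offset (line to the right) speeds up the left wheel and slows
    the right one, turning the car towards the line.
    """
    left = _clamp(base + kp * offset)
    right = _clamp(base - kp * offset)
    return left, right
```

In a capture loop you would grab a frame (for example with `cv2.VideoCapture(0).read()`), convert it with `cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)`, and pass the two speeds to your motor driver as duty cycles; a `None` offset means the line was lost and the car should stop or search.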

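For the development itself, you can use Python on the Raspberry Pi. Its syntax is simple and it has mature image-processing and machine-vision libraries (OpenCV ships official Python bindings), which makes it a good fit for line following.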

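For implementation details and code examples, look at open-source projects, tutorials, and other material, such as Raspberry Pi car projects on GitHub and discussions on related forums. The official Raspberry Pi documentation and the OpenCV documentation also cover line following with a Raspberry Pi and OpenCV.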

- Here is a similar question you can refer to: https://ask.csdn.net/questions/7780799

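- You can also refer to this article: "OpenCV image processing: shrinking an image to half its original size [my own practice archive, not much reference value; read the experts' code instead, thanks]"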

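- In addition, Part 2, "From material preparation to prediction", of the blog post "Using OpenCV face recognition to tell celebrities apart doesn't seem to work very well" may help solve your problem. You can read it carefully below or jump to the original blog: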

- Download images

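One group of male stars and one group of female stars, 2 people per group, 9 photos per person. I got the images from Xiaoice; Baidu image search works just as well.

- Normalize image sizes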

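Training requires all images to be the same size, but the downloaded images vary in size, so they have to be unified. The simple, brute-force approach: find the smallest width and height among all the images, then scale every photo down to fit that size. The images must not be distorted, so the leftover space is padded with black.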

```python
import os

import cv2
import numpy as np


def unifyImg(strSource):
    """Crop detected faces into Face_only/ and letterbox every image to the
    smallest width/height found, writing the results into Unified/."""
    # Read all images and record the smallest width and height
    x_min = 9999
    y_min = 9999
    img_Bingbing = list()
    for name in os.listdir(strSource):
        strFileName = os.path.join(strSource, name)
        if not os.path.isfile(strFileName):
            continue
        img = cv2.imread(strFileName)
        if img is None:
            print('Failed when read file %s' % strFileName)
            continue
        y_min = min(y_min, img.shape[0])
        x_min = min(x_min, img.shape[1])
        img_Bingbing.append([img, name])

    # Haar cascades bundled with the opencv-python package
    frontFaceCascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')
    profileFaceCascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_profileface.xml')
    faceDir = os.path.join(strSource, 'Face_only')
    unifiedDir = os.path.join(strSource, 'Unified')
    os.makedirs(faceDir, exist_ok=True)
    os.makedirs(unifiedDir, exist_ok=True)

    for img, name in img_Bingbing:
        # Skip images in which neither a frontal nor a profile face is found
        faces = frontFaceCascade.detectMultiScale(img)
        if len(faces) == 0:
            faces = profileFaceCascade.detectMultiScale(img)
        if len(faces) == 0:
            print("no face detected on img: %s, deleted." % name)
            continue
        x, y, w, h = faces[0]
        cv2.imwrite(os.path.join(faceDir, name), img[y:y + h, x:x + w])

        # Scale to 98% of the limiting ratio so the output is guaranteed to be
        # smaller than x_min and y_min, keeping the aspect ratio
        rate = min(x_min / img.shape[1], y_min / img.shape[0]) * 0.98
        img_new = cv2.resize(img, None, fx=rate, fy=rate)
        # Pad the remaining area with black (works for gray and color images)
        img_template = np.zeros((y_min, x_min) + img_new.shape[2:], np.uint8)
        h, w = img_new.shape[:2]
        img_template[0:h, 0:w] = img_new
        cv2.imwrite(os.path.join(unifiedDir, name), img_template)


strSource = r'C:\Waley\Personal\Learning\Python\Image\TryFaceIdentify'
unifyImg(strSource)
```

- Pick some of the size-unified images and put them into a list for training

```python
import os
import sys

import cv2
import numpy as np

# Label 0: LiYiFeng (LYF_1..LYF_6), label 1: YiYangQianXi (YYQX_1..YYQX_6)
src = list()
labels = list()
for label, prefix in enumerate(["LYF", "YYQX"]):
    for i in range(1, 7):
        img = cv2.imread(os.path.join(strSource, "Unified", "%s_%d.jpg" % (prefix, i)), cv2.IMREAD_GRAYSCALE)
        if img is None:
            print("Fail to read images")
            sys.exit(1)
        src.append(img)
        labels.append(label)
names = {"0": "LiYiFeng", "1": "YiYangQianXi"}

# Create the recognizers (cv2.face requires the opencv-contrib-python package)
recognizer_eigenface = cv2.face.EigenFaceRecognizer_create()
recognizer_fisher = cv2.face.FisherFaceRecognizer_create()
recognizer_LBPH = cv2.face.LBPHFaceRecognizer_create()

# Train recognizer
recognizer_eigenface.train(src, np.array(labels))
recognizer_fisher.train(src, np.array(labels))
recognizer_LBPH.train(src, np.array(labels))
```

- Recognition

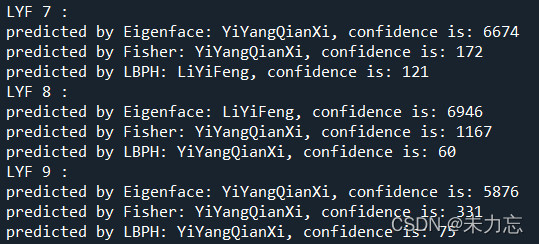

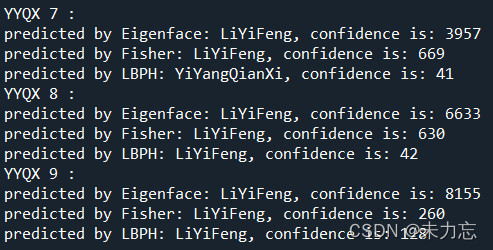

```python
import os

import cv2

# Predict on the held-out images 7..9 of each person
for prefix in ["LYF", "YYQX"]:
    for i in range(7, 10):
        img = cv2.imread(os.path.join(strSource, "Unified", "%s_%d.jpg" % (prefix, i)), cv2.IMREAD_GRAYSCALE)
        if img is None:
            print("Fail to read %s_%d.jpg" % (prefix, i))
            continue
        print("%s %d : " % (prefix, i))
        for method, recognizer in [("Eigenface", recognizer_eigenface),
                                   ("Fisher", recognizer_fisher),
                                   ("LBPH", recognizer_LBPH)]:
            label, confidence = recognizer.predict(img)
            print("predicted by %s: %s, confidence is: %d" % (method, names[str(label)], confidence))
    print("\n\n")
```

- The steps above can be combined into one function, which makes it easy to try other people:

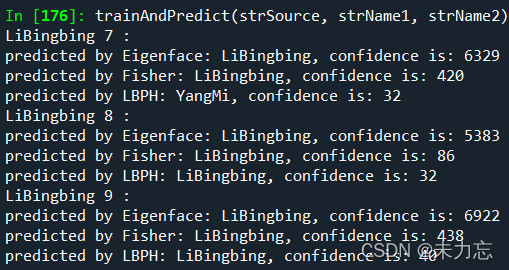

```python
import os
import sys

import cv2
import numpy as np


def trainAndPredict(strSource, strName1, strName2):
    """Train Eigenface, Fisherface and LBPH recognizers on images 1..6 of two
    people and print their predictions for images 7..9."""
    people = [strName1, strName2]
    names = {"0": strName1, "1": strName2}

    def read(name, i):
        path = os.path.join(strSource, "Unified", "%s_%d.jpg" % (name, i))
        return cv2.imread(path, cv2.IMREAD_GRAYSCALE)

    # Prepare the training dataset: label 0 for strName1, label 1 for strName2
    src = list()
    labels = list()
    for label, name in enumerate(people):
        for i in range(1, 7):
            img = read(name, i)
            if img is None:
                print("Fail to read images")
                sys.exit(1)
            src.append(img)
            labels.append(label)

    # Set up the recognizers (requires opencv-contrib-python) and train them
    recognizers = [
        ("Eigenface", cv2.face.EigenFaceRecognizer_create()),
        ("Fisher", cv2.face.FisherFaceRecognizer_create()),
        ("LBPH", cv2.face.LBPHFaceRecognizer_create()),
    ]
    for _, recognizer in recognizers:
        recognizer.train(src, np.array(labels))

    # Predict on the held-out images 7..9 of each person
    for name in people:
        for i in range(7, 10):
            img = read(name, i)
            if img is None:
                print("Fail to read %s_%d.jpg" % (name, i))
                continue
            print("%s %d : " % (name, i))
            for method, recognizer in recognizers:
                label, confidence = recognizer.predict(img)
                print("predicted by %s: %s, confidence is: %d" % (method, names[str(label)], confidence))
        print("\n\n")


#strSource = r'C:\Waley\Personal\Learning\Python\Image\TryFaceIdentify'
#strName1 = 'LYF'
#strName2 = 'YYQX'
strSource = r'C:\Waley\Personal\Learning\Python\Image\FaceIdentify'
strName1 = 'LiBingbing'
strName2 = 'YangMi'
trainAndPredict(strSource, strName1, strName2)
```

- The results were surprising

For the two male stars, there was essentially no recognition at all.

I'm not very familiar with either of them. Could the website's labels also have been assigned by AI, and gotten them wrong? Haha.
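With only three test images per person, "no recognition at all" is easier to judge as a number than by reading the printed lines. A small, hypothetical helper that counts correct predictions is sketched below; it accepts any callable returning `(label, confidence)`, which matches the shape of the `predict` calls used above. Keep in mind that, per the OpenCV documentation, the confidence returned by its face recognizers is a distance, so a lower value means a closer match.

```python
from typing import Callable, Sequence, Tuple


def accuracy(predict: Callable[[object], Tuple[int, float]],
             samples: Sequence[object],
             true_labels: Sequence[int]) -> float:
    """Fraction of samples whose predicted label equals the true label.

    `predict` is any callable returning (label, confidence), for example the
    bound method `recognizer.predict` of an OpenCV face recognizer.
    """
    if len(samples) != len(true_labels):
        raise ValueError("samples and true_labels must have the same length")
    if len(samples) == 0:
        return 0.0
    correct = sum(1 for s, y in zip(samples, true_labels) if predict(s)[0] == y)
    return correct / len(samples)
```

For example, `accuracy(recognizer_LBPH.predict, test_images, test_labels)`, where `test_images` and `test_labels` are hypothetical lists built from images 7..9 in the same way as the training lists.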

Switching to two female stars, the results seemed acceptable:

