Spark fails when run from PyCharm

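After deploying Spark locally, I installed pyspark with Anaconda. It runs fine from the command line, but when I run it in PyCharm with the Anaconda virtual environment, calling the collect operator raises an error.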

    Traceback (most recent call last):
      File "C:\Users\98579\Desktop\Spark_learning\test.py", line 12, in <module>
        mapRDD.collect()
      File "D:\Anaconda3\envs\pyspark\lib\site-packages\pyspark\rdd.py", line 1814, in collect
        sock_info = self.ctx._jvm.PythonRDD.collectAndServe(self._jrdd.rdd())
      File "D:\Anaconda3\envs\pyspark\lib\site-packages\pyspark\rdd.py", line 5441, in _jrdd
        wrapped_func = _wrap_function(
      File "D:\Anaconda3\envs\pyspark\lib\site-packages\pyspark\rdd.py", line 5243, in _wrap_function
        return sc._jvm.SimplePythonFunction(
    TypeError: 'JavaPackage' object is not callable

Any help would be appreciated, thanks.

Answer, combining ChatGPT output with my own understanding:

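This error is probably caused by environment variables that are not configured correctly in PyCharm. Note that the traceback shows the pyspark Python package itself being loaded from `D:\Anaconda3\envs\pyspark\lib\site-packages`; what fails is the lookup of a Spark class on the JVM side, which py4j reports as a non-callable 'JavaPackage' when it cannot resolve the name to a class.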
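One concrete form this misconfiguration can take is a version mismatch: the pyspark package in the Anaconda environment and the Spark distribution that SPARK_HOME points to are different releases, so the Python code asks the JVM for a class it does not have. This is an assumption, since the traceback alone does not prove it. A minimal sketch to compare the two, using only the standard library; the helper names are hypothetical, and it assumes the distribution keeps a `spark-core_<scala>-<version>.jar` file under `jars/`:

```python
import os
import re
from importlib import metadata
from pathlib import Path
from typing import Optional


def installed_pyspark_version() -> Optional[str]:
    """Return the version of the pyspark package in the active interpreter, or None."""
    try:
        return metadata.version("pyspark")
    except metadata.PackageNotFoundError:
        return None


def spark_home_version(spark_home: str) -> Optional[str]:
    """Guess the Spark version from the spark-core jar name under <spark_home>/jars.

    Assumption: the jar is named like spark-core_2.12-3.3.1.jar.
    """
    jars = Path(spark_home) / "jars"
    if not jars.is_dir():
        return None
    for jar in sorted(jars.glob("spark-core_*.jar")):
        match = re.match(r"spark-core_[\d.]+-(\d+(?:\.\d+)*)", jar.name)
        if match:
            return match.group(1)
    return None


def versions_match(pkg_version: Optional[str], home_version: Optional[str]) -> bool:
    """Compare major.minor only (a simplification; an exact match is the safest setup)."""
    if pkg_version is None or home_version is None:
        return False
    return pkg_version.split(".")[:2] == home_version.split(".")[:2]


if __name__ == "__main__":
    pkg = installed_pyspark_version()
    home = os.environ.get("SPARK_HOME")
    dist = spark_home_version(home) if home else None
    print("pyspark package  :", pkg)
    print("SPARK_HOME       :", home, "->", dist)
    print("major.minor match:", versions_match(pkg, dist))
```

Run it with the same interpreter and run configuration that PyCharm uses for the failing script. If the two versions differ, installing the pyspark release that matches the distribution (or pointing SPARK_HOME at a matching distribution) is worth trying first.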

You can try the following steps to resolve it:

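- Open the project in PyCharm, go to File->Settings->Project: your_project_name->Project Interpreter, and confirm that the Anaconda environment is selected as the interpreter.

- Click the "+" button, search for pyspark, and install the package.

- If no Java Development Kit (JDK) is installed, download and install one from the official website.

- Make sure the environment variables are configured correctly, including JAVA_HOME and SPARK_HOME.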

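- Add the following two lines at the top of your code, before importing pyspark, to help PyCharm locate the pyspark library:

      import findspark
      findspark.init()

- If the problem persists, you can try copying the pyspark library from the Anaconda environment into a lib folder inside the PyCharm project and adding that library in PyCharm.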
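The checks in the list above can be collected into a small script. The following is a sketch, not an official tool: `check_environment` is a hypothetical helper that only reports which interpreter is running, whether JAVA_HOME and SPARK_HOME point to existing directories, and where pyspark would be imported from.

```python
import importlib.util
import os
import sys
from typing import Dict, Mapping


def check_environment(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Summarise the settings the steps above ask you to verify."""
    report = {"interpreter": sys.executable}
    for name in ("JAVA_HOME", "SPARK_HOME"):
        value = env.get(name)
        if not value:
            report[name] = "not set"
        elif not os.path.isdir(value):
            report[name] = f"set to {value!r}, but that directory does not exist"
        else:
            report[name] = value
    # find_spec locates the package without importing it.
    spec = importlib.util.find_spec("pyspark")
    report["pyspark"] = (spec.origin or "found") if spec else "not importable"
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key:12s} {value}")
```

Running it once from the command line and once from a PyCharm run configuration makes differences between the two environments visible, which is the situation described in the question.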

I hope these suggestions help you solve the problem.

- I suggest reading this blog post: "Configuring PySpark in PyCharm with Anaconda" (Pycharm基于Anaconda配置PySpark).

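- In addition, the "2 Testing" section of the blog post "Connecting PyCharm to a remote Spark cluster with pyspark" (pycharm利用pyspark远程连接spark集群) may solve your problem. You can read the excerpt below or go to the original post: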

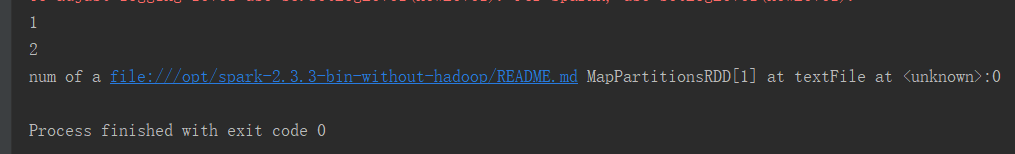

    import os
    from pyspark import SparkContext
    from pyspark import SparkConf

    # os.environ['SPARK_HOME'] = r"F:\big_data\spark-2.3.3-bin-without-hadoop"
    os.environ['SPARK_HOME'] = r"F:\big_data\spark-2.3.3-bin-hadoop2.6"
    # os.environ["HADOOP_HOME"] = r"F:\big_data\hadoop-2.6.5"
    # os.environ['JAVA_HOME'] = r"F:\Java\jdk1.8.0_144"
    print(0)
    conf = SparkConf().setMaster("spark://spark_cluster:7077").setAppName("test")
    sc = SparkContext(conf=conf)
    print(1)
    logData = sc.textFile("file:///opt/spark-2.3.3-bin-without-hadoop/README.md").cache()
    print(2)
    print("num of a",logData)
    sc.stop()
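The script above sets SPARK_HOME by hand before creating the SparkContext. A slightly more defensive version of that step might look like the sketch below, which does roughly what `findspark.init()` does: set SPARK_HOME and put the distribution's bundled Python sources on `sys.path`. The function name `use_spark_home` is hypothetical, and the layout (`python/` and `python/lib/py4j-*.zip` inside the distribution) is an assumption about the Spark download being used.

```python
import glob
import os
import sys
from typing import List


def use_spark_home(spark_home: str) -> List[str]:
    """Point this process at one Spark distribution; return the sys.path entries added."""
    python_dir = os.path.join(spark_home, "python")
    if not os.path.isdir(python_dir):
        raise FileNotFoundError(f"no 'python' directory under {spark_home!r}")
    os.environ["SPARK_HOME"] = spark_home
    # Assumption: the distribution bundles py4j as a zip under python/lib.
    py4j_zips = sorted(glob.glob(os.path.join(python_dir, "lib", "py4j-*.zip")))
    added = []
    for entry in [python_dir] + py4j_zips:
        if entry not in sys.path:
            sys.path.insert(0, entry)
            added.append(entry)
    return added
```

Call it before the first `from pyspark import ...` line, for example `use_spark_home(r"F:\big_data\spark-2.3.3-bin-hadoop2.6")` with the path from the script above. The import then resolves to the pyspark sources shipped with that distribution, so the Python side and the JVM side come from the same release.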