Multivariable logistic regression: only the reference group has P<0.05

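All the other dummy variables have P > 0.05, i.e. no statistically significant difference. How should output like this be interpreted?

I'm not sure whether your question has already been solved. If it hasn't: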

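- This article explains it in detail, please see: 机器学习03:使用logistic回归方法解决猫狗分类问题 ("Machine Learning 03: Solving the Cat-vs-Dog Classification Problem with Logistic Regression")

- In addition, section "4.2 使用梯度上升找到最佳参数" ("4.2 Finding the best parameters with gradient ascent") of the blog post 机器学习(三)Logistic回归 ("Machine Learning (3): Logistic Regression") may also help with your question. You can read the excerpt below or go to the source blog: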

Add the code for the Sigmoid function and the logistic regression gradient ascent algorithm:

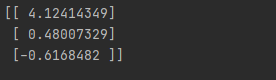

```python
import numpy as np
import matplotlib.pyplot as plt


def loadDataSet():
    """Read the dataset.

    Returns:
        dataMat: matrix of feature values (each row starts with a constant 1.0)
        labelMat: labels of the dataset
    """
    dataMat = []
    labelMat = []
    # Raw string so the backslashes in the Windows path are not treated as escapes
    with open(r'D:\迅雷下载\machinelearninginaction\Ch05\testSet.txt') as fr:
        for line in fr:
            # strip() with no arguments removes leading and trailing whitespace
            lineArr = line.strip().split()
            dataMat.append([1.0, float(lineArr[0]), float(lineArr[1])])
            labelMat.append(int(lineArr[2]))
    return dataMat, labelMat


def plotDataSet():
    """Plot the dataset. Takes no parameters and returns nothing."""
    dataMat, labelMat = loadDataSet()
    # Convert to a NumPy array
    dataArr = np.array(dataMat)
    n = np.shape(dataMat)[0]
    # Positive samples
    xcord1 = []; ycord1 = []
    # Negative samples
    xcord2 = []; ycord2 = []
    # Split the points by label
    for i in range(n):
        if int(labelMat[i]) == 1:
            xcord1.append(dataArr[i, 1]); ycord1.append(dataArr[i, 2])
        else:
            xcord2.append(dataArr[i, 1]); ycord2.append(dataArr[i, 2])
    fig = plt.figure()
    ax = fig.add_subplot(111)
    ax.scatter(xcord1, ycord1, s=20, c='red', marker='s', alpha=.5)
    ax.scatter(xcord2, ycord2, s=20, c='green', alpha=.5)
    plt.title('DataSet')
    plt.xlabel('x'); plt.ylabel('y')
    plt.show()


def sigmoid(inx):
    """Sigmoid function.

    Parameters:
        inx: value after one forward pass, before activation
    Returns:
        the activated value
    """
    return 1.0 / (1 + np.exp(-inx))


def gradAscent(dataMatIn, classLabels):
    """Gradient ascent algorithm.

    Parameters:
        dataMatIn: the dataset
        classLabels: the data labels
    Returns:
        weights: the fitted weight array (best parameters), shape (n, 1)
    """
    # Plain ndarrays with the @ operator replace the legacy np.mat matrices
    dataMatrix = np.array(dataMatIn, dtype=float)
    labelsMat = np.array(classLabels, dtype=float).reshape(-1, 1)
    m, n = np.shape(dataMatrix)
    # The dataset has m rows and n columns, so the weight vector has n rows
    weights = np.ones((n, 1))
    # Number of iterations
    maxIter = 500
    # Step size (learning rate)
    alpha = 0.001
    for k in range(maxIter):
        h = sigmoid(dataMatrix @ weights)
        # Compute the error
        error = labelsMat - h
        # Update the weights
        weights = weights + alpha * dataMatrix.T @ error
    return weights


if __name__ == '__main__':
    dataArr, labelMat = loadDataSet()
    print(gradAscent(dataArr, labelMat))
    plotDataSet()
```

The printed result is the set of regression coefficients found by the gradient ascent algorithm.
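As a rough illustration of how such coefficients can be used once they are found, the sketch below applies the same update rule to a small synthetic two-class dataset and then classifies each point by checking whether the sigmoid output is at least 0.5. The synthetic data, the `grad_ascent` and `predict` helper names, and the 0.5 threshold are assumptions made for this example. They are not part of the quoted blog post, which reads `testSet.txt` instead.

```python
import numpy as np


def sigmoid(z):
    """Logistic function."""
    return 1.0 / (1.0 + np.exp(-z))


def grad_ascent(X, y, alpha=0.001, max_iter=500):
    """Batch gradient ascent on the logistic log-likelihood (hypothetical helper)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float).reshape(-1, 1)
    w = np.ones((X.shape[1], 1))
    for _ in range(max_iter):
        error = y - sigmoid(X @ w)      # difference between labels and predictions
        w = w + alpha * X.T @ error     # step along the gradient
    return w


def predict(X, w, threshold=0.5):
    """Return 1 where the predicted probability reaches the threshold, else 0."""
    return (sigmoid(np.asarray(X, dtype=float) @ w) >= threshold).astype(int).ravel()


# Synthetic data (an assumption for this sketch): two well-separated clusters
rng = np.random.default_rng(0)
n = 100
pos = rng.normal(loc=[2.0, 2.0], scale=0.5, size=(n, 2))
neg = rng.normal(loc=[-2.0, -2.0], scale=0.5, size=(n, 2))

# Prepend a constant 1.0 column so the first weight acts as the intercept
X = np.hstack([np.ones((2 * n, 1)), np.vstack([pos, neg])])
y = np.array([1] * n + [0] * n)

w = grad_ascent(X, y)
accuracy = (predict(X, w) == y).mean()
print("weights:", w.ravel())
print("training accuracy:", accuracy)
```

The first weight multiplies the constant column and so plays the role of the intercept, and the remaining two weights correspond to the two features.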

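If you have already solved this problem, it would be great if you could share your solution: write it up as a blog post and put the link in the comments to help more people ^-^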