Could an expert please explain, step by step, how this code runs?

S = 'Pame'
for i in range(len(S)):
    print(S[-i],end="")

Explain what? Printing the string in reverse order?

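The code posted above uses indexing (not slicing) to print the characters of the string at indices 0, -1, -2, -3. Because -0 is equal to 0, the first iteration reads S[0]. These indices correspond to the characters 'P', 'e', 'm', 'a' in that order, so the code prints: Pema. S[index] retrieves the character at the given index position in the string S.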
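To make the index sequence visible, here is a minimal sketch that collects the indices and characters the loop visits instead of printing them one by one. The names `indices`, `chars`, and `result` are introduced only for this illustration and are not part of the original question.

```python
S = 'Pame'

# -0 is the same integer as 0, so the first iteration reads S[0],
# not the last character of the string.
assert -0 == 0

# The indices the original loop passes to S[...]: -0, -1, -2, -3
indices = [-i for i in range(len(S))]

# The character found at each of those indices
chars = [S[idx] for idx in indices]

# What the original loop prints, joined into one string
result = ''.join(chars)

print(indices)  # [0, -1, -2, -3]
print(chars)    # ['P', 'e', 'm', 'a']
print(result)   # Pema
```

The first character stays in place and only the remaining three come out reversed, which is why the output is Pema rather than emaP.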

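To print the string in reverse order, change the print statement inside the for loop so that i becomes i+1, that is, S[-(i+1)]. The indices then run through -1, -2, -3, -4, which are the negative indices of the characters from last to first.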

Reference link:

http://c.biancheng.net/view/2178.html

The test code is as follows:

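S = 'Pame'  # Assign the string 'Pame' to the variable S

# Iterate over every number from 0 to len(S)-1 (string length minus 1), i.e. 0, 1, 2, 3
for i in range(len(S)):
    #print("\ni=",i)
    # https://blog.csdn.net/Zombie_QP/article/details/125063501
    # Use indexing (not slicing) to print the characters at indices -0 (i.e. 0), -1, -2, -3
    # Index 0 is 'P', index -1 is 'e', index -2 is 'm', index -3 is 'a'
    # So inside the loop, the line below prints "Pema"
    print(S[-i],end="")
    # To print in reverse order, just add 1 to i
    # print(S[-(i+1)],end="")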
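The reverse-order variant suggested in the answer (using i + 1 in place of i) can be checked against two common Python idioms for reversing a string. This is only a sketch: the helper name `reverse_by_negative_index` is hypothetical and introduced here for illustration, and the slice and `reversed()` forms are alternatives that the original answer does not mention.

```python
S = 'Pame'

def reverse_by_negative_index(text):
    """Build the reversed string by reading indices -1, -2, ..., -len(text)."""
    return ''.join(text[-(i + 1)] for i in range(len(text)))

# The loop-based approach from the answer, collected into a string
reversed_loop = reverse_by_negative_index(S)

# Alternative 1: a slice with step -1 walks the string backwards
reversed_slice = S[::-1]

# Alternative 2: the built-in reversed() yields characters from last to first
reversed_builtin = ''.join(reversed(S))

print(reversed_loop)  # emaP
```

All three produce the same string. The explicit loop is useful for seeing how negative indices work, while the slice form is the shortest way to write it.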


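- The "Building the input pipeline" section of the blog post "Introduction to Sparse Data and Embeddings" may help with your problem. You can read the excerpt below or go to the source blog: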

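First, we configure the input pipeline to import the data into a TensorFlow model. We can use the following function to parse the training and test data (in TFRecord format) and return a dictionary of features together with the corresponding labels.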

def _parse_function(record): """Extracts features and labels. Args: record: File path to a TFRecord file Returns: A `tuple` `(labels, features)`: features: A dict of tensors representing the features labels: A tensor with the corresponding labels. """ features = { "terms": tf.VarLenFeature(dtype=tf.string), # terms are strings of varying lengths "labels": tf.FixedLenFeature(shape=[1], dtype=tf.float32) # labels are 0 or 1 } parsed_features = tf.parse_single_example(record, features) terms = parsed_features['terms'].values labels = parsed_features['labels'] return {'terms':terms}, labels为了确认函数是否能正常运行,我们为训练数据构建一个

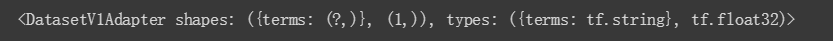

TFRecordDataset,并使用上述函数将数据映射到特征和标签。# Create the Dataset object. ds = tf.data.TFRecordDataset(train_path) # Map features and labels with the parse function. ds = ds.map(_parse_function) ds运行以下单元,以从训练数据集中获取第一个样本。

n = ds.make_one_shot_iterator().get_next()
sess = tf.Session()
sess.run(n)

Now we build a formal input function that can be passed to the train() method of a TensorFlow Estimator object.

# Create an input_fn that parses the tf.Examples from the given files,
# and split them into features and targets.
def _input_fn(input_filenames, num_epochs=None, shuffle=True):

  # Same code as above; create a dataset and map features and labels.
  ds = tf.data.TFRecordDataset(input_filenames)
  ds = ds.map(_parse_function)

  if shuffle:
    ds = ds.shuffle(10000)

  # Our feature data is variable-length, so we pad and batch
  # each field of the dataset structure to whatever size is necessary.
  ds = ds.padded_batch(25, ds.output_shapes)

  ds = ds.repeat(num_epochs)

  # Return the next batch of data.
  features, labels = ds.make_one_shot_iterator().get_next()
  return features, labels