[Process finished with exit code 0]

When I tried to write the data scraped with Python into a CSV file, I found that nothing had actually been scraped.

import requests

from bs4 import BeautifulSoup as bes

import time

import pandas as pd

import csv

import time

import datetime

with open('lianjia.csv',mode='w',newline='',encoding='ANSI') as f_in:

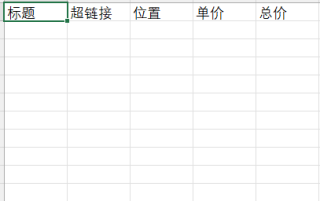

reader=csv.DictWriter(f_in,fieldnames=[

'标题',

'超链接',

'位置',

'单价',

'总价'])

reader.writeheader()

#爬取数据

url='https://cd.lianjia.com/ershoufang/'

header={

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36'

}

resp=requests.get(url,headers=header)

soup=bes(resp.text,'lxml')

# print(data)

#清洗数据

li_list=soup.select('body>.content >.leftContent>.sellListContent>.clear LOGCLICKDATA')

# body > .content > .leftContent > .sellListContent > .clear

for i in li_list:

title=i.select_one('li>.info clear>.title>a').text

print(title)

# 超链接

href = i.select_one('li > .info > .title > a').attrs['href']

print(href)

# 位置

area = i.select_one('li > .info > .flood > .positionInfo ').text.replace(' ', '')

print(area)

# 单价

unit_price = i.select_one('li > div.info > div.priceInfo > div.unitPrice > span').text

print(unit_price)

# 总价

total_price = i.select_one('li > div.info.clear > div.priceInfo > div.totalPrice > span').text

print(total_price)

dict={

'标题':'title',

'超链接':'href',

'位置':'area',

'单价':'unit_pricee',

'总价':'total_price'

}

reader.writerows(dict)

[Process finished with exit code 0]

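I tried to track down the error, but even with the CSV export taken out, every print comes back blank, and I can't tell which part of the scraping went wrong.

While we're at it, let's settle this age-old puzzle of [Process finished with exit code 0]~~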

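The data on this page is all loaded dynamically, so scraping the page URL head-on like this gets you nothing. Either use selenium, or press F12, find the URL that actually serves the data you want, and GET that instead.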
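
Before switching tools, it may be worth confirming the diagnosis. Here is a rough sketch, not a definitive fix: it checks whether a marker such as the `sellListContent` class name appears at all in the raw text the server returned, and it shows one way to write a row with `csv.DictWriter` using the actual variables rather than string literals. The helper names `found_in_raw_html` and `rows_to_csv` and all sample values are made up for illustration; in the real script the HTML would be `resp.text` and the values would come from the loop.

```python
import csv
import io

# Same column names as in the question's DictWriter.
FIELDS = ['标题', '超链接', '位置', '单价', '总价']


def found_in_raw_html(html, marker):
    """Return True if `marker` occurs in the HTML text the server returned.

    If this is False for resp.text, the data is not in the static page and
    no selector will find it (hypothetical helper, not a library function).
    """
    return marker in html


def rows_to_csv(rows):
    """Write a list of row dicts to CSV text, one writerow() call per row."""
    buffer = io.StringIO(newline='')
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    for row in rows:
        # writerow() takes ONE dict; writerows() takes an iterable of dicts.
        writer.writerow(row)
    return buffer.getvalue()


# Made-up stand-ins for resp.text and for the values extracted in the loop.
sample_html = '<ul class="sellListContent"><li class="clear">...</li></ul>'
title = 'Sample listing'
href = 'https://example.com/1.html'
area = 'Sample area'
unit_price = '10000'
total_price = '100'

print(found_in_raw_html(sample_html, 'sellListContent'))
print(rows_to_csv([{
    '标题': title,            # the variable, not the string 'title'
    '超链接': href,
    '位置': area,
    '单价': unit_price,
    '总价': total_price,
}]))
```

If the marker is missing from `resp.text`, the dynamic-loading explanation fits. If it is present but `li_list` is still empty, the selector is the more likely culprit: in CSS a space is a descendant combinator, so `.clear LOGCLICKDATA` looks for a `<LOGCLICKDATA>` element inside `.clear`, and `.info clear` looks for a `<clear>` element inside `.info`. Assuming those are two classes on the same element, they would be chained instead, as in `.clear.LOGCLICKDATA` and `.info.clear`. An empty `li_list` also explains the clean exit code 0: the `for` loop body never runs, so nothing is printed and nothing fails.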