Error with simple linear regression in machine learning

I got this error when drawing a scatter plot for a simple (one-variable) linear regression:

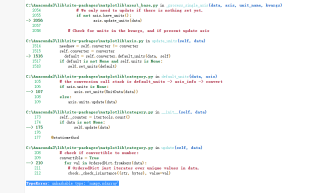

import pandas as pd

import matplotlib.pyplot as plt

df=pd.read_csv('chage.csv')

df['Deaths']=df['Deaths'].str.replace(',','')

df['Total']=df['Total'].str.replace(',','')

from sklearn import linear_model

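# astype returns a new Series, so assign the result back to the column

df['Deaths'] = df['Deaths'].astype(float)

df['Total'] = df['Total'].astype(float)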

# Set the x and y values

x = df[['Deaths']]

y = df[['Total']]

regr=linear_model.LinearRegression()

# Fit the model with fit()

regr.fit(x,y)

print(regr.coef_)  # weight (slope)

print(regr.intercept_)  # intercept

plt.rcParams['font.sans-serif'] = ['SimHei']

plt.rcParams['axes.unicode_minus'] = False

plt.xlabel('Deaths')

plt.ylabel('Total')

# Plot the original points as a scatter plot

plt.scatter(x, y, color='black')

# Plot the predicted points in red, with a marker edge width of 1

plt.scatter(x, regr.predict(x), color='red',linewidth=1)

plt.legend(['原始值','预测值'], loc = 2)

plt.show()

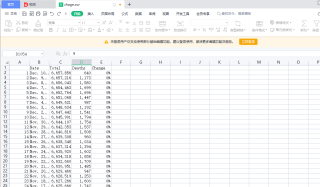

This is my dataset.

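- This article covers the topic in detail, please see: A General Approach to Solving Machine Learning Problems with Multiple Linear Regression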
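
One thing worth checking in code like the question's: `Series.astype` returns a new Series and does not modify the column in place, so the conversion only takes effect if the result is assigned back. The following is a minimal sketch of that step. It assumes the columns in `chage.csv` hold numbers written as strings with thousands separators; the small DataFrame is made up for illustration and is not the asker's data.

```python
import pandas as pd
from sklearn import linear_model

# Made-up stand-in for chage.csv (assumption): numbers stored as
# strings with thousands separators, as the str.replace calls suggest.
df = pd.DataFrame({
    'Deaths': ['1,000', '2,000', '3,000', '4,000'],
    'Total': ['10,000', '20,000', '30,000', '40,000'],
})

for col in ['Deaths', 'Total']:
    # Strip the commas, convert to float, and assign the result back;
    # without the assignment the column would stay as strings.
    df[col] = df[col].str.replace(',', '', regex=False).astype(float)

x = df[['Deaths']]  # 2-D feature matrix, as scikit-learn expects
y = df['Total']     # 1-D target

regr = linear_model.LinearRegression()
regr.fit(x, y)

slope = regr.coef_[0]
intercept = regr.intercept_

print(df.dtypes)         # both columns should now be float64
print(slope, intercept)
```

If the file really does use commas as thousands separators, `pd.read_csv('chage.csv', thousands=',')` may be a simpler way to get numeric columns directly, which would make the `str.replace` and `astype` steps unnecessary.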
