爬虫隐藏网址 寻找f12页面html的url

如何寻找element页面对应的url

有一些网站F12 element页面的html和浏览器框里的url 不一样

例如:

同城网 驴妈妈网

我发现这两个网站就是这样 我用request请求浏览器框里url输出得到的html比对f12页面的html发现是不一样的,得到的html似乎是resource里的html

这种情况应该如何处理?有没有什么好的方法找到F12 element里的url?

这是我写的代码 输出的content确实和f12的element不一样 我在终端输出搜索相关航班信息搜不到并且比较输出也并不一样

import requests

import bs4

import pandas

from bs4 import BeautifulSoup

url = "https://www.ly.com/flights/itinerary/oneway/SHA-PEK?date=2022-07-20&from=%E4%B8%8A%E6%B5%B7&to=%E5%8C%97%E4%BA%AC&fromairport=&toairport=&p=465&childticket=0,0%22"

headers = {'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36',

'Cookie': '__ftoken=SiOymoDFD1SbAMyp9SOl4TTo1x%2F6tIR8MM0ephJaV9o3jPaqKHles2cKFozvdKWPAVj6sfPo6CrmUnzKddGMFw%3D%3D; __ftrace=429083e3-cb32-4edc-a880-85431c3e3d0e; H5CookieId=a4192dd5-db42-4183-b17c-fc3d0d2c16f1; NewProvinceId=10; NCid=146; NewProvinceName=%E6%B2%B3%E5%8C%97; NCName=%E7%9F%B3%E5%AE%B6%E5%BA%84; Hm_lvt_64941895c0a12a3bdeb5b07863a52466=1657543447,1657615535; Hm_lpvt_64941895c0a12a3bdeb5b07863a52466=1657615535; 17uCNRefId=RefId=6928722&SEFrom=baidu&SEKeyWords=; TicketSEInfo=RefId=6928722&SEFrom=baidu&SEKeyWords=; CNSEInfo=RefId=6928722&tcbdkeyid=&SEFrom=baidu&SEKeyWords=&RefUrl=https%3A%2F%2Fwww.baidu.com%2Flink%3Furl%3DtedwxiynUMMKHrEjvoa3gKE4SGUfH0dEHZ_slvaTw6_%26wd%3D%26eqid%3D9fd8a5af000014d30000000362cd34ab; __tctma=144323752.1657543354871212.1657543354191.1657546827075.1657615534400.3; __tctmu=144323752.0.0; __tctmz=144323752.1657615534400.3.1.utmccn=(organic)|utmcmd=organic|utmEsl=gb2312|utmcsr=baidu|utmctr=; longKey=1657543354871212; __tctrack=0; searchSteps=2; AIRPLANECITYNAME=%25E5%258C%2597%25E4%25BA%25AC%2524%25E9%25A6%2599%25E6%25B8%25AF%2524%25241%25242022-07-30%2524%25242022-07-30%25240%2524%2524%2524%2524%2524%2524; fSearchHis=%25E5%258C%2597%25E4%25BA%25AC%2524%25E9%25A6%2599%25E6%25B8%25AF%2524%25241%25242022-07-30%2524%2524%25240%2524%2524%2524%2524%2524%2524; qdid=-9999; Hm_lvt_c6a93e2a75a5b1ef9fb5d4553a2226e5=1657543354,1657615544; AS_100=9b0f38f0a8f50abef9c7c011cfeae62607c54b0d64f61423e7e58b049c273622924a198954aea547ede1aa429652c38b4ef51a151a314a1ef46ed4178f394856; AS_101=9b0f38f0a8f50abef9c7c011cfeae62607c54b0d64f61423e7e58b049c273622924a198954aea547ede1aa429652c38b4ef51a151a314a1ef46ed4178f394856; AS_102=9b0f38f0a8f50abef9c7c011cfeae62607c54b0d64f61423e7e58b049c273622924a198954aea547ede1aa429652c38b4ef51a151a314a1ef46ed4178f394856; ecid=9b0f38f0a8f50abef9c7c011cfeae62607c54b0d64f61423e7e58b049c273622924a198954aea547ede1aa429652c38b4ef51a151a314a1ef46ed4178f394856; __tctmc=144323752.31187257; __tctmd=144323752.737325; Hm_lpvt_c6a93e2a75a5b1ef9fb5d4553a2226e5=1657615732; route=d3088eef331955fc8bfb0a19fe71f6f4; __sd_captcha_id=713cfd59-a49b-41a7-a212-4efa1f2374a7; tracerid=nologin-1657762899880',

'Origin': 'https://www.ly.com',

'Host': 'www.ly.com',

'Referer': 'https://www.ly.com/flights/itinerary/oneway/SHA-PEK?date=2022-07-20&from=%E4%B8%8A%E6%B5%B7&to=%E5%8C%97%E4%BA%AC&fromairport=&toairport=&p=465&childticket=0,0%22'

}

webpage_source = requests.get(url,headers = headers).text

content = BeautifulSoup(webpage_source, 'html5lib')

print(content)

因为有些网站中的内容是通过js代码读取外部json数据来动态更新的。

用F12查看到的代码是通过js动态更新后的内容,

而用requests只能获取网页的静态源代码,不会执行页面中的js, 动态更新的内容就取不到。

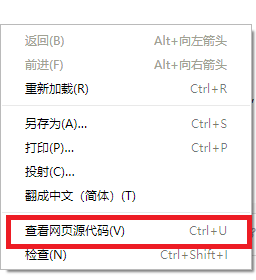

要查看网页的静态源代码应该在页面上点击右键,右键菜单中选 "查看网页源代码"。

这样看到的才是网页的静态源代码。

如果这个网页的静态源代码中有你需要爬取的内容,就说明该页面没有动态内容,可以用requests爬取。

否则就说明该页面的内容是动态更新的,对于动态更新的内容要用selenium 来爬取。

或者是通过F12控制台分析页面数据加载的链接,找到真正json数据的地址进行爬取。

网站不是一个url请求就将所有数据都请求到的,你要看你所需要的数据是那个url返回的

一般都是js动态生成的网页

f12 - 从网络那一栏找,刷新抓取url

你好,这个情况我也遇到过,你需要这样做,吧request到的网页通过debug方式保存下来,然后可以进行格式化,这样方便查找需要定位的元素,出现这种情况的原因是很多网站他有反爬的功能,因此,你用我这种办法就可以了,