How to replace append() with pd.concat() in pandas

Problem description and background

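I came across an exercise that asks me to replace append with concat to get rid of the warning. I'm not sure how to do it and would appreciate some help. Thanks a lot!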

Relevant code (please do not paste screenshots)

import random

import pandas as pd

df_norms = pd.DataFrame()
df = pd.DataFrame({'Individual_Scale': ['Scale A', 'Scale B', 'Scale C', 'Scale D', 'Scale E', 'Scale F'],
                   'Score': [random.random()*100 for _ in range(6)]})

for scale in ['Scale A', 'Scale B', 'Scale C', 'Scale D', 'Scale E', 'Scale F']:
    df_norms[scale] = sorted([random.random()*100 for _ in range(100)])
    # This is the line that raises the deprecation warning.
    df = df.append(pd.Series([scale, random.random()*100], index=df.columns), ignore_index=True)

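append adds rows to a DataFrame, but DataFrame.append was deprecated in pandas 1.4 and removed in 2.0. concat combines DataFrames that have matching columns, so you can just build a new one-row DataFrame with the same columns and concatenate it: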

for scale in ['Scale A', 'Scale B', 'Scale C', 'Scale D', 'Scale E', 'Scale F']:
    df_norms[scale] = sorted([random.random()*100 for _ in range(100)])
    # One-row DataFrame with the same columns as df.
    data = pd.DataFrame({'Individual_Scale': [scale], 'Score': [random.random()*100]})
    df = pd.concat([df, data], ignore_index=True)

for scale in ['Scale A', 'Scale B', 'Scale C', 'Scale D', 'Scale E', 'Scale F']:
    df_norms[scale] = sorted([random.random()*100 for _ in range(100)])
    d = pd.DataFrame([[scale, random.random()*100]], columns=df.columns)
    df = pd.concat([df, d], ignore_index=True)
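Both loops above call pd.concat once per iteration, which builds a new DataFrame each time. A minimal sketch of an alternative, assuming the same column names as in the question: collect the new rows in a plain list and concatenate once after the loop. The names `scales` and `new_rows` are made up for this illustration, and the df_norms part is left out for brevity.

```python
import random

import pandas as pd

scales = ['Scale A', 'Scale B', 'Scale C', 'Scale D', 'Scale E', 'Scale F']

# Starting frame, as in the question.
df = pd.DataFrame({'Individual_Scale': scales,
                   'Score': [random.random() * 100 for _ in range(6)]})

# Collect the new rows as plain dicts instead of growing df inside the loop.
new_rows = []  # hypothetical name, not from the original code
for scale in scales:
    new_rows.append({'Individual_Scale': scale, 'Score': random.random() * 100})

# A single concat at the end; ignore_index=True renumbers the rows 0..11.
df = pd.concat([df, pd.DataFrame(new_rows)], ignore_index=True)
print(df.shape)  # (12, 2)
```

For six rows the difference is negligible; it mainly matters when the loop runs many times.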

Does df.append really give a warning? At least in my environment it runs without one. Replacing append with concat would look roughly like this, I think?

>>> import pandas as pd
>>> import random
>>> df_norms = pd.DataFrame()
>>> df = pd.DataFrame({'Individual_Scale' : ['Scale A','Scale B','Scale C','Scale D','Scale E','Scale F'],
...                    'Score' : [random.random()*100 for _ in range(6)]})
>>> d = {'Individual_Scale': list(), 'Score': list()}
>>> for scale in ['Scale A','Scale B','Scale C','Scale D','Scale E','Scale F']:
...     df_norms[scale] = sorted([random.random()*100 for _ in range(100)])
...     d['Individual_Scale'].append(scale)
...     d['Score'].append(random.random()*100)
...
>>> df = pd.concat([df, pd.DataFrame(d)], ignore_index=True)

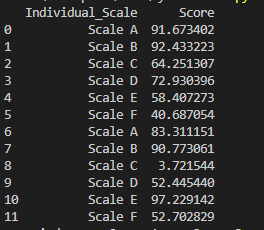

>>> df
    Individual_Scale      Score
0            Scale A  11.172429
1            Scale B  60.556072
2            Scale C  83.491132
3            Scale D  29.711069
4            Scale E   3.881941
5            Scale F  37.477554
6            Scale A  33.567009
7            Scale B  19.329035
8            Scale C  23.453353
9            Scale D  74.918504
10           Scale E  48.306329
11           Scale F  79.210148

>>> df_norms
      Scale A    Scale B    Scale C    Scale D    Scale E    Scale F
0    0.081019   1.051657   0.097027   0.290851   1.913932   0.068956
1    0.145290   2.009734   0.249679   1.844872   3.686597   0.397679
2    1.183374   2.135974   0.715719   1.985853   5.343396   0.464777
3    2.681695   4.154178   0.979934   4.583160   5.428240   0.568354
4    2.704504   7.787778   1.785705   4.763334   7.310502   2.048466
..        ...        ...        ...        ...        ...        ...
95  97.530636  97.993456  95.150912  94.789002  95.547736  93.160846
96  98.519154  98.129680  95.495692  95.644331  97.053258  93.254725
97  98.880739  98.895117  95.604106  97.643440  97.625797  95.610542
98  99.083063  99.516753  96.752881  99.645669  99.104014  95.693973
99  99.999940  99.529845  97.521243  99.863202  99.353511  97.750327

[100 rows x 6 columns]

# drop=True discards the old index instead of adding it as an extra 'index' column
pd.concat((df, pd.DataFrame({'Individual_Scale': ['Scale A', 'Scale B', 'Scale C', 'Scale D', 'Scale E', 'Scale F'],
                             'Score': sorted([random.random()*100 for _ in range(6)])}))).reset_index(drop=True)
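The last snippet resets the index after concatenating. As a rough illustration of what the different options do, the sketch below compares a bare reset_index(), reset_index(drop=True) and ignore_index=True on two tiny frames. The frames `a` and `b` and their values are invented for this example only.

```python
import pandas as pd

# Two small illustrative frames (made-up values).
a = pd.DataFrame({'Individual_Scale': ['Scale A', 'Scale B'], 'Score': [1.0, 2.0]})
b = pd.DataFrame({'Individual_Scale': ['Scale A', 'Scale B'], 'Score': [3.0, 4.0]})

# A bare reset_index() keeps the old row labels as a new 'index' column.
with_old_index = pd.concat((a, b)).reset_index()

# These two give the same result: rows renumbered 0..3, no extra column.
clean_reset = pd.concat((a, b)).reset_index(drop=True)
clean_ignore = pd.concat((a, b), ignore_index=True)

print(list(with_old_index.columns))      # ['index', 'Individual_Scale', 'Score']
print(list(with_old_index['index']))     # [0, 1, 0, 1]
print(clean_reset.equals(clean_ignore))  # True
```

If the old row labels carry no meaning, as in this thread, ignore_index=True is probably the shortest way to get a clean 0..n-1 index.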