PySpark error that I can't solve!

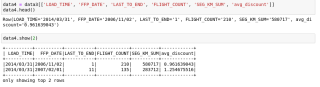

```python
import numpy as np
import pandas as pd

# dataTransformed = pd.DataFrame(columns=['L','R','F','M','C'])
tmp = {
    'L': pd.to_datetime(data4['LOAD_TIME']) - pd.to_datetime(data4['FFP_DATE']),  # convert to datetime first, then subtract
    'R': data4['LAST_TO_END'],
    'F': data4['FLIGHT_COUNT'],
    'M': data4['SEG_KM_SUM'],
    'C': data4['avg_discount'],
}
dataTransformed = pd.DataFrame(data=tmp, columns=['L', 'R', 'F', 'M', 'C'])
# Convert the timedelta to int (number of days)
dataTransformed['L'] = (dataTransformed['L'] / np.timedelta64(1, 'D')).astype(int)
dataTransformed.head()
```
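For comparison, here is a minimal pandas-only sketch of the same L/R/F/M/C transform. The post does not show how `data4` was created; the title and the error text suggest it may be a PySpark DataFrame, and that `ValueError` is what PySpark raises when a Spark `Column` ends up where Python needs a plain `bool`. Assuming that is the case, one option is to collect it into pandas first with `data4.toPandas()`, which only makes sense if the data fits in driver memory. The rows below are made-up toy values, and `.dt.days` is used instead of dividing by `np.timedelta64(1, 'D')`; for date-only values both give the same whole-day count.

```python
import pandas as pd

# Hypothetical toy rows standing in for data4 (the real values are not in the post).
data4 = pd.DataFrame({
    "LOAD_TIME": ["2014-03-31", "2014-03-31"],
    "FFP_DATE": ["2013-03-16", "2012-06-26"],
    "LAST_TO_END": [1, 7],
    "FLIGHT_COUNT": [210, 140],
    "SEG_KM_SUM": [580717, 293678],
    "avg_discount": [0.96, 1.25],
})
# Assumption: if data4 were a PySpark DataFrame, convert it first, e.g.
# data4 = data4.toPandas()

data_transformed = pd.DataFrame({
    # Parse both date columns, subtract, and keep the whole number of days.
    "L": (pd.to_datetime(data4["LOAD_TIME"]) - pd.to_datetime(data4["FFP_DATE"])).dt.days,
    "R": data4["LAST_TO_END"],
    "F": data4["FLIGHT_COUNT"],
    "M": data4["SEG_KM_SUM"],
    "C": data4["avg_discount"],
})
print(data_transformed)
```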

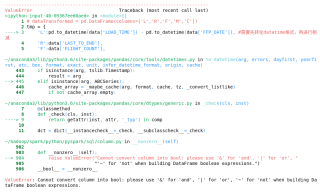

Does anyone know how to fix this error? I have no idea how to solve it!

The error message is as follows:

```
ValueError: Cannot convert column into bool: please use '&' for 'and', '|' for 'or', '~' for 'not' when building DataFrame boolean expressions.
```

For reference, compute the difference in pandas like this:

```python
pd.DataFrame(pd.to_datetime(time_df['END_TIME']) - pd.to_datetime(time_df['START_TIME']))
```
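A small worked sketch of that suggestion, assuming `time_df` is a pandas DataFrame whose `START_TIME` and `END_TIME` columns hold date strings (the rows here are hypothetical). The expression yields a one-column DataFrame of `Timedelta` values; because the two subtracted Series have different names, the result column is unnamed (label `0`), so it is selected by position before converting to whole days.

```python
import pandas as pd

# Hypothetical time_df; only the column names come from the answer.
time_df = pd.DataFrame({
    "START_TIME": ["2020-01-01", "2020-02-15"],
    "END_TIME": ["2020-01-31", "2020-03-01"],
})

# The answer's expression: a one-column DataFrame of Timedelta values.
diff = pd.DataFrame(pd.to_datetime(time_df["END_TIME"]) - pd.to_datetime(time_df["START_TIME"]))

# Take the single column by position and convert it to integer days.
diff_days = diff.iloc[:, 0].dt.days
print(diff_days.tolist())
```

2020 is a leap year, so the second interval spans February 29.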
