Help! An internal error occurred during: "Map/Reduce location status updater"

Running the Map/Reduce plugin in Eclipse throws the following error, although Hadoop itself appears to be running without problems:

An internal error occurred during: "Map/Reduce location status updater".

Cannot read the array length because "jobs" is null

Configuration files:

core-site.xml:

```xml
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/D:/hadoop-2.8.3/data</value>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
```

hdfs-site.xml:

```xml
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/D:/hadoop-2.8.3/data/namenode</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/D:/hadoop-2.8.3/data/datanode</value>
  </property>
  <property>
    <name>dfs.namenode.http-address</name>
    <value>0.0.0.0:50070</value>
    <description>
      The address and the base port where the dfs namenode web ui will listen on.
    </description>
  </property>
  <property>
    <name>dfs.datanode.address</name>
    <value>0.0.0.0:50010</value>
    <!-- Default Port: 50010. -->
  </property>
  <property>
    <name>dfs.datanode.http.address</name>
    <value>0.0.0.0:50075</value>
    <!-- Default Port: 50075. -->
  </property>
  <property>
    <name>dfs.datanode.ipc.address</name>
    <value>0.0.0.0:50020</value>
    <!-- Default Port: 50020. -->
  </property>
  <property>
    <name>dfs.secondary.http.address</name>
    <value>0.0.0.0:50090</value>
    <!-- Default Port: 50090. -->
  </property>
  <property>
    <name>dfs.http.address</name>
    <value>localhost:9870</value>
  </property>
</configuration>
```

yarn-site.xml:

```xml
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <!-- the key must match the aux-service name above (mapreduce_shuffle) -->
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
</configuration>

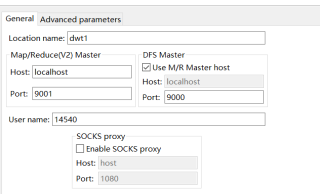

mapred-site-xml:

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapred.job.tracker</name>
    <value>localhost:9001</value>
    <!-- Default Port: 9001. -->
  </property>
  <property>
    <name>mapred.job.tracker.http.address</name>
    <value>0.0.0.0:50030</value>
    <!-- Default Port: 50030. -->
  </property>
  <property>
    <name>mapred.task.tracker.http.address</name>
    <value>0.0.0.0:50060</value>
    <!-- Default Port: 50060. -->
  </property>
</configuration>
```

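This error occurs because your HDFS is still empty. All you need to do is create a directory in it:

`hadoop fs -mkdir /input/`

Once the directory exists, the error no longer appears.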
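The same fix can be wrapped in a small reusable shell function. This is a minimal sketch: `ensure_hdfs_input_dir` is a hypothetical helper, not part of Hadoop, and it assumes the `hadoop` CLI is on `PATH` and that the NameNode configured in core-site.xml (`hdfs://localhost:9000`) is running. It checks for the directory with `hadoop fs -test -d` and only calls `hadoop fs -mkdir -p` when the directory is missing.

```shell
# Hypothetical helper (not part of Hadoop): make sure a directory exists in HDFS.
# Assumes the `hadoop` CLI is on PATH and fs.defaultFS (hdfs://localhost:9000) is reachable.
ensure_hdfs_input_dir() {
  dir="${1:-/input}"   # default to /input, the directory used in the answer above
  # `hadoop fs -test -d` exits 0 only if the path exists and is a directory
  if hadoop fs -test -d "$dir"; then
    echo "exists: $dir"
  else
    # -p creates missing parents and does not fail if the directory already exists;
    # return non-zero if HDFS is unreachable or the mkdir fails for another reason
    hadoop fs -mkdir -p "$dir" || return 1
    echo "created: $dir"
  fi
}
```

For example, running `ensure_hdfs_input_dir /input` and then `hadoop fs -ls /` should list `/input`; after that, the Map/Reduce location in Eclipse should be able to refresh without the "jobs" is null error.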