Hit TypeError: object of type 'NoneType' has no len() while learning web scraping; can someone point me in the right direction?

The program ran fine until I added the following code; after that it started raising an error.

soup = BeautifulSoup(html,"html.parser")
for item in soup.find_all('div',class_="item"):  # find every matching element; returns a list
    data = []
    item = str(item)
    link = re.findall(findLink,item)[0]  # use the re library to find the string matching the regex
    print(link)
    # print(item)

This is the error I get:

Traceback (most recent call last):
  File "F:\pythonProject\test2\t2.py", line 79, in <module>
    main()
  File "F:\pythonProject\test2\t2.py", line 23, in main
    datalist = getData(baseurl)
  File "F:\pythonProject\test2\t2.py", line 40, in getData
    soup = BeautifulSoup(html,"html.parser")
  File "E:\pythonProject\lib\site-packages\bs4\__init__.py", line 312, in __init__
    elif len(markup) <= 256 and (
TypeError: object of type 'NoneType' has no len()

The full code is below; it may be a bit messy.

from test1 import t1  # import a custom module
print(t1.add(3,4))
import urllib.request
import re
import bs4
from bs4 import BeautifulSoup
import sys
import urllib
import xlwt
import sqlite3

def main():
    baseurl = "https://movie.douban.com/top250?start=0"
    # 1. Fetch the pages
    datalist = getData(baseurl)
    savepath = ".\\doubanTop250.xls"
    # 2. Parse the data
    # 3. Save the data
    # saveData(savepath)
    # askURL("https://movie.douban.com/top250?start=")

findLink = re.compile(r'<a href="(.*?)">')

# 1. Fetch the pages
def getData(baseurl):
    datalist = []
    for i in range(0,1):  # call the page-fetching function (intended: 10 times)
        url = baseurl + str(i*25)
        html = askURL(url)  # keep the returned page source
        # 2. Parse the data
        soup = BeautifulSoup(html,"html.parser")
        for item in soup.find_all('div',class_="item"):  # find every matching element; returns a list
            data = []
            item = str(item)
            link = re.findall(findLink,item)[0]  # use the re library to find the string matching the regex
            print(link)
            # print(item)
    return datalist

# Get the content of the page at the given URL
def askURL(url):
    head = {  # mimic browser request headers when sending the request to Douban
        "User-Agent": "Mozilla / 5.0(Windows NT 10.0;Win64; x64) AppleWebKit / 537.36(KHTML, likeGecko) Chrome / 97.0.4692.71 Safari / 537.36Edg / 97.0.107255"
    }
    # The User-Agent tells the Douban server what kind of machine and browser we are (in essence, it tells the server what kind of content we can accept)
    request = urllib.request.Request(url,headers = head)
    html = ""
    try:
        response = urllib.request.urlopen(request)
        html = response.read().decode("utf-8")
        print(html)
    except urllib.error.URLError as e:
        if hasattr(e,"code"):
            print(e.code)
        if hasattr(e,"reason"):
            print(e.reason)
        return html

# 3. Save the data
def saveData(savepath):
    print("save...")

if __name__ == "__main__":
    main()

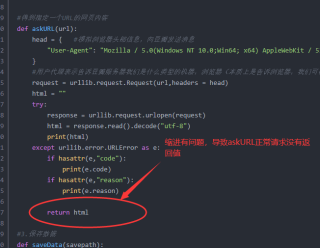
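A side note on the parsing loop in the listing above: re.findall(findLink, item)[0] raises IndexError whenever an item contains no matching link. The sketch below shows one way to guard against that using only the re module. The helper name first_link and the sample HTML string are hypothetical and made up for illustration; only the findLink pattern comes from the post.

```python
import re

# Same pattern as in the post: capture the href of an <a> tag.
findLink = re.compile(r'<a href="(.*?)">')


def first_link(item_html):
    """Return the first href captured by findLink, or None if nothing matches.

    Hypothetical helper, not part of the original script.
    """
    match = findLink.search(item_html)
    return match.group(1) if match else None


# Made-up sample markup standing in for one <div class="item"> block.
sample_item = '<div class="item"><a href="https://example.com/subject/1/"><img src="x.jpg"></a></div>'
empty_item = '<div class="item"></div>'

print(first_link(sample_item))
print(first_link(empty_item))
```

With a helper like this, the loop can skip items for which the result is None instead of crashing on the first item that lacks a link.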

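The return html in askURL is indented incorrectly: it sits inside the except block, so askURL returns None whenever the request succeeds, and BeautifulSoup then fails on that None. Move it outside the except block: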

def askURL(url):
    head = {  # mimic browser request headers when sending the request to Douban
        "User-Agent": "Mozilla / 5.0(Windows NT 10.0;Win64; x64) AppleWebKit / 537.36(KHTML, likeGecko) Chrome / 97.0.4692.71 Safari / 537.36Edg / 97.0.107255"
    }
    # The User-Agent tells the Douban server what kind of machine and browser we are (in essence, it tells the server what kind of content we can accept)
    request = urllib.request.Request(url,headers = head)
    html = ""
    try:
        response = urllib.request.urlopen(request)
        html = response.read().decode("utf-8")
        # print(html)
    except urllib.error.URLError as e:
        if hasattr(e,"code"):
            print(e.code)
        if hasattr(e,"reason"):
            print(e.reason)
    return html  # at function level, so it runs on success as well as after an error
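To see why a misplaced return leads to exactly this TypeError, here is a minimal offline sketch. It makes no network request: fetch_buggy and fetch_fixed are hypothetical stand-ins for askURL, and the fail flag and the OSError are assumptions used only to simulate a failed request.

```python
def fetch_buggy(fail):
    """Stand-in for the original askURL: the only return is inside except."""
    html = ""
    try:
        if fail:
            raise OSError("simulated network error")
        html = "<html>ok</html>"
    except OSError as e:
        print(e)
        return html  # reached only when an exception was raised
    # No return on the success path, so Python returns None here.


def fetch_fixed(fail):
    """Stand-in for the corrected askURL: return sits at function level."""
    html = ""
    try:
        if fail:
            raise OSError("simulated network error")
        html = "<html>ok</html>"
    except OSError as e:
        print(e)
    return html  # runs whether or not an exception occurred


result = fetch_buggy(fail=False)
print(repr(result))  # the success path falls off the end of the function

try:
    len(result)  # BeautifulSoup calls len() on its markup argument
except TypeError as e:
    print(e)

print(repr(fetch_fixed(fail=False)))
```

Even with the fix, askURL returns an empty string when the request fails, so it may be worth checking for an empty result before passing it to BeautifulSoup.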