What's wrong with this code? Could someone take a look?

    import requests

    # Page 1
    # https://www.dy2018.com/2/index_1.html
    # Page 2
    # https://www.dy2018.com/2/index_2.html
    # Page 3
    # https://www.dy2018.com/2/index_3.html


    class DYspider:
        """
        电影天堂 (dy2018) spider.
        Saves the first five pages of the action-movie category.
        """

        def __init__(self):
            # 1. URL template for the requests
            self.url = "https://www.dy2018.com/2/index_{}.html"

        def get_response(self, url):
            response = requests.get(url).content.decode()
            return response

        def save_page(self, content, page_num):
            """Save one page."""
            file_name = f"第{page_num}页.html"
            with open(f'./电影天堂/{file_name}', 'w', encoding='utf-8') as f:
                # Write to the file
                f.write(content)

        def run(self, page=5):
            """Entry point of the spider."""
            # 1. Build the URLs
            for pn in range(page):
                # pn takes the values 0 1 2 3 4
                url = self.url.format(pn+1)
                # Send the request and get the response
                content = self.get_response(url)
                # Save the full page content
                self.save_page(content, pn + 1)


    if __name__ == "__main__":
        # Instantiate and run
        DYspider().run()

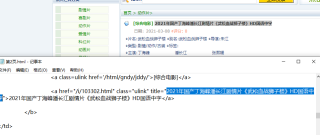

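The requests.get(url).content.decode() call needs request headers: add a referer and a user-agent, otherwise the site's anti-scraping check blocks the request and you get no data. Decode the content as GBK. The first-page URL is also wrong, so you need to check whether you are on the first page. Change it as follows: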

    import requests


    class DYspider:

        def __init__(self):
            # 1. URL template for the requests
            self.url = "https://www.dy2018.com/2/index_{}.html"

        def get_response(self, url):
            response = requests.get(url, headers={
                'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36 Edg/96.0.1054.62',
                'referer': url
            }).content.decode('gbk')  # decode as GBK
            return response

        def save_page(self, content, page_num):
            file_name = f"第{page_num}页.html"
            # Note: the ./电影天堂 directory must already exist
            with open(f'./电影天堂/{file_name}', 'w', encoding='utf-8') as f:
                # Write to the file
                f.write(content)

        def run(self, page=5):
            # 1. Build the URLs
            for pn in range(page):
                # pn takes the values 0 1 2 3 4
                if pn == 0:
                    # There is no index_1.html link; it returns 404
                    url = self.url.replace('_{}', '')
                else:
                    url = self.url.format(pn+1)
                # Send the request and get the response
                content = self.get_response(url)
                # Save the full page content
                self.save_page(content, pn + 1)


    if __name__ == "__main__":
        # Instantiate and run
        DYspider().run()
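
If it helps, here is a rough sketch of the first-page rule pulled out into its own function, plus a save step that creates the output folder when it is missing. The names build_page_url, BASE_URL and the out_dir parameter are made up for illustration and are not part of the code above. The folder creation is an extra guard of mine, on the assumption that ./电影天堂 may not exist yet.

```python
from pathlib import Path

# Hypothetical template: the suffix is empty for page 1 and "_N" for page N.
BASE_URL = "https://www.dy2018.com/2/index{}.html"


def build_page_url(page_num: int) -> str:
    """Return the listing URL for a 1-based page number."""
    if page_num < 1:
        raise ValueError("page_num must be >= 1")
    # Page 1 is index.html; index_1.html does not exist on the site.
    suffix = "" if page_num == 1 else f"_{page_num}"
    return BASE_URL.format(suffix)


def save_page(content: str, page_num: int, out_dir: str = "./电影天堂") -> Path:
    """Write one page into out_dir, creating the folder if needed."""
    directory = Path(out_dir)
    # Assumption: the folder may be missing, so create it instead of failing.
    directory.mkdir(parents=True, exist_ok=True)
    path = directory / f"第{page_num}页.html"
    path.write_text(content, encoding="utf-8")
    return path
```

In run() you could then loop over range(1, page + 1) and call build_page_url(pn) instead of branching on pn == 0.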