Keras: per-batch acc values collected through a callback lack precision

I want to use a callback to collect the acc of every batch during training.

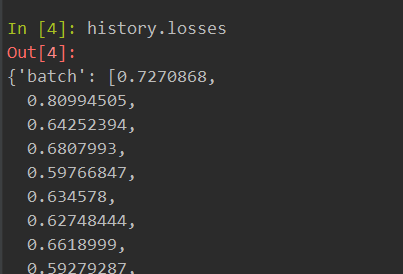

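However, the acc collected per batch has only two decimal places, while the acc collected per epoch keeps many decimal places. The loss values keep many decimal places whether they are collected per batch or per epoch.

The code is as follows: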

    import matplotlib.pyplot as plt
    from keras import callbacks
    from keras.layers import Dense, Dropout, Embedding, GRU
    from keras.models import Sequential


    class LossHistory(callbacks.Callback):
        """Records loss and accuracy at the end of every batch and every epoch."""

        def on_train_begin(self, logs=None):
            self.losses = {'batch': [], 'epoch': []}
            self.accuracy = {'batch': [], 'epoch': []}
            self.val_loss = {'batch': [], 'epoch': []}
            self.val_acc = {'batch': [], 'epoch': []}

        def on_batch_end(self, batch, logs=None):
            logs = logs or {}
            self.losses['batch'].append(logs.get('loss'))
            # 'acc' is the key used by older Keras releases; newer ones report 'accuracy'.
            self.accuracy['batch'].append(logs.get('acc'))
            # Validation metrics are not part of the batch logs, so these entries are None.
            self.val_loss['batch'].append(logs.get('val_loss'))
            self.val_acc['batch'].append(logs.get('val_acc'))

        def on_epoch_end(self, epoch, logs=None):
            logs = logs or {}
            self.losses['epoch'].append(logs.get('loss'))
            self.accuracy['epoch'].append(logs.get('acc'))
            self.val_loss['epoch'].append(logs.get('val_loss'))
            self.val_acc['epoch'].append(logs.get('val_acc'))

        def loss_plot(self, loss_type):
            """Plot the recorded curves; loss_type is 'batch' or 'epoch'."""
            iters = range(len(self.losses[loss_type]))
            plt.figure()
            # acc
            plt.plot(iters, self.accuracy[loss_type], 'r', label='train acc')
            # loss
            plt.plot(iters, self.losses[loss_type], 'g', label='train loss')
            if loss_type == 'epoch':
                # val_acc
                plt.plot(iters, self.val_acc[loss_type], 'b', label='val acc')
                # val_loss
                plt.plot(iters, self.val_loss[loss_type], 'k', label='val loss')
            plt.grid(True)
            plt.xlabel(loss_type)
            plt.ylabel('acc-loss')
            plt.legend(loc="upper right")
            plt.show()


    class Csr:

        def __init__(self, voc):
            self.model = Sequential()
            # Input: B*L
            self.model.add(Embedding(voc.num_words,
                                     300,
                                     mask_zero=True,
                                     weights=[voc.index2emb],
                                     trainable=False))
            # Embedding output: B*L*300
            self.model.add(GRU(256))
            # GRU output: B*256
            self.model.add(Dropout(0.5))
            self.model.add(Dense(1, activation='sigmoid'))
            # Output: B*1
            self.model.compile(loss='binary_crossentropy',
                               optimizer='rmsprop',
                               metrics=['accuracy'])
            print('compile complete')

        def train(self, x_train, y_train, b_s=50, epo=10):
            print('training.....')
            history = LossHistory()
            his = self.model.fit(x_train,
                                 y_train,
                                 batch_size=b_s,
                                 epochs=epo,
                                 callbacks=[history])
            history.loss_plot('batch')
            print('training complete')
            return his, history

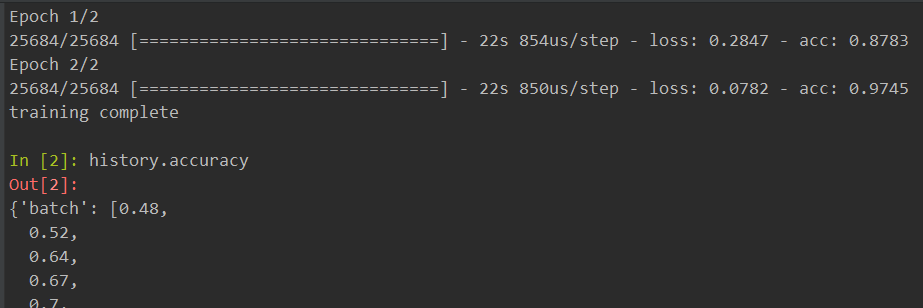

程序运行结果如下:
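One possible explanation, offered as an assumption rather than a confirmed diagnosis: if the logs passed to `on_batch_end` hold the accuracy of that single batch (as older Keras releases do), then with `batch_size=50` each value is a count of correct predictions divided by 50. That makes it a multiple of 0.02, which never needs more than two decimal places. The epoch value is computed over all samples, so it is not restricted in the same way. The sketch below only illustrates this arithmetic in plain Python; the per-batch counts are made up for illustration.

```python
from fractions import Fraction

BATCH_SIZE = 50  # same value as the default b_s in train()


def batch_accuracy(correct, batch_size=BATCH_SIZE):
    """Accuracy of one batch: correct predictions divided by the batch size."""
    return Fraction(correct, batch_size)


# Every accuracy value a batch of 50 samples can produce.
possible = [batch_accuracy(k) for k in range(BATCH_SIZE + 1)]

# Each one times 100 is a whole number, so two decimals are always enough.
all_two_decimals = all((value * 100).denominator == 1 for value in possible)

# Hypothetical numbers of correct predictions in three consecutive batches.
correct_per_batch = [41, 44, 39]
batch_values = [float(batch_accuracy(c)) for c in correct_per_batch]

# Accuracy over all samples seen so far: 124 / 150, about 0.8267.
epoch_value = sum(correct_per_batch) / (BATCH_SIZE * len(correct_per_batch))

print(all_two_decimals)
print(batch_values)
print(epoch_value)
```

If this is what is happening, the per-batch values are not being truncated; they are exact for a batch of that size.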

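- Regarding this question, I found a very good blog post; see whether it helps. Link: "How to use a Callback in Keras to run one pass over the validation set before training"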

If you have already solved this problem, please share your solution so that it can help more people ^-^
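If a smoother per-batch curve is wanted, one option is to record a running (cumulative) accuracy instead of the raw value of each batch. The following is a minimal sketch in plain Python. `RunningAccuracy` is a hypothetical helper, not a Keras API, and it assumes that both the batch accuracy and the batch size are available inside the callback (older Keras releases expose the latter as `logs.get('size')`; check this for your version).

```python
class RunningAccuracy:
    """Hypothetical helper: cumulative accuracy over the batches seen so far."""

    def __init__(self):
        self.reset()

    def reset(self):
        """Forget all batches, e.g. at the start of a new epoch."""
        self.correct = 0.0
        self.seen = 0

    def update(self, batch_acc, batch_size):
        """Add one batch and return the accuracy over all samples seen so far."""
        self.correct += batch_acc * batch_size
        self.seen += batch_size
        return self.correct / self.seen


# Illustrative per-batch accuracies for three batches of 50 samples each.
tracker = RunningAccuracy()
running = [tracker.update(acc, 50) for acc in (0.82, 0.88, 0.78)]
print(running)
```

Inside a callback such as `LossHistory`, `update` could be called from `on_batch_end` and `reset` from `on_epoch_begin`, appending the returned value to the batch list in place of the raw `acc`.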