Python crawler: proxy setting has no effect when scraping Douban Books with Scrapy and scrapy-splash. Could someone take a look? Thanks

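I am scraping Douban Books with the Scrapy framework and scrapy-splash, but the proxy setting does not work: even with the proxy configured, the response still says that login is required.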

settings内的FirstSplash.middlewares.FirstsplashSpiderMiddleware':823和FirstsplashSpiderMiddleware里面的 request.meta['splash']['args']['proxy'] = "'http://112.87.69.226:9999"是从网上搜的,代理ip是从【西刺免费代理IP】这个网站随便找的一个,scrapy crawl Doubanbook打印出来的text

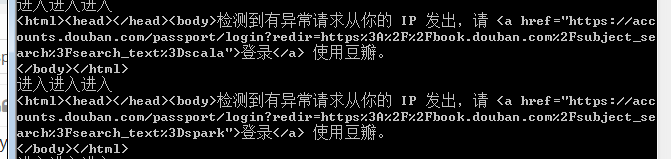

内容是需要登录。有没有大神帮忙看看,感谢!运行结果:

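Spider code:

    import scrapy
    from scrapy_splash import SplashRequest


    # The class name is not shown in the original post; this one is assumed.
    class DoubanBookSpider(scrapy.Spider):
        name = 'doubanBook'
        category = ''

        def start_requests(self):
            searchBook = ['python', 'scala', 'spark']
            for x in searchBook:
                self.category = x
                start_urls = ['https://book.douban.com/subject_search', ]
                url = start_urls[0] + "?search_text=" + x
                self.log("Start crawling: " + url)
                yield SplashRequest(url, self.parse_pre)

        def parse_pre(self, response):
            print(response.text)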

Proxy middleware configuration:

    class FirstsplashSpiderMiddleware(object):

        def process_request(self, request, spider):
            print("Entering proxy middleware")
            print(request.meta['splash']['args']['proxy'])
            request.meta['splash']['args']['proxy'] = "'http://112.87.69.226:9999"
            print(request.meta['splash']['args']['proxy'])

Settings configuration:

    BOT_NAME = 'FirstSplash'

    SPIDER_MODULES = ['FirstSplash.spiders']
    NEWSPIDER_MODULE = 'FirstSplash.spiders'

    ROBOTSTXT_OBEY = False

    # Docker + scrapy-splash configuration
    FEED_EXPORT_ENCODING = 'utf-8'

    # Docker service address
    SPLASH_URL = 'http://127.0.0.1:8050'

    # Duplicate filter
    DUPEFILTER_CLASS = 'scrapy_splash.SplashAwareDupeFilter'

    # Use Splash's HTTP cache
    HTTPCACHE_STORAGE = 'scrapy_splash.SplashAwareFSCacheStorage'

    # Spider middlewares changed to the ones shipped with Splash
    SPIDER_MIDDLEWARES = {
        'scrapy_splash.SplashDeduplicateArgsMiddleware': 100,
    }

    # Downloader middlewares changed to the ones shipped with Splash
    DOWNLOADER_MIDDLEWARES = {
        'scrapy_splash.SplashCookiesMiddleware': 723,
        'scrapy_splash.SplashMiddleware': 725,
        'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware': 810,
        'FirstSplash.middlewares.FirstsplashSpiderMiddleware': 823,
    }

    # Browser-like request headers
    DEFAULT_REQUEST_HEADERS = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/62.0.3202.89 Safari/537.36',
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    }

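Your proxy may not be a high-anonymity (elite) proxy. Free proxies also tend to get blacklisted once too many people have used them. You can visit http://www.httpbin.org/ip to check whether the proxy is actually taking effect.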
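To make the httpbin check concrete, here is a minimal sketch that uses only the Python standard library. The helper names clean_proxy_url and origin_ip are hypothetical and not part of Scrapy or scrapy-splash. It also strips stray quote characters, because the proxy string in the posted middleware begins with an extra ' inside the double quotes, which may on its own be enough to make the proxy URL invalid.

```python
import json
import urllib.request


def clean_proxy_url(raw):
    """Strip surrounding whitespace and stray quote characters from a proxy URL."""
    return raw.strip().strip("'\"")


def origin_ip(proxy_url=None, timeout=10):
    """Return the address httpbin.org reports for this client.

    If proxy_url is given, both http and https traffic go through it;
    otherwise urllib falls back to its default (environment) proxy settings.
    """
    handlers = []
    if proxy_url:
        handlers.append(
            urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
        )
    opener = urllib.request.build_opener(*handlers)
    with opener.open("http://www.httpbin.org/ip", timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["origin"]


# Example usage (needs network access, so it is left commented out):
# proxy = clean_proxy_url("'http://112.87.69.226:9999")
# print(origin_ip())       # address seen without the proxy
# print(origin_ip(proxy))  # should differ if the proxy works and hides your address
```

If the second call times out or reports your own address, the proxy is dead or not anonymous. Separately, and as an assumption worth checking against the scrapy-splash documentation: Scrapy calls process_request in ascending order of the middleware number, so a middleware at 823 runs after scrapy_splash.SplashMiddleware at 725, which may already have packed the Splash arguments into the outgoing request by then. Passing the proxy in the args of SplashRequest, or giving the custom middleware a number below 725, might be worth trying.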