Spark SQL join: one task is very slow while the others finish quickly; I suspect data skew. Paid help is possible, message me privately.

Problem: when I use Spark SQL to join a table with itself, one task runs very slowly during execution while all the others finish quickly.

Code:

    spark.sql("select /*+ MERGEJOIN(t2) */ t1.bsm,t2.bsm " +
      " from temp t1" +
      " join temp t2 on t1.index != t2.index and st_overlaps(t1.geometry,t2.geometry)").show()

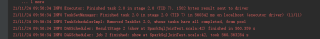

Result:

import org.apache.spark.sql.SparkSession

import org.gdal.ogr.Geometry

import org.locationtech.geomesa.spark.GeoMesaSparkKryoRegistrator

import org.locationtech.geomesa.spark.jts._

import org.locationtech.jts.geom.MultiPolygon

object SparkSqlJoinTest {

def main(args: Array[String]):Unit = {

val spark: SparkSession = SparkSession.builder()

.appName("testSpark")

.config("spark.serializer", "org.apache.spark.serializer.KryoSerializer")

.config("spark.kryo.registrator", classOf[GeoMesaSparkKryoRegistrator].getName)

.master("local[*]")

// .config("spark.sql.adaptive.enabled",true)

// .config("spark.sql.adaptive.coalescePartitions.enabled",true)

// .config("spark.sql.adaptive.coalescePartitions.minPartitionNum",1)

// .config("spark.sql.adaptive.skewJoin.enabled",true)

// .config("spark.sql.adaptive.skewJoin.skewedPartitionFactor",5)

.config("spark.sql.crossJoin.enabled",true)

.getOrCreate()

.withJTS

val geonamesParams = Map(

"hbase.zookeepers" -> "master",

"hbase.catalog" -> "test"

)

val geonamesDF = spark.read

.format("geomesa")

.options(geonamesParams)

.option("geomesa.feature", "test2000")

.load()

geonamesDF.where("layername='test2000'").createOrReplaceTempView("temp")

try{

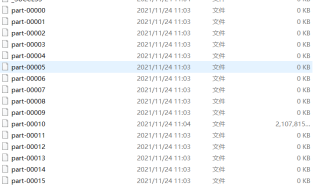

spark.sql("select /*+ skewjoin(t2) */ t1.bsm,t2.bsm from temp t1 join temp t2 ").rdd.saveAsTextFile("D:/test")

// spark.sql("select /*+ skewjoin(t2) */ t1.bsm,t2.bsm " +

// " from temp t1" +

// " join temp t2 on t1.index != t2.index and st_overlaps(t1.geometry,t2.geometry)").show()

}finally {

print("a")

}

}

}

Copy it into Notepad and send it to me.
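One possible direction for the overlap join in the question, offered as a sketch and not a tested fix. The join condition (`t1.index != t2.index and st_overlaps(...)`) contains no equality predicate, so Spark has no join key to shuffle on, and key-based skew handling has nothing to rebalance. A common workaround is to bucket each geometry into grid cells and join on the cell id first, so that only geometries sharing a cell are compared. The plain-Scala code below illustrates the idea on in-memory data. Everything in it is an assumption made for illustration: the `Feature` case class, the `GridBucketJoin` object, the `cellSize` parameter, and the use of bounding boxes with `boxesIntersect` standing in for `st_overlaps` on real geometries.

```scala
// Hypothetical stand-in for one row of `temp`: an id plus the bounding box of its geometry.
final case class Feature(index: Int, minX: Double, minY: Double, maxX: Double, maxY: Double)

object GridBucketJoin {

  // All grid cells (column, row) touched by the feature's bounding box,
  // for square cells with side length `cellSize`.
  def cells(f: Feature, cellSize: Double): Seq[(Long, Long)] =
    for {
      cx <- math.floor(f.minX / cellSize).toLong to math.floor(f.maxX / cellSize).toLong
      cy <- math.floor(f.minY / cellSize).toLong to math.floor(f.maxY / cellSize).toLong
    } yield (cx, cy)

  // Bounding-box intersection test; a real job would apply st_overlaps to the geometries here.
  def boxesIntersect(a: Feature, b: Feature): Boolean =
    a.minX <= b.maxX && b.minX <= a.maxX && a.minY <= b.maxY && b.minY <= a.maxY

  // Equi-join on cell id first, then run the expensive predicate only inside each cell.
  // The Set removes duplicates for pairs that share more than one cell.
  def candidatePairs(features: Seq[Feature], cellSize: Double): Set[(Int, Int)] = {
    val byCell: Map[(Long, Long), Seq[Feature]] =
      features
        .flatMap(f => cells(f, cellSize).map(c => c -> f))
        .groupBy(_._1)
        .map { case (cell, tagged) => cell -> tagged.map(_._2) }

    byCell.values.flatMap { bucket =>
      for {
        a <- bucket
        b <- bucket
        if a.index != b.index && boxesIntersect(a, b)
      } yield (a.index, b.index)
    }.toSet
  }

  def main(args: Array[String]): Unit = {
    val sample = Seq(
      Feature(1, 0.0, 0.0, 2.0, 2.0),
      Feature(2, 1.0, 1.0, 3.0, 3.0),
      Feature(3, 10.0, 10.0, 11.0, 11.0)
    )
    // Prints the pairs whose boxes intersect, found via the grid buckets.
    println(candidatePairs(sample, cellSize = 5.0).toList.sorted)
  }
}
```

In Spark the same idea would presumably be expressed by adding an exploded cell-id column to both sides of the self-join, joining on cell-id equality together with `st_overlaps`, and then de-duplicating the resulting pairs. With an equality key in place, a shuffle-based join becomes possible, and the commented-out `spark.sql.adaptive.skewJoin.*` settings may then have something to work with. The cell size is a tuning assumption: cells that are too large recreate the skew, while cells that are too small copy each geometry into many buckets.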