Using nltk to find words that appear in more than 5000 records

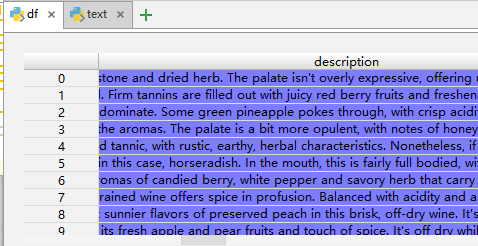

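One column of my DataFrame contains text.

I want to find the words that appear in more than 5000 records. Is there a function I can use for this?

I have no idea where to start...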

First strip the punctuation, then split the string into a list of words with split(), and count them with nltk.FreqDist():

import nltk

def my_split(s):
    # Replace punctuation in the text with spaces.
    # Edit this list to define your own set of punctuation marks.
    temp = [",",".","?","!",":",";","-","#","$","%","^","&","*","(",")","_","=","+","{","}","[","]","\\","|","'","<",">","~","`"]
    for e in temp:
        s = s.replace(e, " ")
    return s

test_str = my_split("I have a dream. A nice dream")

# FreqDist counts how many times each token occurs
freq_words = dict(nltk.FreqDist(test_str.split()))

print(freq_words)

Output:

{'I': 1, 'have': 1, 'a': 1, 'dream': 2, 'A': 1, 'nice': 1}
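Note that the count above is the total number of occurrences, whereas the question asks for the number of records a word appears in. A minimal sketch of that per-record count follows, assuming each record is a plain string. The names `document_frequency` and `words_in_more_than` are made up for illustration, and the regex tokenizer is a simplification. It uses `collections.Counter`; `nltk.FreqDist` is a subclass of `Counter`, so it can be swapped in.

```python
import re
from collections import Counter

def document_frequency(records):
    """Count, for each word, how many records contain it at least once."""
    df = Counter()
    for text in records:
        # Assumption: lowercase and keep runs of word characters as tokens.
        words = re.findall(r"\w+", text.lower())
        # set() makes each word count at most once per record.
        df.update(set(words))
    return df

def words_in_more_than(records, min_records):
    """Return {word: record_count} for words found in more than min_records records."""
    df = document_frequency(records)
    return {word: n for word, n in df.items() if n > min_records}

records = ["I have a dream.", "A nice dream", "a dream, a dream"]
print(words_in_more_than(records, 2))
```

For the DataFrame in the question, something like `words_in_more_than(df["text"], 5000)` should work, where `"text"` is a placeholder column name and the column is assumed to contain only strings.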

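Further refinements include case normalization and stemming, and you can also remove stopwords if you don't want them. nltk has classes for all of these.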
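Those refinements might look roughly like this. It is only a sketch: `normalize` is a made-up helper name, and the tiny `STOPWORDS` set is a hand-written stand-in. nltk's own English list is available via `nltk.corpus.stopwords.words("english")` after running `nltk.download("stopwords")`.

```python
import re
from nltk.stem import PorterStemmer

# Assumption: a small hand-written stopword set, used here to avoid a corpus download.
STOPWORDS = {"i", "a", "an", "the", "and", "or", "of", "to", "in", "is"}

stemmer = PorterStemmer()

def normalize(text):
    """Lowercase, tokenize, drop stopwords, and stem the remaining words."""
    words = re.findall(r"[a-z]+", text.lower())
    return [stemmer.stem(w) for w in words if w not in STOPWORDS]

print(normalize("I have a dream. A nice dream"))
```

Running the normalized tokens through the counting step merges variants such as "Dream" and "dreams" into a single entry.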