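Submitting a Spark job fails with a Cassandra error saying the Guava version is lower than 16.0.1, but the jar I checked is 19.0, and the job runs fine in Spark local mode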

Caused by: com.datastax.driver.core.exceptions.DriverInternalError: Detected incompatible version of Guava in the classpath. You need 16.0.1 or higher.

Cassandra is version 3.x, and the Guava jar that ships with it is 19.0, yet this error is still reported. Could someone please take a look?

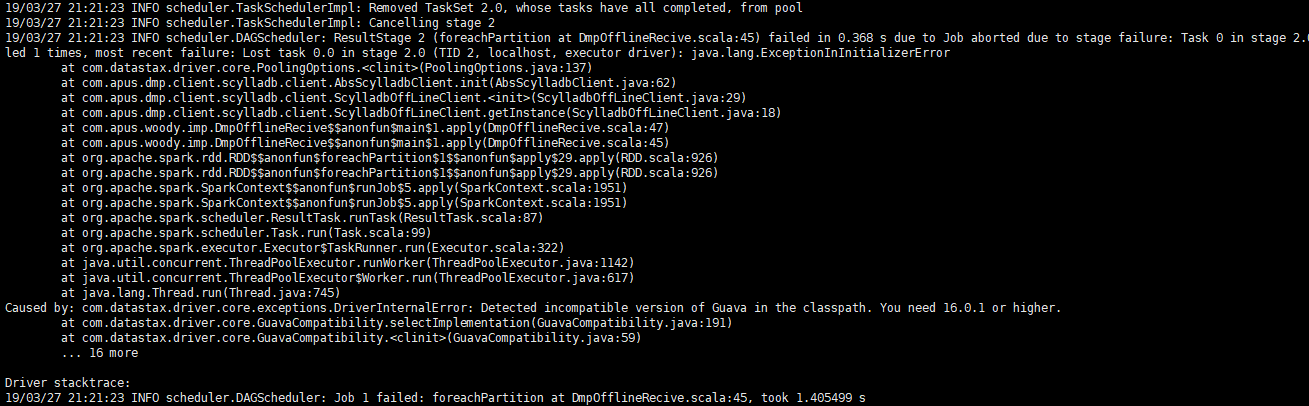

Error output:

19/03/27 21:21:23 INFO scheduler.DAGScheduler: ResultStage 2 (foreachPartition at DmpOfflineRecive.scala:45) failed in 0.368 s due to Job aborted due to stage failure: Task 0 in stage 2.0 failed 1 times, most recent failure: Lost task 0.0 in stage 2.0 (TID 2, localhost, executor driver): java.lang.ExceptionInInitializerError

at com.datastax.driver.core.PoolingOptions.<clinit>(PoolingOptions.java:137)

at com.apus.dmp.client.scylladb.client.AbsScylladbClient.init(AbsScylladbClient.java:62)

at com.apus.dmp.client.scylladb.client.ScylladbOffLineClient.<init>(ScylladbOffLineClient.java:29)

at com.apus.dmp.client.scylladb.client.ScylladbOffLineClient.getInstance(ScylladbOffLineClient.java:18)

at com.apus.woody.imp.DmpOfflineRecive$$anonfun$main$1.apply(DmpOfflineRecive.scala:47)

at com.apus.woody.imp.DmpOfflineRecive$$anonfun$main$1.apply(DmpOfflineRecive.scala:45)

at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1$$anonfun$apply$29.apply(RDD.scala:926)

at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1$$anonfun$apply$29.apply(RDD.scala:926)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:1951)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:1951)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:87)

at org.apache.spark.scheduler.Task.run(Task.scala:99)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:322)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: com.datastax.driver.core.exceptions.DriverInternalError: Detected incompatible version of Guava in the classpath. You need 16.0.1 or higher.

at com.datastax.driver.core.GuavaCompatibility.selectImplementation(GuavaCompatibility.java:191)

at com.datastax.driver.core.GuavaCompatibility.<clinit>(GuavaCompatibility.java:59)

... 16 more

Try --conf "spark.driver.extraClassPath=lib/guava-19.0.jar". Note, however, that this only worked for me in local mode.

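The cause is that one of the jars was built with maven-shade-plugin and has classes from an older Guava version bundled into it. In my project the culprit was hive-exec-2.3.6.jar. The fix is to find the module that depends on this jar and use the relocation configuration of maven-shade-plugin to move the conflicting classes to a different package path.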
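To confirm which jar actually supplies the Guava classes at runtime, a small diagnostic along the following lines may help. It is only a sketch: the class name GuavaLocator and the helper locate are hypothetical names introduced here, and com.google.common.util.concurrent.Futures is used merely as a representative Guava class. Calling locate from inside the foreachPartition closure should show what the executor sees, which may differ from what the driver sees.

```java
import java.security.CodeSource;

public class GuavaLocator {

    /**
     * Returns the location (jar or directory) a class was loaded from,
     * or a short note when the location cannot be determined.
     * Hypothetical helper, not part of Spark or the DataStax driver.
     */
    static String locate(String className) {
        ClassLoader loader = Thread.currentThread().getContextClassLoader();
        if (loader == null) {
            loader = GuavaLocator.class.getClassLoader();
        }
        try {
            // Load without initializing, so no static initializers run.
            Class<?> cls = Class.forName(className, false, loader);
            CodeSource source = cls.getProtectionDomain().getCodeSource();
            if (source == null || source.getLocation() == null) {
                return "(bootstrap or unknown source)";
            }
            return source.getLocation().toString();
        } catch (ClassNotFoundException | LinkageError e) {
            return "(not on classpath)";
        }
    }

    public static void main(String[] args) {
        // A representative Guava class; an assumption chosen for illustration.
        System.out.println("Guava loaded from: "
                + locate("com.google.common.util.concurrent.Futures"));
        // The driver class whose static initializer raised the error above.
        System.out.println("Driver loaded from: "
                + locate("com.datastax.driver.core.GuavaCompatibility"));
    }
}
```

If the printed Guava location is a fat jar such as hive-exec rather than guava-19.0.jar, that points to bundled older Guava classes taking precedence on the classpath.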