python requests 在linux服务器获取数据返回403,但,本地可以获取

最近学习python爬虫,遇到个不太好解决的问题,

望好心耐心解答,

以下代码

import requests

import time

import re

from bs4 import BeautifulSoup

v = input("URL:")

head = {

'method': 'GET',

'upgrade-insecure-requests': '1',

'user-agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/85.0.4183.121 Safari/537.36',

'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',

'sec-fetch-site': 'none',

'sec-fetch-mode': 'navigate',

'sec-fetch-dest': 'document',

'accept-language': 'zh-CN,zh;q=0.9',

'connection': 'close'

}

requestCoding = {}

def getPage(url, start):

try:

resp = requests.get(url, headers=head, timeout=(5, 10))

resp.encoding = getEncoding(resp)

if resp.status_code == 200:

html = BeautifulSoup(resp.text, 'html.parser')

page = html.find('head')

print(abs(round(start - time.time(), 2)), page)

else:

print(abs(round(start - time.time(), 2)), resp.status_code)

except Exception as message:

print('requests Error:', message, '耗时:', abs(round(start - time.time(), 2)), )

def getEncoding(resp):

try:

appCode = resp.apparent_encoding

htmlCode = requests.utils.get_encodings_from_content(resp.text)[0]

if appCode:

requestCoding['appCode'] = appCode

requestCoding['htmlCode'] = htmlCode

if appCode != htmlCode:

iso = re.search('ISO-8859', appCode, re.IGNORECASE)

win = re.search('Windows', appCode, re.IGNORECASE)

if iso:

return 'GBK'

if win:

return 'utf-8'

else:

return appCode

else:

return htmlCode

except:

requestCoding['manualSet'] = 'utf-8'

return requestCoding['manualSet']

if __name__ == '__main__':

main_start = time.time()

print(getPage(v, main_start))

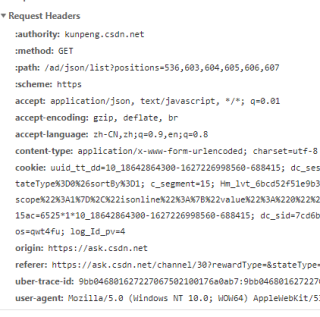

可能是requests伪造的头部信息不全。

要在headers中添加抓包时的请求头参数

比如

url = "https://xxxxxxxxxxx"

headers={

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 UBrowser/6.2.4098.3 Safari/537.36',

'Host' : 'xxxxxxxxxxx',

'Origin' : 'xxxxxxxxxxxxx',

'Referer' : 'xxxxxxxxxxxxxx',

'Cookie': 'xxxxxxxxxxxxxxxx'

}

res = requests.get(url,headers=headers)

其中请求头的参数 'User-Agent','Host','Origin', 'Referer','Cookie'可以在浏览器的f12控制台的Network中看到

几行代码的事为啥写这么多呢

估计被反爬了,请求头加cookie,refer试试

我也遇到过,换header, 代理ip都没有用的那种,楼主解决问题了吗