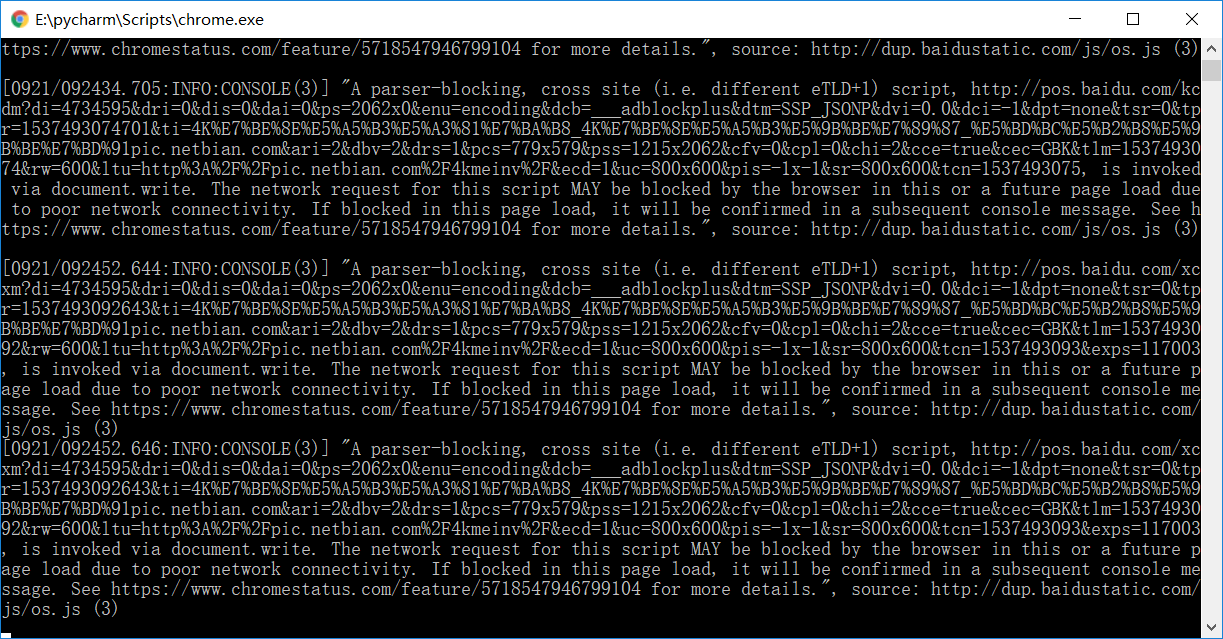

Scraping girl pictures (meizitu) with Python 3 and Selenium: can anyone tell me what error chromedriver is showing?

It keeps scraping the content of the first page over and over.

I just want to know where this error comes from. If anyone is willing to give me some pointers, I'd be very grateful!
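A likely explanation for the repeated first page, based on `get_page` in the source code below: every call loads `http://pic.netbian.com/4kmeinv/` (page 1) and then waits with `EC.text_to_be_present_in_element` on the page-number `<input>`. That condition compares against the element's visible text, but an `<input>` keeps its contents in the `value` attribute, so the wait most likely never succeeds (the code also waits for the page number to be present before it has typed it). After 10 seconds a `TimeoutException` is raised, and the `except` branch calls `get_page(page)` again with the same page, which reloads page 1 and repeats until Python's recursion limit is reached. Even if the wait did succeed, `text_to_be_present_in_element` returns `True` rather than the element, so `input.send_keys(...)` would fail; `EC.presence_of_element_located` returns the element itself. A minimal sketch of bounded retrying, where `retry` is a hypothetical helper and not part of Selenium:

```python
import time


def retry(func, *args, attempts=3, exceptions=(Exception,), delay=0.0):
    """Call func(*args) up to `attempts` times and return its result.

    Only exception types listed in `exceptions` trigger another attempt;
    anything else propagates at once. If every attempt fails, the last
    error is re-raised instead of recursing forever.
    """
    if attempts < 1:
        raise ValueError('attempts must be at least 1')
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return func(*args)
        except exceptions as exc:
            last_error = exc
            print(f'attempt {attempt}/{attempts} failed: {exc!r}')
            if delay:
                time.sleep(delay)  # give a slow page a moment before retrying
    raise last_error
```

Used with the question's code, this assumes the `try`/`except TimeoutException` is removed from `get_page` and `main` calls `retry(get_page, page, attempts=3, exceptions=(TimeoutException,))`, so a page that keeps timing out stops the run with a clear error instead of looping on page 1.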

Source code:

```python
import requests
import os
from selenium import webdriver
from selenium.common.exceptions import TimeoutException
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.common.by import By
from selenium.webdriver.support.wait import WebDriverWait
from pyquery import PyQuery as pq

chrome_options = webdriver.ChromeOptions()
chrome_options.add_argument('--headless')
browser = webdriver.Chrome(chrome_options = chrome_options)
wait = WebDriverWait(browser,10)

def main():
    for page in range(1,21):
        get_page(page)
    browser.close()

def get_image(url):
    headers = {
        'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.92 Safari/537.36',
        'Host':'pic.netbian.com'
    }
    return requests.get(url,headers = headers).content

def get_page(page):
    print('Scraping page', page)
    try:
        url = 'http://pic.netbian.com/4kmeinv/'
        browser.get(url)
        input = wait.until(
            EC.text_to_be_present_in_element((By.CSS_SELECTOR, '#main > div.page > input[type="text"]'), str(page)))
        submit = wait.until(
            EC.element_to_be_clickable((By.CSS_SELECTOR, '#jump-url')))
        input.send_keys(page)
        submit.click()
        wait.until(EC.presence_of_element_located((By.CSS_SELECTOR, '#main > div.slist > ul')))
        doc = pq(browser.page_source)
        items = doc('#main > div.slist > ul > li').items()
        for item in items:
            src = item.find('.a .img').attr('src')
            content = get_image(src)
            save(content)
    except TimeoutException:
        get_page(page)

def save(content):
    path = 'E:' + os.path.sep + 'meizitu'
    os.mkdir(path)
    try:
        for i in range(21*21):
            img_path = path + os.path.sep + 'i.png'
            with open(img_path,'wb') as f:
                f.write(content)
            print('Saved image', i)
    except Exception:
        print('Save failed')

if __name__ == '__main__':
    main()
```
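The `save` function has problems of its own, independent of Selenium: `os.mkdir(path)` runs outside the `try` on every call, so the second image raises `FileExistsError`, which `get_page` does not catch (it only handles `TimeoutException`); `'i.png'` is a string literal rather than the loop variable, so every write targets the same file; and the `range(21*21)` loop rewrites the same `content` 441 times. A minimal per-image sketch, where the `name` parameter and the `.jpg` extension are assumptions of this sketch rather than anything from the question:

```python
import os


def save(content, name, folder):
    """Write one image's bytes to <folder>/<name>.jpg and return the path.

    Assumption: the downloaded images are JPEG; change the extension if not.
    """
    # exist_ok=True: no FileExistsError when the folder is already there
    os.makedirs(folder, exist_ok=True)
    # build the file name from the argument, not from a fixed literal like 'i.png'
    img_path = os.path.join(folder, f'{name}.jpg')
    with open(img_path, 'wb') as f:
        f.write(content)
    return img_path
```

In `get_page`, something like `for i, item in enumerate(items, start=1):` followed by `save(content, f'{page}_{i}', 'E:' + os.path.sep + 'meizitu')` would give each image on each page its own file.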

Your code has no indentation. Nobody can read Python code without indentation.
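Two further likely problems sit in the image loop. The selector `'.a .img'` means "elements with class `img` inside elements with class `a`", whereas `'a img'` matches `<img>` tags inside `<a>` tags, which seems to be the intent; when nothing matches, `src` ends up empty and `requests.get` cannot use it. Also, if the `src` values are site-relative paths such as `/uploads/...` (an assumption, not checked against the live page), `requests.get` needs the scheme and host added. The helper `absolute_src` below is hypothetical; it resolves `src` against the listing URL with the standard library's `urllib.parse.urljoin`:

```python
from urllib.parse import urljoin

# Listing page from the question, used as the base for relative paths
BASE_URL = 'http://pic.netbian.com/4kmeinv/'


def absolute_src(src, base=BASE_URL):
    """Turn an <img src> value into an absolute URL that requests can fetch."""
    if not src:
        # an empty or missing src usually means the CSS selector matched nothing
        raise ValueError('empty src: check the CSS selector (e.g. "a img", not ".a .img")')
    # urljoin keeps absolute URLs unchanged and resolves relative ones against base
    return urljoin(base, src)
```

In the loop this would read `src = absolute_src(item.find('a img').attr('src'))` before calling `get_image(src)`.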