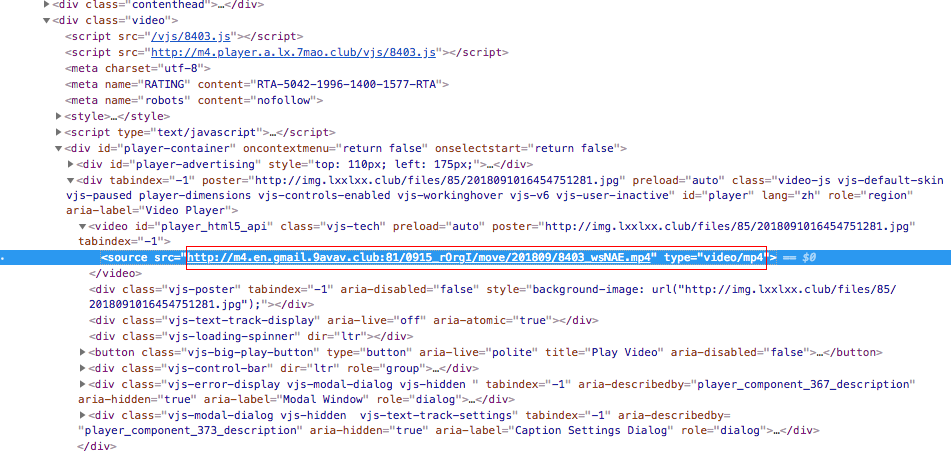

抓取网页里的链接地址

我试图抓取上图的链接可是返回以下错误

Traceback (most recent call last):

File "/Users/euro3/Library/Preferences/PyCharmCE2018.1/scratches/scratch_7.py", line 65, in

add_index_url(url,num,file_object)

File "/Users/euro3/Library/Preferences/PyCharmCE2018.1/scratches/scratch_7.py", line 51, in add_index_url

write_url=get_download_url(html)

File "/Users/euro3/Library/Preferences/PyCharmCE2018.1/scratches/scratch_7.py", line 14, in get_download_url

url_a=td.find('source')

AttributeError: 'NoneType' object has no attribute 'find'

下面是我运行的代码:

import sys

import urllib2

import os

import chardet

from bs4 import BeautifulSoup

import time

reload(sys)

sys.setdefaultencoding("utf-8")

def get_download_url(broken_html):

soup=BeautifulSoup(broken_html,'html.parser')

fixed_html=soup.prettify()

td=soup.find('video',attrs={'id':'player_html5_api'})

url_a=td.find('source')

url_a=url_a['src']

return url_a

def get_title(broken_html):

soup=BeautifulSoup(broken_html,'html.parser')

fixed_html=soup.prettify()

title=soup.find('h1')

title=title.string

return title

def url_open(url):

req=urllib2.Request(url)

req.add_header('User-Agent','Mozilla/5.0')

response=urllib2.urlopen(url)

html=response.read()

return html

def add_index_url(url,num,file_object):

for i in range(1,num):

new_url=url+str(i)

print("----------------------start scraping page"+str(i)+"---------------------")

html=url_open(new_url)

time.sleep(1)

soup=BeautifulSoup(html,'html.parser')

fixed_html=soup.prettify()

a_urls=soup.find_all('div',attrs={'class':'pic'})

host="http://zhs.lxxlxx.com"

for a_url in a_urls:

a_url=a_url.find('a')

a_url=a_url.get('href')

a_url=host+a_url

print(a_url)

html=url_open(a_url)

#html=unicode(html,'GBK').encode("utf-8")

html=html.decode('utf-8')

write_title=get_title(html)

write_url=get_download_url(html)

file_object.write(write_title+"\n")

file_object.write(write_url+"\n")

if __name__=='__main__':

url="http://zhs.lxxlxx.com/new/"

filename="down_load_url.txt"

num=int(raw_input("please input the page num you want to download:"))

num=num+1

if os.path.exists(filename):

file_object=open(filename,'w+')

else:

os.mknod(filename)

file_object=open(filename,'w+')

add_index_url(url,num,file_object)

print("----------------------scraping finish--------------------------")

file_object.close()

有谁可以帮忙修改一下,本人自学python中所以不是很明白哪里出错

有可能是soup没有查到video标签,然后返回了None给td,建议你在执行soup.find后判断返回值有没有数据

修改下个函数,然后在打印结果里查看是否能找到td的结果,然后再查看td的结果是否和有source结果

def get_download_url(broken_html):

soup=BeautifulSoup(broken_html,'html.parser')

print (soup)

fixed_html=soup.prettify()

td=soup.find('video',attrs={'id':'player_html5_api'})

print (td)

url_a=td.find('source')

url_a=url_a['src']

return url_a