HDFS file upload/download fails with an error

Uploading does not report an error, but the resulting file on HDFS has a size of 0.

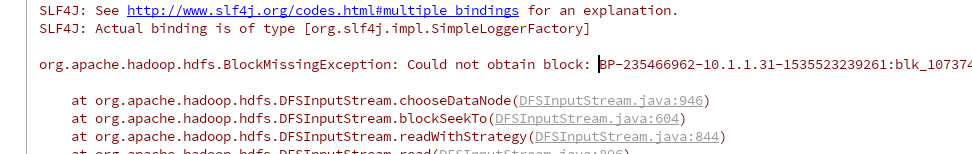

When downloading, I get:

org.apache.hadoop.hdfs.BlockMissingException: Could not obtain block: BP-127181180-172.17.0.2-1526283881280:blk_1073741825_1001 file=/wing/LICENSE.txt
    at org.apache.hadoop.hdfs.DFSInputStream.chooseDataNode(DFSInputStream.java:946)
    at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:604)
    at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:844)
    at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:896)
    at java.io.DataInputStream.read(DataInputStream.java:100)
    at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:85)
    at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:59)
    at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:119)
    at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:366)
    at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:338)
    at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:289)
    at org.apache.hadoop.fs.FileSystem.copyToLocalFile(FileSystem.java:2030)
    at hadoop.hdfs.HdfsTest.testDownLoad(HdfsTest.java:76)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)
    at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)
    at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)
    at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)
    at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:325)
    at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:78)
    at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:57)
    at org.junit.runners.ParentRunner$3.run(ParentRunner.java:290)
    at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:71)
    at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:288)
    at org.junit.runners.ParentRunner.access$000(ParentRunner.java:58)
    at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:268)
    at org.junit.runners.ParentRunner.run(ParentRunner.java:363)
    at org.junit.runner.JUnitCore.run(JUnitCore.java:137)
    at com.intellij.junit4.JUnit4IdeaTestRunner.startRunnerWithArgs(JUnit4IdeaTestRunner.java:68)
    at com.intellij.rt.execution.junit.IdeaTestRunner$Repeater.startRunnerWithArgs(IdeaTestRunner.java:47)
    at com.intellij.rt.execution.junit.JUnitStarter.prepareStreamsAndStart(JUnitStarter.java:242)
    at com.intellij.rt.execution.junit.JUnitStarter.main(JUnitStarter.java:70)

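Could anyone help take a look? The cluster is deployed on Docker, with 1 master and 2 slaves. jps shows that both the DataNode and NameNode processes are alive.

The web UI on port 50070 is also reachable, and the nodes it lists are shown as alive.

On the server itself, hdfs dfs -put / -get operations succeed.

The Java code is as follows: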

private FileSystem createClient() throws IOException {
    // Run the program as user root
    System.setProperty("HADOOP_USER_NAME", "root");
    // Specify the NameNode address
    Configuration conf = new Configuration();
    conf.set("fs.defaultFS", "hdfs://Master:9000");
    // Create the HDFS client
    return FileSystem.get(conf);
}

@Test
public void testMkdir() throws IOException {
    FileSystem client = createClient();
    // Create the directory
    client.mkdirs(new Path(PATH));
    // Close the client
    client.close();
}

@Test
public void testUpload() throws Exception {
    FileSystem client = createClient();
    // Build the input stream (local file)
    InputStream in = new FileInputStream("d:\\bcprov-jdk16-1.46.jar");
    // Build the output stream (HDFS file)
    OutputStream out = client.create(new Path(PATH + "/bcprov.jar"));
    // Upload
    IOUtils.copyBytes(in, out, 1024);
    // Close the client
    client.close();
}

@Test
public void testDownLoad() throws Exception {
    FileSystem client = createClient();
    // Build the input stream (HDFS file)
    FSDataInputStream in = client.open(new Path("hdfs://Master:9000/wing/LICENSE.txt"));
    // Build the output stream (local file)
    OutputStream out = new FileOutputStream("d:\\hello.txt");
    // Download: as written, this copies to System.out; `out` is opened but never written to
    IOUtils.copyBytes(in, System.out, 1024, false);
    IOUtils.closeStream(in);
    IOUtils.closeStream(out);
    // Close the client
    client.close();
}

Other API calls work fine, for example creating a directory.

public static void main(String[] args) throws Exception {
    System.setProperty("javax.xml.parsers.DocumentBuilderFactory",
            "com.sun.org.apache.xerces.internal.jaxp.DocumentBuilderFactoryImpl");

    // Initialize the HDFS file system
    Configuration cfg = new Configuration();
    cfg.set("hadoop.job.ugi", "hadoop,supergroup");
    cfg.set("fs.default.name", "hdfs://master:9000");
    cfg.set("mapred.job.tracker", "hdfs://master:9001");
    cfg.set("dfs.http.address", "master:50070");
    FileSystem fs = FileSystem.get(cfg);

    // Copy a file from HDFS to the local disk
    String localPath = "d:\\temp";
    String hdfsPath = "/tmp/query_ret/7/attempt_201007151545_0024_r_000000_0";
    fs.copyToLocalFile(new Path(hdfsPath), new Path(localPath));
    System.out.print(123);
}

Running: INSERT OVERWRITE DIRECTORY '/tmp/query_ret/4' select userid from chatagret where 1 = 1 order by userid

[2010-07-15 20:36:43,578][ERROR][ULThread_0][ULTJob.java86]
java.io.IOException: Cannot run program "chmod": CreateProcess error=2, ?????????
    at java.lang.ProcessBuilder.start(ProcessBuilder.java:459)
    at org.apache.hadoop.util.Shell.runCommand(Shell.java:149)
    at org.apache.hadoop.util.Shell.run(Shell.java:134)
    at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:286)
    at org.apache.hadoop.util.Shell.execCommand(Shell.java:354)
    at org.apache.hadoop.util.Shell.execCommand(Shell.java:337)
    at org.apache.hadoop.fs.RawLocalFileSystem.execCommand(RawLocalFileSystem.java:481)
    at org.apache.hadoop.fs.RawLocalFileSystem.setPermission(RawLocalFileSystem.java:473)
    at org.apache.hadoop.fs.FilterFileSystem.setPermission(FilterFileSystem.java:280)
    at org.apache.hadoop.fs.ChecksumFileSystem.create(ChecksumFileSystem.java:372)
    at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:479)
    at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:460)
    at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:367)
    at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:208)
    at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:199)
    at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:142)
    at org.apache.hadoop.fs.FileSystem.copyToLocalFile(FileSystem.java:1211)
    at org.apache.hadoop.fs.FileSystem.copyToLocalFile(FileSystem.java:1192)
    at com.huawei.wad.ups.platform.extinterface.entity.task.ULTJobService.generateFileHadoop(ULTJobService.java:707)
    at com.huawei.wad.ups.platform.extinterface.entity.task.ULTJobService.jobProcessing(ULTJobService.java:165)
    at com.huawei.wad.ups.platform.extinterface.entity.task.ULTJob.execute(ULTJob.java:79)
    at com.huawei.wad.ups.platform.service.ultaskmgt.ULTaskHandler.run(ULTaskHandler.java:93)
    at java.lang.Thread.run(Thread.java:619)
Caused by: java.io.IOException: CreateProcess error=2, ?????????
    at java.lang.ProcessImpl.create(Native Method)
    at java.lang.ProcessImpl.<init>(ProcessImpl.java:81)
    at java.lang.ProcessImpl.start(ProcessImpl.java:30)
    at java.lang.ProcessBuilder.start(ProcessBuilder.java:452)
    ... 22 more

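An HDFS file size of 0 may be caused by mismatched IDs between the nodes. The DataNode has not crashed; delete the current directory on every node, then restart HDFS.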
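Before deleting anything, the mismatch can be checked directly. The reply does not say which ID it means; the sketch below assumes it is the clusterID stored in the current/VERSION file of the NameNode and DataNode storage directories. The class name ClusterIdCheck and the two file paths passed on the command line are hypothetical; the real locations depend on your dfs.namenode.name.dir and dfs.datanode.data.dir settings.

```java
import java.io.IOException;
import java.io.Reader;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Properties;

// Sketch (hypothetical helper): compare the clusterID recorded by the
// NameNode with the one recorded by a DataNode.
class ClusterIdCheck {

    // Reads the clusterID entry from a storage VERSION file (key=value format).
    static String readClusterId(Path versionFile) throws IOException {
        Properties props = new Properties();
        try (Reader reader = Files.newBufferedReader(versionFile, StandardCharsets.UTF_8)) {
            props.load(reader);
        }
        return props.getProperty("clusterID");
    }

    // True only when both files carry the same, non-null clusterID.
    static boolean sameCluster(Path nameNodeVersion, Path dataNodeVersion) throws IOException {
        String nn = readClusterId(nameNodeVersion);
        String dn = readClusterId(dataNodeVersion);
        return nn != null && nn.equals(dn);
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 2) {
            System.err.println("usage: ClusterIdCheck <namenode VERSION> <datanode VERSION>");
            return;
        }
        Path nn = Paths.get(args[0]);
        Path dn = Paths.get(args[1]);
        System.out.println("NameNode clusterID: " + readClusterId(nn));
        System.out.println("DataNode clusterID: " + readClusterId(dn));
        System.out.println(sameCluster(nn, dn) ? "IDs match" : "IDs differ");
    }
}
```

If the IDs already match, wiping the current directories is unlikely to help, and the cause is probably elsewhere.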

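Hello OP. Judging from the error, the client cannot find the data block, and the frames that follow in the stack trace involve the input stream. This is very likely a communication problem between nodes; in other words, one of the nodes cannot be reached.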
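One hint pointing in that direction is the block pool ID in the exception message. A block pool ID has the form BP-<random>-<NameNode IP>-<creation time>, so it records the IP the NameNode had when the file system was formatted. The sketch below pulls that IP out of the message and checks whether it is in a private range. The class and method names are hypothetical, and this only shows the NameNode's address at format time; that the DataNodes advertise similar container-internal addresses is an assumption.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Sketch (hypothetical helper): extract the IP embedded in a block pool ID.
class BlockPoolAddress {

    // Matches BP-<random>-<IPv4>-<timestamp> and captures the IPv4 part.
    private static final Pattern BLOCK_POOL =
            Pattern.compile("BP-\\d+-(\\d{1,3}(?:\\.\\d{1,3}){3})-\\d+");

    // Returns the IPv4 address of the first block pool ID found, or null.
    static String extractIp(String message) {
        Matcher m = BLOCK_POOL.matcher(message);
        return m.find() ? m.group(1) : null;
    }

    // True for the private ranges 10/8, 172.16/12 and 192.168/16.
    static boolean isPrivate(String ip) throws UnknownHostException {
        return InetAddress.getByName(ip).isSiteLocalAddress();
    }

    public static void main(String[] args) throws UnknownHostException {
        String msg = "Could not obtain block: "
                + "BP-127181180-172.17.0.2-1526283881280:blk_1073741825_1001 "
                + "file=/wing/LICENSE.txt";
        String ip = extractIp(msg);
        System.out.println(ip + " private=" + isPrivate(ip));
    }
}
```

An address in 172.17.0.0/16 is what Docker's default bridge network typically hands out, and such addresses are normally not routable from a development machine outside the Docker host.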

Check whether your path is correct.

Does your computer have enough memory? In particular, the C: drive needs enough free space.

It is probably a problem with the nodes. You could delete all the nodes and then restart to see if that helps.

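I have the same situation: I can create and delete directories, but both upload and download fail. I hope the OP can help solve it.

Has the OP solved this?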

Being able to create and upload folders but not upload or download files shows that the NameNode is fine and the problem is with the DataNode.

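Most likely the DataNode's port 9866 is not mapped to the host, so after the Java client gets the DataNode information from the NameNode, it cannot reach the DataNode. (9866 is the default DataNode data-transfer port in Hadoop 3.x; in Hadoop 2.x the default is 50010.)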
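A quick way to test this from the machine that runs the Java client is a plain TCP connect to the DataNode's data-transfer port. This is a minimal sketch, not part of the Hadoop API: the class name PortCheck, the default host "localhost", and the port list are assumptions (9866 comes from the reply above, 50010 is the Hadoop 2.x default).

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Sketch (hypothetical helper): check whether a TCP port accepts connections.
class PortCheck {

    // Returns false on refusal, timeout, or an unresolvable host name.
    static boolean canConnect(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Host to probe; pass the DataNode host name or IP as the first argument.
        String host = args.length > 0 ? args[0] : "localhost";
        // Assumed DataNode data-transfer ports: Hadoop 3.x and Hadoop 2.x defaults.
        int[] ports = {9866, 50010};
        for (int port : ports) {
            System.out.println(host + ":" + port + " reachable="
                    + canConnect(host, port, 3000));
        }
    }
}
```

If the NameNode port (9000 in the question) is reachable but the DataNode port is not, that matches the symptom described here: metadata operations such as mkdirs succeed, while reading or writing block data fails.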

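This is the same problem I ran into; I also built my Hadoop cluster with Docker.

I only solved it today. You can take a look at this post of mine, which has a detailed analysis: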

【Docker x Hadoop x Java API】xxx could only be written to 0 of the 1 minReplication nodes,There are 3