Hadoop deployed as a distributed cluster on virtual machines: DataNode cannot connect to NameNode

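I formatted the NameNode with hdfs namenode -format, then copied the configured Hadoop directory to the other two virtual machines.

core-site.xml configuration (the value of fs.defaultFS is the NameNode's address; it is the same on all three machines):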

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.216.201:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/mym/hadoop/hadoop-2.4.1/tmp</value>

</property>
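
To confirm that every node actually picks up the same fs.defaultFS, the effective value can be read back with hdfs getconf. The following is a minimal sketch, not part of the original post: the function name check_default_fs and the HDFS_CMD override variable are hypothetical, and it assumes the hdfs command is on the PATH.

```shell
#!/usr/bin/env bash
# Compare the effective fs.defaultFS on this node with an expected URI.
# HDFS_CMD is a hypothetical override hook for this sketch; it defaults to "hdfs".
check_default_fs() {
  local expected="$1" actual
  # "hdfs getconf -confKey <key>" prints the value Hadoop resolves for <key>.
  actual="$("${HDFS_CMD:-hdfs}" getconf -confKey fs.defaultFS)" || return 2
  if [ "$actual" = "$expected" ]; then
    echo "OK: fs.defaultFS=${actual}"
  else
    echo "MISMATCH: expected ${expected}, got ${actual}" >&2
    return 1
  fi
}

# Example, run on each of the three machines:
# check_default_fs hdfs://192.168.216.201:9000
```

Running it on every machine makes a stale or partially copied core-site.xml easy to spot.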

I started the NameNode and then the DataNode, but the DataNode does not show up in the web UI. The DataNode log shows:

2018-01-29 15:06:02,528 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Block pool (Datanode Uuid unassigned) service to mym/192.168.216.201:9000 starting to offer service

2018-01-29 15:06:02,548 INFO org.apache.hadoop.ipc.Server: IPC Server Responder: starting

2018-01-29 15:06:02,574 INFO org.apache.hadoop.ipc.Server: IPC Server listener on 50020: starting

2018-01-29 15:06:02,726 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Problem connecting to server: mym/192.168.216.201:9000

2018-01-29 15:06:07,730 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Problem connecting to server: mym/192.168.216.201:9000

2018-01-29 15:06:12,738 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Problem connecting to server: mym/192.168.216.201:9000

2018-01-29 15:06:17,741 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Problem connecting to server: mym/192.168.216.201:9000

2018-01-29 15:06:22,751 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Problem connecting to server: mym/192.168.216.201:9000

2018-01-29 15:06:27,754 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Problem connecting to server: mym/192.168.216.201:9000

2018-01-29 15:06:32,760 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Problem connecting to server: mym/192.168.216.201:9000

2018-01-29 15:06:37,765 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Problem connecting to server: mym/192.168.216.201:9000

2018-01-29 15:06:42,780 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Problem connecting to server: mym/192.168.216.201:9000

2018-01-29 15:06:47,788 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Problem connecting to server: mym/192.168.216.201:9000

2018-01-29 15:06:52,793 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Problem connecting to server: mym/192.168.216.201:9000

2018-01-29 15:06:57,799 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Problem connecting to server: mym/192.168.216.201:9000

2018-01-29 15:07:02,804 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Problem connecting to server: mym/192.168.216.201:9000
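
A quick way to see how long the DataNode has been retrying is to count these warnings in its log. This is a minimal sketch assuming a bash shell; the function name count_connect_warnings and the log path in the usage comment are illustrative and not taken from the post.

```shell
#!/usr/bin/env bash
# Count "Problem connecting to server" warnings in a DataNode log file.
# Usage: count_connect_warnings <logfile>
count_connect_warnings() {
  local logfile="$1"
  # grep -c prints the number of matching lines (0 if none); "|| true" keeps
  # a zero-match result from being treated as a failure under "set -e".
  grep -c 'Problem connecting to server' "$logfile" || true
}

# Example (the path is an assumption; adjust it to your install):
# count_connect_warnings "$HADOOP_HOME/logs/hadoop-mini2-datanode-mini2.log"
```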

Network check:

On the NameNode machine:

[mym@mym hadoop]$ netstat -an | grep 9000

tcp 0 0 192.168.216.201:9000 0.0.0.0:* LISTEN

tcp 0 0 192.168.216.201:9000 192.168.216.202:46604 ESTABLISHED

On one of the DataNode machines:

[mini2@mini2 sbin]$ netstat -an | grep 9000

tcp 0 0 192.168.216.202:46604 192.168.216.201:9000 ESTABLISHED

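What I have tried:

1. Configured the hosts file on all three machines and removed the loopback entries, then reformatted the NameNode and tested again. Result: not solved.

2. Turned off both the firewall and firewalld; telnet 192.168.216.201 9000 connects. Still not working.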
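
The two checks above (host name resolution and port reachability) can be scripted so they are easy to repeat on each node. This is a minimal sketch assuming bash, GNU coreutils timeout, and glibc getent; the function names port_open and resolve_host are hypothetical.

```shell
#!/usr/bin/env bash
# Return 0 if a TCP connection to host:port succeeds within 3 seconds.
# Uses bash's built-in /dev/tcp redirection, so no telnet or nc is needed.
port_open() {
  local host="$1" port="$2"
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Print the first address a host name resolves to via /etc/hosts or DNS.
resolve_host() {
  getent hosts "$1" | awk '{print $1; exit}'
}

# Example, using the host name and address from the post:
# resolve_host mym    # should be the LAN address, not a loopback address
# port_open 192.168.216.201 9000 && echo "port 9000 reachable"
```

A successful TCP connection only shows that the port is open; it says nothing about whether the DataNode's registration with the NameNode succeeds afterwards.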

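Notes:

1. After starting, the DataNode did not create a current directory; the NameNode did.

2. The Hadoop version is 2.4.1.

3. jps shows the NameNode and the DataNode processes running on their respective machines (though I suspect the DataNode did not actually start successfully).

4. If I start a DataNode on the NameNode machine, it shows up in the web UI.

5. All three machines have host names configured and can ping one another.

Any help would be appreciated.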

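Solved: the problem was in the communication between the DataNode and the NameNode, caused by inconsistent Linux users. The DataNode connects with its own user name to the NameNode running under a user on the remote machine. The DataNode was logged in as mini2, while the NameNode was logged in as mym. In other words, when the DataNode connects to the NameNode it does something like scp: xxx mini2@namenodeIP xxx. The NameNode's Linux machine has no mini2 user at all, and the NameNode runs under mym, so the connection cannot succeed.

I dug this hole for myself by not using the same user name from the start. The login user name can probably be configured, but I have not found out how yet. My fix: use the same Linux login user name for the NameNode and the DataNodes (the password can be the same too), and preferably set up passwordless SSH login as well.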
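
To check whether the Hadoop daemons really run under different Linux users, as the fix above describes, the owner of each daemon process can be printed on every node. This is a minimal sketch for Linux only (it relies on pgrep, /proc, and GNU stat); the function name proc_owner is hypothetical.

```shell
#!/usr/bin/env bash
# Print the distinct Linux users that own processes whose command line
# matches the given pattern (Linux only: relies on /proc and GNU stat).
proc_owner() {
  local pattern="$1" pid
  for pid in $(pgrep -f -- "$pattern"); do
    stat -c '%U' "/proc/${pid}" 2>/dev/null
  done | sort -u
}

# Example: run on each node and compare the results.
# proc_owner 'org.apache.hadoop.hdfs.server.namenode.NameNode'
# proc_owner 'org.apache.hadoop.hdfs.server.datanode.DataNode'
```

If the two commands print different user names on different machines, the cluster matches the situation described in the fix.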

Clear out the files under /home/mym/hadoop/hadoop-2.4.1/tmp on the DataNode, then start the DataNode again and see.
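
A cautious variant of this suggestion is to move the directory aside rather than delete it, so the old contents can be restored if needed. This is a minimal sketch: the function name set_aside_dir is hypothetical, and HADOOP_HOME is assumed to point at the Hadoop install.

```shell
#!/usr/bin/env bash
# Move a directory aside under a timestamped name and recreate it empty.
# Renaming instead of deleting keeps the old contents recoverable.
# Prints the backup path on success.
set_aside_dir() {
  local dir="${1%/}" backup
  backup="${dir}.bak.$(date +%Y%m%d%H%M%S)"
  mv -- "$dir" "$backup" && mkdir -p -- "$dir" && printf '%s\n' "$backup"
}

# Example on a DataNode (stop the daemon first so no files are in use):
# "$HADOOP_HOME/sbin/hadoop-daemon.sh" stop datanode
# set_aside_dir /home/mym/hadoop/hadoop-2.4.1/tmp
# "$HADOOP_HOME/sbin/hadoop-daemon.sh" start datanode
```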

This is my configuration; change the values to match yours. My guess is that you have not configured the size setting further down.

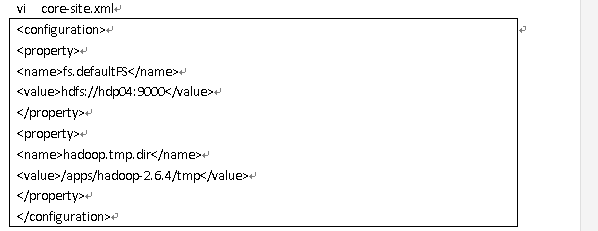

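vi core-site.xml

<property>
<name>fs.defaultFS</name>
<value>hdfs://hdp04:9000</value>
</property>

<property>
<name>hadoop.tmp.dir</name>
<value>/apps/hadoop-2.6.4/tmp</value>
</property>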

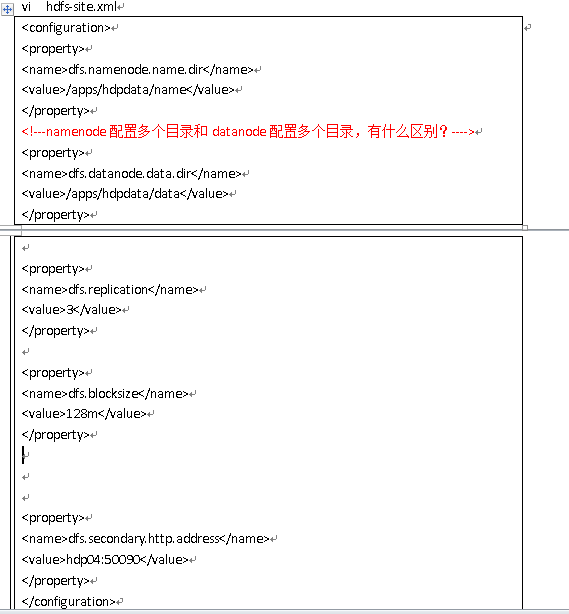

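vi hdfs-site.xml

<property>
<name>dfs.namenode.name.dir</name>
<value>/apps/hdpdata/name</value>
</property>

<property>
<name>dfs.datanode.data.dir</name>
<value>/apps/hdpdata/data</value>
</property>

<property>
<name>dfs.replication</name>
<value>3</value>
</property>

<property>
<name>dfs.blocksize</name>
<value>128m</value>
</property>

<property>
<name>dfs.secondary.http.address</name>
<value>hdp04:50090</value>
</property>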

vi slaves

hdp01

hdp02

hdp03

Distribute to the other two machines:

scp -r /apps/hadoop-2.6.4 root@hdp02:/apps

scp -r /apps/hadoop-2.6.4 root@hdp03:/apps

Change the values to your own paths,

then format the NameNode again and see.