Running a JAR with spark-shell fails: main class not found

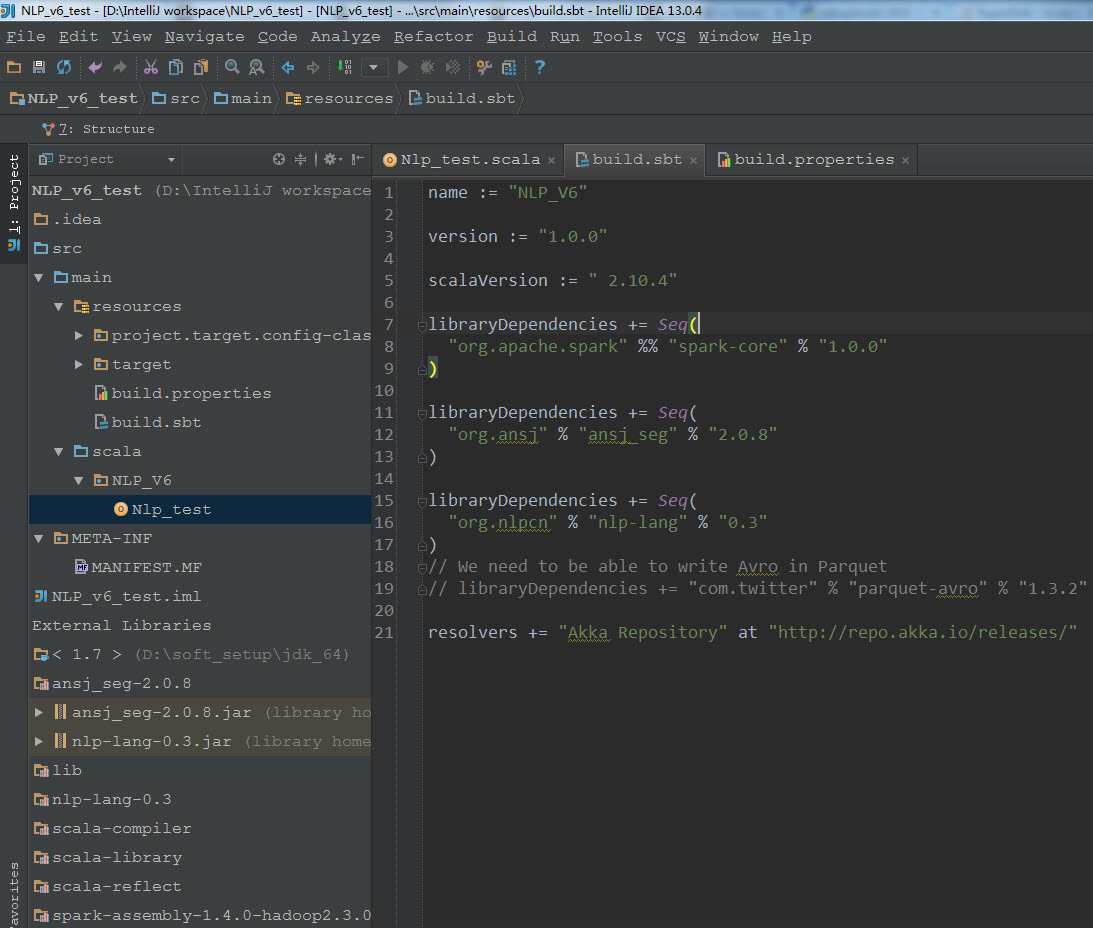

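This is a Scala project in IntelliJ. After packaging it with sbt and running it on spark-shell, it fails with "main class not found". The project uses two Chinese word-segmentation jars (ansj_seg-2.0.8.jar and nlp-lang-0.3.jar), which have already been added to External Libraries. Packaging succeeds; only running fails.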
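If the two jars were added only through IntelliJ's External Libraries, note that sbt does not read IntelliJ project settings, and `sbt package` puts only the project's own classes into the jar, without any dependency jars. A minimal sketch, assuming sbt 1.x and the sbt-assembly plugin: copy ansj_seg-2.0.8.jar and nlp-lang-0.3.jar into the project's `lib/` directory (sbt's default location for unmanaged jars) and build a single fat jar. The plugin, Scala, and Spark versions below are assumptions and should be matched to the cluster.

```scala
// project/plugins.sbt (plugin version is an assumption; check for the current release)
addSbtPlugin("com.eed3si9n" % "sbt-assembly" % "1.2.0")

// build.sbt (sketch; versions are assumptions, align them with the cluster)
name := "NLP_v6_test"
scalaVersion := "2.11.12"

// Spark is already installed on the cluster, so mark it "provided"
// to keep it out of the fat jar
libraryDependencies += "org.apache.spark" %% "spark-core" % "2.4.8" % "provided"

// ansj_seg-2.0.8.jar and nlp-lang-0.3.jar placed in lib/ are picked up
// automatically as unmanaged dependencies and bundled by `sbt assembly`

// Record the entry point in the jar manifest; it must match --class exactly
assembly / mainClass := Some("NLP_V6.Nlp_test")
```

Running `sbt assembly` then writes the fat jar under `target/scala-2.11/`, and that jar can be passed to spark-submit without listing the tokenizer jars separately.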

Submit command (spark-submit):

```
[gaohui@hadoop-1-2 test]$ spark-submit --master yarn \
    --driver-memory 5G --num-executors 20 \
    --executor-cores 16 --executor-memory 10G \
    --conf spark.serializer=org.apache.spark.serializer.KryoSerializer \
    --class NLP_V6.Nlp_test \
    --jars /home/gaohui/test/NLP_v6_test.jar \
    /home/gaohui/test/NLP_v6_test.jar
```
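`--class NLP_V6.Nlp_test` is resolved as a fully qualified name, so the jar must contain the entry `NLP_V6/Nlp_test.class`. In Scala the `package NLP_V6` clause, not the source directory, decides that path: a file under `src/main/scala/NLP_V6/` without a package clause compiles to `Nlp_test.class` at the jar root. The sketch below (a hypothetical helper named `JarClassCheck`, using only the JDK's `java.util.zip`) checks whether the class named in `--class` is actually in the jar and lists any class entries whose path contains the simple name, which shows the real package if it differs.

```scala
import java.util.zip.ZipFile

// Hypothetical diagnostic helper, not part of Spark or sbt
object JarClassCheck {
  // "NLP_V6.Nlp_test" -> "NLP_V6/Nlp_test.class", the path the JVM looks up in a jar
  def entryFor(className: String): String =
    className.replace('.', '/') + ".class"

  // True if the jar at jarPath contains the compiled class
  def containsClass(jarPath: String, className: String): Boolean = {
    val zip = new ZipFile(jarPath)
    try zip.getEntry(entryFor(className)) != null
    finally zip.close()
  }

  // All .class entries whose path contains simpleName, in jar order
  def matchingEntries(jarPath: String, simpleName: String): List[String] = {
    val zip = new ZipFile(jarPath)
    try {
      val entries = zip.entries()
      var found = List.empty[String]
      while (entries.hasMoreElements) {
        val name = entries.nextElement().getName
        if (name.endsWith(".class") && name.contains(simpleName)) found ::= name
      }
      found.reverse
    } finally zip.close()
  }

  def main(args: Array[String]): Unit = {
    // Defaults are the jar path and class name from the command above
    val jar = if (args.length > 0) args(0) else "/home/gaohui/test/NLP_v6_test.jar"
    val cls = if (args.length > 1) args(1) else "NLP_V6.Nlp_test"
    val status = if (containsClass(jar, cls)) "found" else "missing"
    println(s"$status ${entryFor(cls)} in $jar")
    matchingEntries(jar, cls.split('.').last).foreach(e => println(s"  candidate: $e"))
  }
}
```

If the class is reported missing, the `--class` value should be changed to match the listed candidate (or the `package` clause added). Separately, `--jars` in the command points at the application jar itself, not at ansj_seg-2.0.8.jar and nlp-lang-0.3.jar; without a fat jar, those two would need to be listed there, comma-separated.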

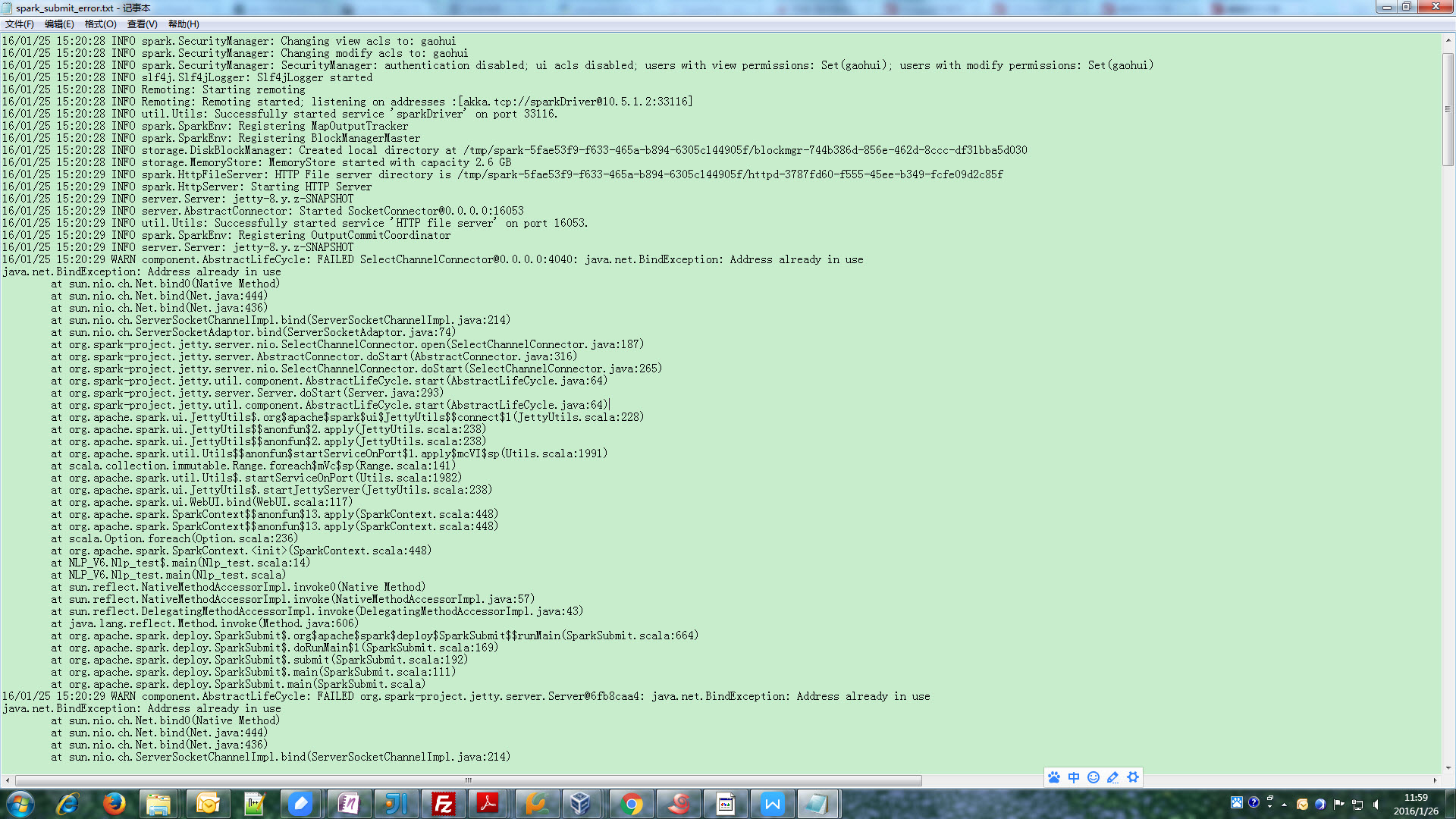

Error screenshot: